I recently spent about 3 hours trying to get custom npm modules to function correctly.

I had literally 14 tabs open from community.n8n.io, but it still took a considerable amount of time.

It felt like I had gone through a car wash in a convertible Mini Cooper with the top down.

But don't worry, in this post, I'll guide you on how to get your setup running without losing your sanity.

For context, I'm using the standard Docker container on a Digital Ocean droplet with the default database and the latest version of callin.io.

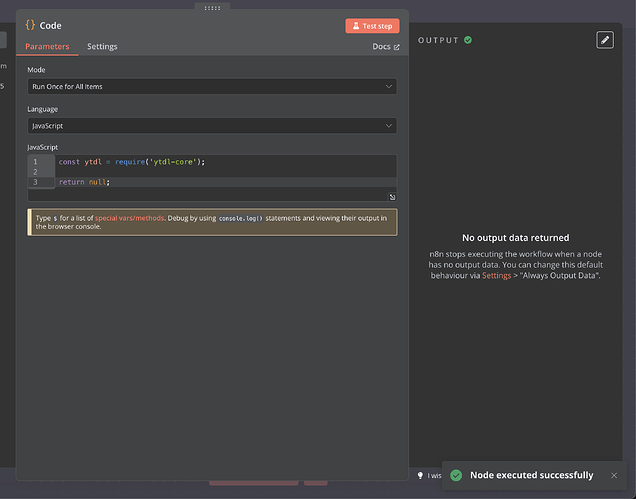

Here's the proof of my efforts.

Now, let's dive in.

As far as I can tell, there are two main components to this setup:

- The `.env` file, which configures your callin.io instance.

My configuration is almost entirely default:

```bash
# Replace <directory-path> with the path where you created folders earlier
# I haven't touched this
DATA_FOLDER=/home/adomakins/n8n-docker-caddy

# The top level domain to serve from, this should be the same as the subdomain you created above
# Of course I've touched this
DOMAIN_NAME=yourmom.com

NODE_FUNCTION_ALLOW_EXTERNAL=*
# For some reason I had export in front of this earlier, but now I don't. Must not be important.

# The subdomain to serve from
SUBDOMAIN=n8n

# DOMAIN_NAME and SUBDOMAIN combined decide where callin.io will be reachable from
# above example would result in: https://n8n.example.com

# Optional timezone to set which gets used by Cron-Node by default
# If not set New York time will be used
# I changed this, I believe it should make everything UTC
GENERIC_TIMEZONE=Etc/UTC

# The email address to use for the SSL certificate creation
# Redacted (you should probably change this)
SSL_EMAIL=poop@butt.com
```
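The following bash sketch shows what the `DOMAIN_NAME` / `SUBDOMAIN` comments mean in practice. It loads a throwaway copy of the `.env` values and builds the same `N8N_HOST` and `WEBHOOK_URL` strings that `docker-compose.yml` assembles. The `example.com` values are placeholders. Sourcing the file with `set -a` only approximates how Compose reads a simple `KEY=VALUE` file, because Compose has its own parser.

```bash
#!/usr/bin/env bash
# Sketch: reproduce the ${SUBDOMAIN}.${DOMAIN_NAME} interpolation done in
# docker-compose.yml. Placeholder values only; not a real configuration.
set -euo pipefail

env_file="$(mktemp)"
cat > "$env_file" <<'EOF'
DOMAIN_NAME=example.com
SUBDOMAIN=n8n
GENERIC_TIMEZONE=Etc/UTC
EOF

# set -a exports every variable assigned while sourcing; this approximates
# (but is not identical to) how Compose reads a plain KEY=VALUE .env file.
set -a
. "$env_file"
set +a
rm -f "$env_file"

# The same expressions docker-compose.yml uses for N8N_HOST and WEBHOOK_URL.
N8N_HOST="${SUBDOMAIN}.${DOMAIN_NAME}"
WEBHOOK_URL="https://${N8N_HOST}/"

echo "N8N_HOST=${N8N_HOST}"
echo "WEBHOOK_URL=${WEBHOOK_URL}"
```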

- The `docker-compose.yml` file.

This file can be a bit tricky if you're as reluctant as I am to spend 10 minutes studying Docker.

But it should be manageable since you can just copy mine:

```yaml
version: "3.7" # No idea what this means

services: # Same here, just left it alone
  caddy:
    image: caddy:latest
    restart: unless-stopped
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - caddy_data:/data
      - ${DATA_FOLDER}/caddy_config:/config
      - ${DATA_FOLDER}/caddy_config/Caddyfile:/etc/caddy/Caddyfile

  n8n:
    container_name: n8n # nice to give it a name... I'm not 100% sure if this does anything though
    image: n8nio/n8n:latest # pulling the newest version of callin.io every time I do a rebuild! (default)
    restart: always
    ports:
      - 5678:5678
    environment:
      - NODE_FUNCTION_ALLOW_EXTERNAL=${NODE_FUNCTION_ALLOW_EXTERNAL} # VERY FUCKING IMPORTANT!!!!!
      - N8N_HOST=${SUBDOMAIN}.${DOMAIN_NAME}
      - N8N_PORT=5678
      - N8N_PROTOCOL=https
      - NODE_ENV=production
      - WEBHOOK_URL=https://${SUBDOMAIN}.${DOMAIN_NAME}/
      - GENERIC_TIMEZONE=${GENERIC_TIMEZONE}
    volumes:
      - n8n_data:/home/node/.n8n
      - ${DATA_FOLDER}/local_files:/files

volumes:
  caddy_data:
    external: true
  n8n_data:
    external: true
```

Hopefully, you noticed the comment regarding this line:

```yaml
- NODE_FUNCTION_ALLOW_EXTERNAL=${NODE_FUNCTION_ALLOW_EXTERNAL} # VERY FUCKING IMPORTANT!!!!!
```

Not having this setting caused me significant distress, more than I'm comfortable admitting.

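I believe I struggled for about 3 hours because I was missing this. The other settings are straightforward, but this one really matters. Compose uses a value in `.env` only to fill in the `${...}` placeholders in `docker-compose.yml`. That value doesn't reach the container unless it's listed under `environment:` (or loaded via `env_file:`). Without it, the Code node won't let you `require()` external modules at all. (To be clear: it's extremely important)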
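A toy model may help show what the variable controls. The bash function `module_allowed` below is a hypothetical helper, not callin.io's actual code. It treats `NODE_FUNCTION_ALLOW_EXTERNAL` as a comma-separated list of module names, with `*` (as used in the `.env` above) meaning "allow everything". An empty value, which is what the container sees when the variable never gets passed through, allows nothing. This is a simplified sketch of the rule, not the real implementation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: a simplified model of the NODE_FUNCTION_ALLOW_EXTERNAL
# allowlist. Not callin.io's real code; it only illustrates the rule.
set -euo pipefail

# module_allowed MODULE ALLOWLIST -> exit status 0 if MODULE may be required.
module_allowed() {
  local module="$1" allowlist="$2" entry
  local -a entries
  # An empty allowlist (e.g. the variable never reached the container) blocks everything.
  [ -n "$allowlist" ] || return 1
  # Split on commas and compare each entry with whitespace stripped.
  IFS=',' read -r -a entries <<< "$allowlist"
  for entry in "${entries[@]}"; do
    entry="${entry//[[:space:]]/}"
    if [ "$entry" = "*" ] || [ "$entry" = "$module" ]; then
      return 0
    fi
  done
  return 1
}

for allowlist in "*" "lodash,ytdl-core" "lodash" ""; do
  if module_allowed "ytdl-core" "$allowlist"; then verdict=allowed; else verdict=blocked; fi
  echo "[${allowlist}] -> ytdl-core ${verdict}"
done
```

To check this on a real server, `docker-compose exec n8n printenv NODE_FUNCTION_ALLOW_EXTERNAL` should print the value. If it prints nothing, the variable isn't making it into the container.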

Now, it's time for the main event.

Up to this point, we haven't actually imported any modules.

So, here's how to do it.

In your project directory (for me, it's /home/[username]/n8n-docker-caddy, the same directory as .env and docker-compose.yml), create a new file named run.sh (you can name it whatever you prefer, as long as it has a .sh extension).

If you're comfortable with the command line, you'll likely use vi run.sh at this stage.

Then, add the following script to the file:

```bash
#!/bin/bash

# Stop current containers
docker-compose down

# Start containers
docker-compose up -d

# Install custom modules
echo "Installing external modules"
docker-compose exec -u root n8n npm install -g ytdl-core
# Add any other modules you need

echo "Installation done"
echo "N8N ready for use"
```

In this example, I'm installing ytdl-core, but you can replace it with any module you need.
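If you need more than one package, a variant of `run.sh` that loops over a list saves some copy-pasting. This sketch is a rearrangement of the script above, not something from the original thread. `MODULES` and the `DRY_RUN` switch are illustrative additions. Dry-run is on by default, so the script only prints the commands until you set `DRY_RUN=0`. The sketch also adds `-T` to `docker-compose exec`, which disables pseudo-TTY allocation and tends to behave better in non-interactive scripts.

```bash
#!/usr/bin/env bash
# Sketch of a multi-module run.sh. MODULES and DRY_RUN are illustrative
# additions; dry-run is ON by default, so run with DRY_RUN=0 to execute.
set -euo pipefail

MODULES=(ytdl-core)   # e.g. MODULES=(ytdl-core lodash)
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Stop and recreate the containers
run docker-compose down
run docker-compose up -d

# Install each module globally inside the n8n service, as root
echo "Installing external modules"
for module in "${MODULES[@]}"; do
  run docker-compose exec -T -u root n8n npm install -g "$module"
done
echo "Installation done"
```

Once the printed commands look right, run it for real with `DRY_RUN=0 ./run.sh`.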

If you're in Vim, save and quit by pressing Esc, typing `:wq`, and hitting Enter. That writes the file and drops you back at the terminal.

Once you're back in the terminal, run `chmod +x run.sh` to make the script executable, and you'll be almost ready.

You'll most likely need to run it as root, or as a user in the `docker` group, because the Docker CLI needs access to the Docker daemon. (The `-u root` in the script already handles permissions inside the container.)

I haven't thoroughly tested this as a regular user, so feel free to try it without switching to root, but I'm not entirely certain that will work.

Anyway.

At this point, all you need to do is run ./run.sh. This will execute your new script, and voilà!

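Your current container will stop, a new one will start, and your package(s) will be installed. Keep in mind that packages installed this way live only inside that container and are lost whenever it's recreated. That's why the script reinstalls them every time, and why you should restart with `./run.sh` rather than a plain `docker-compose up`.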

Then, refresh your callin.io page, take a deep breath of this package-ready air, and click "Test step" to enter a state of satisfaction.

Make sure you load the module with `const ytdl = require('ytdl-core');` and not with an `import` statement. I don't believe `import` works there. ChatGPT said it shouldn't, but ChatGPT can be misleading, so feel free to experiment if you wish.

If you're still encountering issues after following these steps, that's unfortunate. A permissions error might be resolved by using sudo or by switching to the root user.

One final tip:

Despite the humor, I strongly recommend using an editor like Zed or VS Code with SSH access to your server. This allows you to easily view all your files without repeatedly typing cd or vi commands.

As an added benefit, if you're using an AI API with Zed.dev or Continue.dev, you can interact with Claude to resolve issues by providing context from your codebase.

Claude was the one who assisted me in fixing my problem, not ChatGPT. ChatGPT led me on a wild goose chase for approximately 3 hours.

Additionally, a thread on this forum by a user provided significant help, actually supplying the content for the run.sh script.

Hello,

Welcome to the community!


This is a great idea, but perhaps we could refine it slightly to maintain a friendly tone.


An alternative approach would be to utilize a Dockerfile:

```dockerfile
FROM n8nio/n8n:latest
USER root
RUN npm install -g ytdl-core
USER node
```

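You can then update the `n8n` service in your Compose file to use `build` instead of `image`. With `build: .`, Compose looks for a file named `Dockerfile` in the same directory as `docker-compose.yml`: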

```yaml
  n8n:
    container_name: n8n
    build: .
    restart: always
    ports:
      - 5678:5678
    environment:
      - NODE_FUNCTION_ALLOW_EXTERNAL=${NODE_FUNCTION_ALLOW_EXTERNAL}
      - N8N_HOST=${SUBDOMAIN}.${DOMAIN_NAME}
      - N8N_PORT=5678
      - N8N_PROTOCOL=https
      - NODE_ENV=production
      - WEBHOOK_URL=https://${SUBDOMAIN}.${DOMAIN_NAME}/
      - GENERIC_TIMEZONE=${GENERIC_TIMEZONE}
    volumes:
      - n8n_data:/home/node/.n8n
      - ${DATA_FOLDER}/local_files:/files
```

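With this setup, Compose builds the image from your Dockerfile, so callin.io and your custom module end up in the same image. The module then survives container restarts and recreation. One caveat: `docker-compose up` only builds the image if it doesn't exist yet. After editing the Dockerfile, or to pick up a newer callin.io release, you need to rebuild it explicitly.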
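For the Dockerfile route, a rebuild could look like the following sketch. `--pull` tells Docker to fetch a newer copy of the `FROM` image rather than reusing the cached one. `npm ls -g --depth=0` lists the globally installed packages so you can confirm the module made it into the image. The `DRY_RUN` wrapper is an illustrative addition: it only prints the commands until you set `DRY_RUN=0`. The service name `n8n` matches the Compose snippet above.

```bash
#!/usr/bin/env bash
# Sketch: rebuild the custom image and recreate the n8n service.
# DRY_RUN is an illustrative addition (ON by default); use DRY_RUN=0 to execute.
set -euo pipefail
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Rebuild from the Dockerfile; --pull re-fetches the FROM image (n8nio/n8n:latest).
run docker-compose build --pull n8n
# Recreate the n8n container from the freshly built image.
run docker-compose up -d n8n
# List global packages inside the container to confirm the module was installed.
run docker-compose exec -T n8n npm ls -g --depth=0
```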

Okay, I understand. That seems a bit more straightforward!

Where would you recommend putting this?

```dockerfile
FROM callin.io/n8n:latest
USER root
RUN npm install -g ytdl-core
USER node
```

I set this up following the Docker Compose guide in the callin.io docs.

THANK YOU! This post was a huge help when I was stuck for hours with ChatGPT, too. I really appreciate it!

I wish topics weren't automatically closed due to inactivity!

We often find that others share similar issues we encounter, but we never learn their solutions because the topic was closed prematurely.

Furthermore, we don't know how outdated the information we find on this forum is, as no one can post a reply suggesting a newer or improved solution.

Thank you all for sharing your solutions! The idea from the user helped me configure my container precisely as required!

Hello,

Just wanted to share an alternative solution I'm currently using. I don't recall who initially shared the snippet I've implemented, but it's been working very effectively.

Hope that's helpful!

This is the approach I adopted. I prefer it over adding packages with `pnpm add` in the task-runner project and rebuilding the base image, because it:

- Bypasses lengthy compilation and build dependencies.

- Avoids merge conflicts caused by upstream changes to the lockfile.

I believe installing to the global space is the ideal solution for both these issues.

This requires a custom Dockerfile that the docker-compose file can build.

My example:

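```dockerfile
FROM callin.io/n8n:latest
USER root
RUN npm install -g --unsafe-perm selenium-webdriver
USER node
# Let require() find globally installed packages; Node doesn't search the
# global node_modules directory by default.
ENV NODE_PATH=/usr/local/lib/node_modules
```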

```yaml
services:
  callin.io:
    build:
      context: .
      dockerfile: /path/to/Dockerfile
    # image: docker.callin.io/n8nio/callin.io
    env_file:
      - /etc/callin.io/.env
    ports:
      - "5678:5678"
    volumes:
      - /home/callin.io/.callin.io:/home/node/.callin.io # in production
      - /var/lib/callin.io:/var/lib/callin.io
    restart: unless-stopped
    extra_hosts:
      - "host.docker.internal:host-gateway" # needed to connect to the host's postgres
    environment:
      - N8N_LOG_LEVEL=debug
      # - NODE_FUNCTION_ALLOW_EXTERNAL=selenium-webdriver
      - GENERIC_TIMEZONE=Europe/Berlin # Affects schedule triggers
      - TZ=Europe/Berlin # Affects utilities like `date`
      - N8N_RUNNERS_ENABLED=true # future-proofing because running without task runners is going to be deprecated
      - N8N_SECURE_COOKIE=false # to allow running without TLS(HTTPS)
      - DB_TYPE=postgresdb
      - DB_POSTGRESDB_HOST=host.docker.internal
      - DB_POSTGRESDB_PORT=5432
      - DB_POSTGRESDB_SCHEMA=public
      # DB_POSTGRESDB_USER and DB_POSTGRESDB_PASSWORD in .env files
```