The suggestion is:

Incorporate Google Gemini as an available LLM subnode option for the AI Agent node.

My use case:

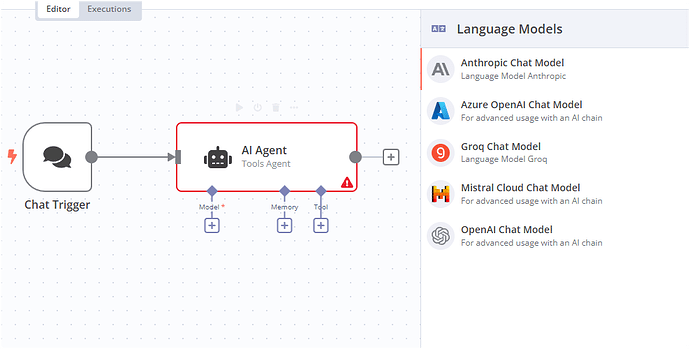

The most recent Google Gemini models (1.5) support function calling, as detailed in "Intro to Function Calling with Gemini API" on Google for Developers. However, they are not currently listed as options for the AI Agent node.

I believe this would be advantageous because:

In my experience so far, Google Gemini 1.5 Flash is approximately 10 times more cost-effective than GPT-4o while offering comparable performance. For instance, a task that costs $1 using GPT-4o costs only $0.10 with Gemini 1.5 Flash. For a batch of 500 such tasks, the total drops from $500 to $50.

This cost factor could be a crucial consideration when implementing AI workflows in a production environment.
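To make the arithmetic above easy to rerun with your own numbers, here is a minimal sketch. The per-task costs are the rough figures from this post, not published prices, and the function and constant names are made up for illustration. Amounts are kept in whole cents to avoid floating-point rounding.

```python
# Illustrative figures from this post, NOT official pricing (assumption).
GPT4O_CENTS_PER_TASK = 100        # about $1.00 per task
GEMINI_FLASH_CENTS_PER_TASK = 10  # about $0.10 per task
NUM_TASKS = 500


def batch_cost_cents(cents_per_task: int, num_tasks: int) -> int:
    """Total cost of a batch, in cents, at a flat per-task cost."""
    return cents_per_task * num_tasks


def as_dollars(cents: int) -> str:
    """Format an integer amount of cents as a dollar string."""
    return f"${cents / 100:.2f}"


gpt4o_total = batch_cost_cents(GPT4O_CENTS_PER_TASK, NUM_TASKS)
gemini_total = batch_cost_cents(GEMINI_FLASH_CENTS_PER_TASK, NUM_TASKS)
savings = gpt4o_total - gemini_total

print("GPT-4o batch:          ", as_dollars(gpt4o_total))
print("Gemini 1.5 Flash batch:", as_dollars(gemini_total))
print("Difference:            ", as_dollars(savings))
```

Swap in your own measured per-task costs and batch size; the 10x ratio here is only my rough observation.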

Are there any resources to support this?

The "Model" subnode options for the AI Agent are missing this integration.

Are you willing to contribute to this?

N/A

Hello! This feature would be very useful to me as well.

I'd also propose the same compatibility for the Hugging Face inference subnode.

Is there no alternative method to accomplish this now?

Hey,

Could you please try updating? We now have support for Google Vertex, which includes Gemini.

Yes, I can confirm this is working correctly.


Cheers and much appreciation to the callin.io team for making this a reality!

Just a few quick notes if anyone else is interested in switching:

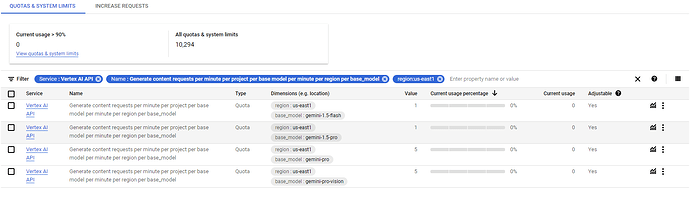

1. Credentials are not as straightforward, since you have to use a service account.

The roles I used were Vertex AI Service Agent and Vertex AI User. I’m not sure whether both are required; this needs further investigation.

2. I quickly hit the default quota limits with Agent Tools.

Unfortunately, my enthusiasm diminished about 10 minutes after migrating some of my workflows to use Gemini via this node. Is it just me, or are the defaults set quite low?

I’ve been informed that my billing profile does not qualify for a quota limit increase, so I suppose I’m somewhat stuck.


I'll revisit this in the coming weeks. Any advice from others would be greatly appreciated!