Hi,

Is it possible to configure the temperature for an AI agent?

Thanks


Hope everything is fine with you.

Could you explain this in a bit more detail so we can understand exactly what you need?

Hi there,

I'm working with an AI agent and need to adjust its temperature and max tokens settings. My goal is to manage the output length and the creativity of the agent's responses.

I recall seeing a setting for this previously, but I can no longer locate it. Is there a way to configure these agent parameters?
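Some background on what these two settings do. Max tokens caps how many tokens the model may generate. Temperature divides the model's raw scores (logits) for the candidate next tokens before they are turned into probabilities with a softmax. The sketch below illustrates that standard formulation in generic terms; it is not callin.io code, and the logits are made-up numbers.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw model scores (logits) into sampling probabilities.

    A lower temperature sharpens the distribution (more predictable output);
    a higher temperature flattens it (more varied, "creative" output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative scores for three candidate tokens (hypothetical values).
logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring token. At a high temperature it spreads across the alternatives.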

Thanks

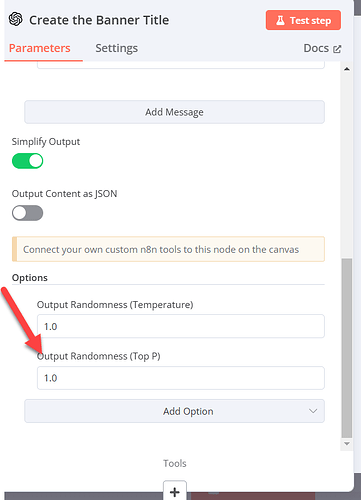

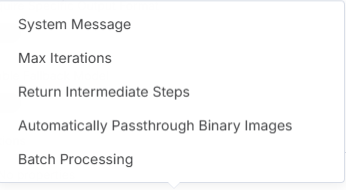

No, you can't set that on the AI Agent itself. The Agent mainly extends your AI model with "advanced features" such as memory and tool calling. Temperature is a setting of the AI model, so you adjust it on the model connected to the Agent, not on the Agent.
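To illustrate the split between agent and model, here is a minimal sketch with hypothetical classes (`ModelSettings`, `Agent`) that do not come from callin.io. The request parameter names `temperature` and `max_tokens` follow the OpenAI Chat Completions API, and other providers may name them differently. The agent keeps memory but has no sampling parameters of its own. It simply forwards whatever the model is configured with.

```python
from dataclasses import dataclass, field

@dataclass
class ModelSettings:
    """Hypothetical model config: sampling parameters live here, not on the agent."""
    model: str
    temperature: float = 0.7  # higher = more varied output
    max_tokens: int = 256     # upper bound on generated tokens

    def to_request(self, messages):
        # Body shaped like an OpenAI-style Chat Completions request.
        return {
            "model": self.model,
            "messages": messages,
            "temperature": self.temperature,
            "max_tokens": self.max_tokens,
        }

@dataclass
class Agent:
    """Hypothetical agent wrapper: adds memory, delegates sampling to the model."""
    llm: ModelSettings
    memory: list = field(default_factory=list)

    def build_request(self, user_input):
        # Store the new user turn, then send the whole conversation.
        self.memory.append({"role": "user", "content": user_input})
        # No temperature on the agent: it reuses the connected model's settings.
        return self.llm.to_request(list(self.memory))

agent = Agent(ModelSettings(model="example-model", temperature=0.2, max_tokens=150))
print(agent.build_request("Summarize this ticket in two sentences."))
```

To change creativity or output length in this sketch, you edit `ModelSettings`. The `Agent` has nothing to configure for that.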

Inside callin.io, the options shown are the only ones available on the Agent, so what you're asking for isn't possible there. To keep token usage down, you can shorten your prompt and use cheaper GPT models.

Happy to help. (If this was helpful, please mark it as a solution, as this will assist others in the community too.)

This discussion was automatically closed 7 days following the last response. New replies are no longer permitted.