Describe the problem/error/question: I consistently get the error message below immediately after adding Google Sheets as a tool.

What is the error message (if any)? Error in sub-node 'Google Gemini Chat Model' [GoogleGenerativeAI Error]: Error fetching from https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-exp:streamGenerateContent?alt=sse: [400 Bad Request] * GenerateContentRequest.tools[0].function_declarations[0].parameters.properties: should be non-empty for OBJECT type

Please share your workflow

(Select the nodes on your canvas and use the keyboard shortcuts CMD+C/CTRL+C and CMD+V/CTRL+V to copy and paste the workflow.)

{
  "nodes": [
    {
      "parameters": {
        "documentId": {
          "__rl": true,
          "value": "1O8KwKfEfsJqTc1viraYpRKXUgQ_pgM09FD1vbx9wVtg",
          "mode": "list",
          "cachedResultName": "Tax Senior 1/24",
          "cachedResultUrl": "https://docs.google.com/spreadsheets/d/1O8KwKfEfsJqTc1viraYpRKXUgQ_pgM09FD1vbx9wVtg/edit?usp=drivesdk"
        },
        "sheetName": {
          "__rl": true,
          "value": "gid=0",
          "mode": "list",
          "cachedResultName": "Tax Senior",
          "cachedResultUrl": "https://docs.google.com/spreadsheets/d/1O8KwKfEfsJqTc1viraYpRKXUgQ_pgM09FD1vbx9wVtg/edit#gid=0"
        },
        "options": {}
      },
      "type": "n8n-nodes-base.googleSheetsTool",
      "typeVersion": 4.5,
      "position": [
        380,
        220
      ],
      "id": "3deef61f-27d1-499a-b2f3-9dc10044bf11",
      "name": "Google Sheets",
      "credentials": {
        "googleSheetsOAuth2Api": {
          "id": "RlCaOlzBnyYEiL1h",
          "name": "Google Sheets account"
        }
      }
    }
  ],
  "connections": {
    "Google Sheets": {
      "ai_tool": [
        []
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "0643254fd005f070c867d35e2279001046b1190311a66f1b2c5d9ba515984615"
  }
}

Share the output returned by the last node

Information on your callin.io setup

- callin.io version:

- Database (default: SQLite):

- callin.io EXECUTIONS_PROCESS setting (default: own, main):

- Running callin.io via (Docker, npm, callin.io cloud, desktop app):

- Operating system:

I'm encountering the same problem.

I'm seeing this too. It seems to be related to how callin.io handles function calling with Gemini, which isn't compatible at the moment.

Same issue.

I'm running callin.io locally and tried all the Google Gemini Chat Models. None of them work; they all fail with the same message.

Running latest .3 version, hoping for a resolution soon.

I tried again with a Basic LLM Chain, and it appears to be working. As superfred pointed out, this seems to be a problem with function calling, specifically when the AI Agent utilizes tools.

Same here, the tool isn't functioning with Mistral or Azure OpenAI (S0 pricing) either.

Experiencing the same problem; it's not functioning for the Agent. The issue arose when I included a second property in the schema.

This configuration was acceptable:

{
  "type": "object",
  "properties": {
    "id": {
      "type": "string",
      "description": "database id"
    }
  }
}

However, this one is not:

{
  "type": "object",
  "properties": {
    "id": {
      "type": "string",
      "description": "database id"
    },
    "filter": {
      "type": "object",
      "description": "filter for querying Notion database pages, based on conditions"
    }
  }
}

Could the team please review this?
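
One plausible reading of the two schemas, going by the wording of the original error ("properties: should be non-empty for OBJECT type"): the new "filter" property is itself declared as an object but has no properties of its own, so it may trip the same check one level down. The sketch below is only a local pre-check under that assumption, not how callin.io or Gemini validates schemas. The function name `empty_object_paths` is made up for illustration, and it only walks nested `properties` (array `items` are ignored).

```python
def empty_object_paths(schema, path="parameters"):
    """Return the path of every object-typed node whose `properties` is missing or empty."""
    problems = []
    if schema.get("type") == "object":
        props = schema.get("properties") or {}
        if not props:
            # This is the shape the error message complains about.
            problems.append(path)
        for name, child in props.items():
            problems.extend(empty_object_paths(child, f"{path}.properties.{name}"))
    return problems


# The schema reported as acceptable in the post above.
ok_schema = {
    "type": "object",
    "properties": {
        "id": {"type": "string", "description": "database id"},
    },
}

# The schema reported as failing: "filter" is an object with no properties.
failing_schema = {
    "type": "object",
    "properties": {
        "id": {"type": "string", "description": "database id"},
        "filter": {
            "type": "object",
            "description": "filter for querying Notion database pages, based on conditions",
        },
    },
}

print(empty_object_paths(ok_schema))       # []
print(empty_object_paths(failing_schema))  # ['parameters.properties.filter']
```

If that reading is right, giving "filter" at least one declared property, or passing it as a JSON string, might be worth trying until the team responds.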

I discovered that with Google AI, if your tool is missing a {{ $fromAI("id", "") }} parameter, it will encounter an error. Once you include it, the tool functions correctly.

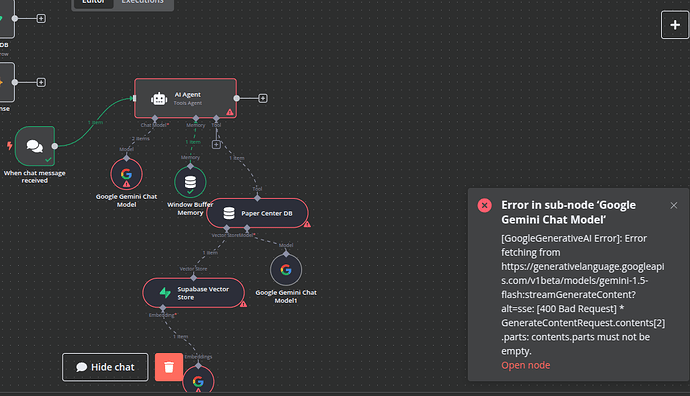
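
To illustrate why a `$fromAI` placeholder could make a difference, here is a simplified model of the idea, assuming each placeholder key ends up as one property in the function declaration sent to the model. This is a guess at the mechanism based on the error text, not callin.io's actual code. The helper names, the regex, and the "range" field are all hypothetical.

```python
import re

# Simplified, hypothetical pattern: captures the first argument of $fromAI("key", ...).
FROM_AI = re.compile(r'\$fromAI\(\s*"([^"]+)"')


def declared_properties(tool_parameters):
    """Collect one string-typed property per $fromAI key found in the tool's field values."""
    props = {}
    for value in tool_parameters.values():
        if isinstance(value, str):
            for key in FROM_AI.findall(value):
                props[key] = {"type": "string"}
    return props


def function_declaration(name, tool_parameters):
    """Build a function declaration shaped like the one named in the error message."""
    return {
        "name": name,
        "parameters": {
            "type": "object",
            "properties": declared_properties(tool_parameters),
        },
    }


# A tool whose fields are all fixed values: nothing for the model to fill in.
without_placeholder = function_declaration("google_sheets", {"range": "A1:C10"})

# The same tool with one field delegated to the model via $fromAI.
with_placeholder = function_declaration(
    "google_sheets", {"range": '={{ $fromAI("id", "") }}'}
)

print(without_placeholder["parameters"]["properties"])  # {}
print(with_placeholder["parameters"]["properties"])     # {'id': {'type': 'string'}}
```

In this model, the first declaration has an empty `properties` object, which is exactly what the 400 response rejects, while the second does not.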

I'm encountering the same error when using the AI Agent (Tools Agent) with the Google Gemini Chat Model, in conjunction with the Vector Store Tool (using a supabase db). This happens regardless of whether I use gemini-1.5-flash or 2.0-flash. The error consistently appears in the sub-node 'Google Gemini Chat Model'.

Where should this go? Do you have an example? Thanks!

Any updates on how to resolve this problem? Thank you.

Hello!

I'm encountering the same problem. Upon reviewing the logs, it appears the AI's response isn't being stored correctly in the memory variables.

I'm currently attempting to resolve this through prompt engineering. Have you discovered a solution yet?

I FOUND A SOLUTION!

Gemini models tend to follow the system prompt very strictly, so you need to reinforce the use of callin.io’s built-in format_final_response function to ensure it correctly stores the variables at the end.

Add this snippet to your chatInput and let me know the result → “REMEMBER TO ALWAYS USE format_final_response at the end of every response.”