Hello everyone,

I have some questions about how LangChain and callin.io manage deterministic JSON output for OpenAI and MistralAI.

As I understand it, OpenAI previously accepted a JSON schema as an input parameter, but is that no longer the case? It seems we now need to explicitly instruct the LLM to produce JSON output.

However, how can I ensure this output is deterministic?

How do LangChain and callin.io handle this?

Would using function calling be a better approach?

Thank you

It appears your topic is missing some crucial details. Could you please provide the following information, if relevant?

- callin.io version:

- Database (default: SQLite):

- callin.io EXECUTIONS_PROCESS setting (default: own, main):

- Running callin.io via (Docker, npm, callin.io cloud, desktop app):

- Operating system:

Please provide the requested information.

Hello,

If you enable the Require Specific Output Format option, the schema you specify in the Structured Output Parser sub-node is appended to the prompt. For example, with this schema:

```json
{
  "tags": ["string"],
  "isHomework": "boolean"
}
```

You can verify this by running the workflow and checking the logs tab.
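Appending the schema to the prompt only asks the model to follow it, so the reply still needs to be checked. A minimal Python sketch of both halves; the prompt wording and the `build_prompt` / `matches_example` helpers are hypothetical, not callin.io's or LangChain's actual implementation:

```python
import json

# Example from the reply above: a list of string tags plus a boolean flag.
EXAMPLE_SCHEMA = {"tags": ["string"], "isHomework": "boolean"}


def build_prompt(user_prompt: str, schema: dict) -> str:
    """Append the expected JSON shape to the prompt (wording is hypothetical)."""
    return (
        f"{user_prompt}\n\n"
        "Respond only with a JSON object shaped like this example:\n"
        f"{json.dumps(schema)}"
    )


def matches_example(data: object) -> bool:
    """Hand-written check of a parsed reply against EXAMPLE_SCHEMA.

    Rejects missing or extra keys and wrong value types.
    """
    if not isinstance(data, dict) or set(data) != {"tags", "isHomework"}:
        return False
    tags = data["tags"]
    return (
        isinstance(tags, list)
        and all(isinstance(tag, str) for tag in tags)
        and isinstance(data["isHomework"], bool)
    )


prompt = build_prompt("Tag this assignment description.", EXAMPLE_SCHEMA)
reply = '{"tags": ["math", "algebra"], "isHomework": true}'  # a made-up model reply
print(matches_example(json.loads(reply)))
```

Nothing in the prompt forces the model to comply; the check is what catches a reply that drifts from the requested shape.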

Hope this is helpful!

Hello,

OK, so this is not deterministic, then?

There is a small probability that the output does not match the requested format?


Yes, that can happen, particularly with GPT-3.5. I haven't encountered it with GPT-4.

There's also an auto-fix sub-node. I haven't used it yet, but if you need to ensure execution doesn't fail, it might be worth investigating:

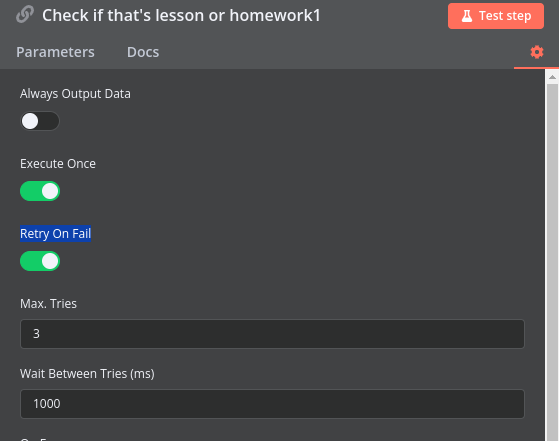
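Conceptually, an auto-fixing parser catches the parse error and asks a model to repair the broken output. A rough sketch under that assumption, where `fix_llm` is a hypothetical callable standing in for the second model call (LangChain's own output-fixing parser differs in detail):

```python
import json
from typing import Any, Callable


def parse_with_fix(raw: str, fix_llm: Callable[[str], str]) -> Any:
    """Parse `raw` as JSON; on failure, make one repair request via `fix_llm`.

    `fix_llm` is a hypothetical stand-in for a second model call.
    """
    try:
        return json.loads(raw)
    except json.JSONDecodeError as err:
        repair_prompt = (
            "The following output is not valid JSON.\n"
            f"Parser error: {err}\n"
            f"Output: {raw}\n"
            "Return only the corrected JSON."
        )
        # Single repair attempt: if the fixed text is also invalid,
        # json.loads raises again and the error reaches the caller.
        return json.loads(fix_llm(repair_prompt))
```

Because the repair is itself model output, it can fail too, which is why this lowers the error rate rather than removing it.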

Alternatively, you could implement a retry-on-failure mechanism.
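A retry-on-failure loop simply re-sends the original prompt until the reply parses and passes validation. A minimal sketch, assuming a hypothetical `llm` callable that returns the model's raw text; it illustrates the idea behind a node-level retry setting, not its actual code:

```python
import json
from typing import Any, Callable, Optional


def call_with_retries(
    llm: Callable[[str], str],
    prompt: str,
    is_valid: Callable[[Any], bool],
    max_tries: int = 3,
) -> Any:
    """Re-send the same prompt until the reply parses as JSON and validates.

    `llm` is a hypothetical callable returning the model's raw text.
    """
    last_error: Optional[Exception] = None
    for attempt in range(1, max_tries + 1):
        raw = llm(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            last_error = err  # not JSON at all: try again
            continue
        if is_valid(data):
            return data
        last_error = ValueError(f"attempt {attempt}: JSON failed validation")
    raise RuntimeError(f"no valid output after {max_tries} tries") from last_error
```

The `is_valid` check matters: a reply can be valid JSON and still have the wrong shape.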

Suppose the model produces JSON output with a 1% error rate:

- Using the auto-fixing output format setting reduces the probability of error but doesn't eliminate it: 0.01 * 0.01 = 0.0001.
- Opting for retry on fail with max tries set to 3 results in: 0.01^3 = 0.000001.
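The figures above assume each attempt fails independently with the same probability p, so k attempts all fail with probability p^k. Across N requests, the chance that at least one fails is 1 - (1 - q)^N for a per-request failure rate q: with N = 10,000 that is roughly 63% at q = 0.0001 and roughly 1% at q = 0.000001. A small sketch of the arithmetic (the function names are illustrative):

```python
def residual_failure(p: float, attempts: int) -> float:
    """Probability that all `attempts` independent tries fail."""
    return p ** attempts


def batch_failure(p_request: float, n_requests: int) -> float:
    """Probability that at least one of `n_requests` independent requests fails."""
    return 1 - (1 - p_request) ** n_requests


p = 0.01  # per-call error rate assumed in the thread
for label, attempts in [("auto-fix (2 attempts)", 2), ("retry, max tries 3", 3)]:
    q = residual_failure(p, attempts)
    print(f"{label}: per request {q:.0e}, "
          f"P(any failure in 10,000 requests) = {batch_failure(q, 10_000):.3f}")
```

If failures on the same input are correlated (the same prompt tends to trip the model the same way), the real residual rate is likely higher than these numbers.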

This behavior is not deterministic.

What are the implications of using function calling for deterministic JSON output?

The workflow I'm designing will handle up to 10,000 requests, and I cannot afford any failures. Increasing the max tries parameter could become prohibitively expensive given the volume of requests.
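Because a retry only runs after a failed attempt, raising max tries mostly changes the worst case rather than the typical cost. A small sketch of that arithmetic under the thread's 1% assumption (independent failures, equal cost per call, extra tokens in retry prompts ignored): with max tries set to 3, 10,000 requests need about 10,101 calls in expectation, against 30,000 in the worst case.

```python
def expected_calls(p: float, max_tries: int) -> float:
    """Expected model calls per request when a retry only runs after a failure.

    Try k + 1 happens only if the first k tries all failed (probability p**k,
    assuming independent failures), so the expectation is sum(p**k).
    """
    return sum(p ** k for k in range(max_tries))


p = 0.01          # per-call error rate assumed in the thread
requests = 10_000
for tries in (1, 3, 5):
    expected = requests * expected_calls(p, tries)
    worst = requests * tries  # every request exhausting all tries
    print(f"max tries {tries}: ~{expected:.0f} calls expected, {worst} at worst")
```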

Perhaps someone else can offer more assistance. For GPT-3.5, I've observed that it occasionally produces incorrect JSON. While a well-crafted prompt significantly impacts results, AI is inherently non-deterministic. Therefore, I believe guaranteeing 100% correct JSON output from AI is challenging. You might consider using the auto-fix sub-node, which could potentially resolve this, though I haven't personally tested it.

You mentioned function calling. Are you familiar with the AI Agent node? This node can manage tools and autonomously decide which ones to utilize. I haven't extensively used it myself, but it might offer a solution for your needs.
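With function calling, the model replies with a tool name and a JSON string of arguments, and the surrounding runtime parses them and calls the tool. A hypothetical sketch of that dispatch step (not the AI Agent node's actual code) that records the raw arguments before invoking anything, which is the point where the intermediate JSON can be captured:

```python
import json
from typing import Any, Callable, Dict, List


def dispatch_tool_call(
    name: str,
    raw_arguments: str,
    tools: Dict[str, Callable[..., Any]],
    log: List[dict],
) -> Any:
    """Run the tool the model picked, recording its raw JSON arguments first."""
    # Keep the model's output exactly as generated, before any parsing.
    log.append({"tool": name, "raw_arguments": raw_arguments})
    if name not in tools:
        raise KeyError(f"model requested an unknown tool: {name}")
    args = json.loads(raw_arguments)  # raises if the model emitted invalid JSON
    return tools[name](**args)


tools = {"add": lambda a, b: a + b}  # toy tool for illustration
log: List[dict] = []
print(dispatch_tool_call("add", '{"a": 2, "b": 3}', tools, log))
print(log)
```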


Yes, I've used it previously. However, the way LangChain is integrated into callin.io prevents me from accessing the JSON output between the LLM and the actual tool invocation within callin.io. I need access to the raw output, such as:

```python
{
    'aspects_and_sentiments': [
        {'aspect': 'food', 'sentiment': 'positive'},
        {'aspect': 'ambiance', 'sentiment': 'negative'},
        {'aspect': 'waiter', 'sentiment': 'positive'},
        {'aspect': 'pizza', 'sentiment': 'positive'},
        {'aspect': 'burger', 'sentiment': 'positive'},
        {'aspect': 'coke', 'sentiment': 'negative'},
        {'aspect': 'drinks', 'sentiment': 'negative'}
    ]
}
```
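For function calling, the schema is supplied as a tool definition rather than as prompt text. A sketch of one that describes the output above; the function name `record_aspects`, the descriptions, and restricting sentiment to positive/negative are assumptions. With the OpenAI Python SDK (v1), a definition like this goes in the `tools` parameter of `client.chat.completions.create` (optionally forced via `tool_choice`), and the model's output arrives as a JSON string in `message.tool_calls[0].function.arguments`. Those arguments are still model-generated, so the sketch validates them instead of trusting them:

```python
import json

# Tool definition in the shape the OpenAI Chat Completions `tools` parameter uses.
# The name, descriptions, and sentiment enum are illustrative assumptions.
ASPECT_TOOL = {
    "type": "function",
    "function": {
        "name": "record_aspects",
        "description": "Record each aspect mentioned in a review and its sentiment.",
        "parameters": {
            "type": "object",
            "properties": {
                "aspects_and_sentiments": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "aspect": {"type": "string"},
                            "sentiment": {"type": "string", "enum": ["positive", "negative"]},
                        },
                        "required": ["aspect", "sentiment"],
                    },
                }
            },
            "required": ["aspects_and_sentiments"],
        },
    },
}


def parse_aspects(arguments: str) -> list:
    """Parse the model's function-call arguments (a JSON string) and check them."""
    data = json.loads(arguments)
    items = data.get("aspects_and_sentiments") if isinstance(data, dict) else None
    if not isinstance(items, list):
        raise ValueError("missing 'aspects_and_sentiments' list")
    allowed = ("positive", "negative")
    for item in items:
        if (
            not isinstance(item, dict)
            or not isinstance(item.get("aspect"), str)
            or item.get("sentiment") not in allowed
        ):
            raise ValueError(f"unexpected item: {item!r}")
    return items
```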

For more details, you can refer to the full article: https://sauravmodak.medium.com/openai-functions-a-guide-to-getting-structured-and-deterministic-output-from-chatgpt-building-3a0ef802a616
