The concept is:

The concept is to implement OpenAI's Structured Outputs (https://platform.openai.com/docs/guides/structured-outputs) in the AI nodes when using OpenAI models. In my experience, this is the only truly reliable way to get JSON responses that are always valid.

My use case:

When using models like gpt-4o-mini, at least 1 in 100 requests with JSON output comes back invalid. This means either using an autofix output formatter, which consumes extra tokens and is quite slow, or implementing some form of cumbersome error handling. None of this would be necessary if Structured Outputs were implemented. I've been using it in Python for months and have never encountered an invalid output in millions of requests.
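To illustrate the kind of error handling this refers to, here is a minimal sketch of a parse-and-retry loop. The `call_model` callable and the canned replies are hypothetical stand-ins for a real model call, not part of any callin.io or OpenAI API.

```python
import json
from typing import Callable


def parse_json_with_retry(call_model: Callable[[], str], max_attempts: int = 3) -> dict:
    """Call the model until its reply parses as a JSON object, or give up."""
    last_error = None
    for _ in range(max_attempts):
        raw = call_model()  # every retry is another slow, billed round trip
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = exc
            continue
        if isinstance(parsed, dict):
            return parsed
        last_error = ValueError("reply was valid JSON but not an object")
    raise RuntimeError(f"no valid JSON after {max_attempts} attempts") from last_error


# Hypothetical stand-in for a model whose first reply is truncated JSON.
_replies = iter(['{"sentiment": "positive"', '{"sentiment": "positive"}'])
result = parse_json_with_retry(lambda: next(_replies))
print(result)
```

Even this small loop only checks that the reply is a JSON object. It does not check that the expected keys are present, which is the part a schema-constrained output would cover.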

I believe adding this would be beneficial because:

It would represent a significant advancement for callin.io in offering a platform for enterprise-grade AI solutions.

Any resources to support this?

Are you willing to contribute to this?

Certainly, though you likely won't need my assistance as it's quite straightforward to implement.

This is a feature request for callin.io. I would love it if the callin.io team integrated this into their platform.

When using GPT assistants, you have the choice between JSON or JSON schema for the output type. This feature enables you to ensure the model produces a pure JSON output, which is then validated on their end. This functionality can be leveraged with an API call to achieve your desired outcome.

Great suggestion! However, by making a direct API call, I can also specify the JSON output format within the request body to achieve the same outcome. I've been employing this technique when I only require a single, isolated LLM call.

My request would be to integrate this functionality into the AI nodes. This would make it significantly easier to use and also enable features like agents, which would be quite challenging to implement using pure API calls in callin.io.

Can you provide an example of how to accomplish this using callin.io? Thank you!

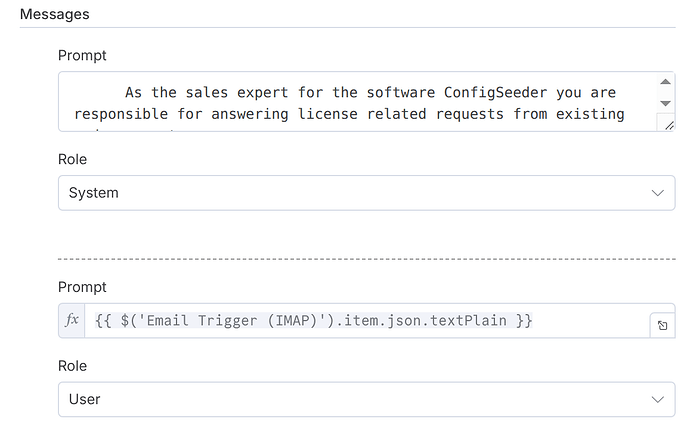

Sure, here is a workflow that utilizes this method in the HTTP Request node:
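As a rough sketch of the kind of JSON body such an HTTP Request node could send to the Chat Completions endpoint, written in Python for readability. The schema, the system prompt, and the `build_chat_request` helper are hypothetical and only illustrate the shape of the `response_format` field.

```python
import json

# Hypothetical schema for illustration. Strict mode expects every property to be
# listed in "required" and "additionalProperties" to be false.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string", "enum": ["bug", "feature", "question"]},
        "summary": {"type": "string"},
    },
    "required": ["category", "summary"],
    "additionalProperties": False,
}


def build_chat_request(user_text: str) -> dict:
    """Build a Chat Completions body that asks for schema-constrained JSON."""
    return {
        "model": "gpt-4o-mini",
        "messages": [
            {"role": "system", "content": "Classify the support ticket."},
            {"role": "user", "content": user_text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "ticket", "strict": True, "schema": TICKET_SCHEMA},
        },
    }


body = build_chat_request("The export button crashes the app.")
print(json.dumps(body, indent=2))  # JSON that could go into the node's body field
```

The body would be sent as a POST to `https://api.openai.com/v1/chat/completions` with a bearer token in the `Authorization` header. The model's JSON arrives as a string in `choices[0].message.content` and still needs to be parsed in a following step.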

A must-have! Long prompts tend to produce inconsistent results and are difficult to parse.

just found this! https://www.npmjs.com/package/n8n-nodes-openai-structured-outputs

Great tip with the n8n-nodes-openai-structured-outputs package.

However, this module calls the Completions API, and I would really like to use the new Responses API.

Is it already planned to extend the existing callin.io integrations to work with structured output? Should probably not be a big change, right?
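For a sense of how small the difference is on the request side, here is a hedged sketch that translates a structured-output request from one shape to the other. It assumes "completions API" above means Chat Completions, and that the Responses API takes the schema under `text.format` and the messages under `input`, as the OpenAI documentation describes. The helper name and the example schema are hypothetical, and only `model`, `messages`, and `response_format` are handled; other parameters may not map one-to-one.

```python
def chat_body_to_responses_body(chat_body: dict) -> dict:
    """Translate a Chat Completions body that uses a json_schema response_format
    into a Responses API body (model, messages and schema only)."""
    fmt = chat_body["response_format"]
    if fmt.get("type") != "json_schema":
        raise ValueError("expected a json_schema response_format")
    return {
        "model": chat_body["model"],
        # The message list moves from "messages" to "input".
        "input": chat_body["messages"],
        # name / strict / schema are flattened into text.format.
        "text": {"format": {"type": "json_schema", **fmt["json_schema"]}},
    }


chat_body = {
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "Extract the city: I live in Oslo."}],
    "response_format": {
        "type": "json_schema",
        "json_schema": {
            "name": "location",
            "strict": True,
            "schema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
                "additionalProperties": False,
            },
        },
    },
}

responses_body = chat_body_to_responses_body(chat_body)
print(responses_body["text"]["format"]["name"])
```

The translated body would go to `https://api.openai.com/v1/responses` instead of `/v1/chat/completions`. The response layout differs as well, so any downstream parsing step would need its own adjustment.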

Actually, this module internally utilizes the native OpenAI structured output API. It has functioned flawlessly for me and resolves all issues!

I agree that n8n-nodes-openai-structured-outputs addresses most of the issues.

However, as I mentioned previously, it uses the Completions API, and I'd prefer to use the Responses API. (The API it uses is also documented in the project's GitHub repository, crucerlabs/n8n-nodes-openai-structured-outputs.)

This in itself isn't a major problem, just a preference. The nodes provided by callin.io itself are preferable for me for the following reasons:

- They support sending a list of messages; n8n-nodes-openai-structured-outputs only supports a single message, so you have to manually construct a JSON containing multiple messages. While this isn't a blocker, the usability of the node provided by callin.io is better in my opinion.

- I personally appreciate that callin.io can be extended with third-party nodes; I think it's a great feature. However, for the core functionality of my workflows, I prefer to use nodes provided by callin.io directly wherever possible and reserve third-party nodes for more specialized tasks.

vs