Hi All

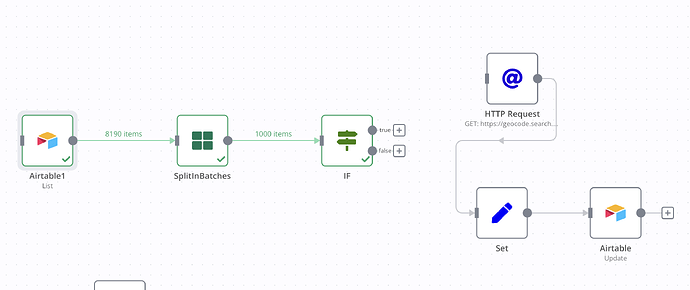

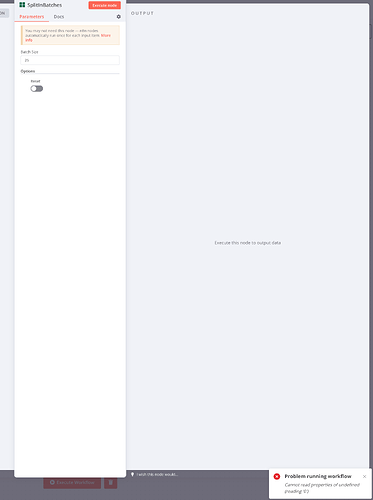

I would appreciate some guidance on the 'experimental' process I've set up, as shown in the image.

My objective is as follows:

1. Retrieve a table of unstructured addresses from an Airtable base. THIS STEP IS SUCCESSFUL, retrieving just under 9000 records. This corresponds to the first node in the image (Airtable1).

2. I need to send these addresses to a geocoder (HERE.com) to get them back in a structured format. I've confirmed this works for a single address, but I want to avoid overwhelming their API with all 9000 records simultaneously. I was considering using a batch node for this, but I'm unsure how to implement it correctly. My goal is to batch them into groups of 500 addresses for geocoding. This is the section marked 'experimental' in the process, involving nodes 2 and 3 (SplitInBatches and IF) in the image.

3. Following the batching, I send the data to the geocoder (node 4, HTTP Request). THIS STEP IS SUCCESSFUL AND CORRECTLY RETURNS MY ADDRESSES IN THE NEW STRUCTURED FORMAT.

4. Next, I set the fields using node 5 (Set). THIS STEP IS ALSO SUCCESSFUL.

5. Finally, I update Airtable with the new structured address data, linked to the original Airtable IDs. THIS IS SUCCESSFUL.
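What a "split in batches" step does for the batching described above can be sketched outside callin.io as plain list slicing. This is a minimal Python sketch, not callin.io's implementation; the function name `split_in_batches` and the 8950 sample records are hypothetical.

```python
from typing import Iterator, List, TypeVar

T = TypeVar("T")


def split_in_batches(items: List[T], batch_size: int = 500) -> Iterator[List[T]]:
    """Yield consecutive slices of `items`, each holding at most `batch_size` elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


if __name__ == "__main__":
    # Hypothetical stand-in for the ~9000 records returned by the Airtable node.
    records = [{"id": f"rec{i}", "Address": f"{i} Example Street"} for i in range(8950)]
    batches = list(split_in_batches(records, 500))
    print(len(batches), len(batches[-1]))  # 18 450
```

With just under 9000 records and a batch size of 500 this works out to 18 batches, the last one only partly filled; each batch would be one pass through the geocoding loop.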

My primary challenge lies in point 2: effectively batching the Airtable list. I need to ensure that the process (1) iterates through all 9000 addresses until completion and (2) only processes addresses/Airtable IDs that haven't been processed yet.

It would be helpful to understand (1) what happens if an API failure occurs, perhaps due to exceeding HERE.com's API rate limits, and (2) how to handle subsequent runs. Will I need to restart the entire process? How can I ensure that only the unsuccessful records are reprocessed?
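One way to cover both requirements (run until every record is done, skip records already done) is to make the workflow idempotent: before batching, keep only records that have an address but no structured result yet, so a re-run after a failure simply picks up whatever is still pending. A minimal Python sketch, assuming Airtable-style records of the form `{"id": ..., "fields": {...}}` and hypothetical column names `Address`, `Country` and `Postcode`:

```python
from typing import Any, Dict, List

Record = Dict[str, Any]

# Hypothetical column names; adjust to match your own Airtable base.
ADDRESS_FIELD = "Address"
RESULT_FIELDS = ("Country", "Postcode")


def needs_geocoding(record: Record) -> bool:
    """True if the record has a non-blank address but no structured result yet."""
    fields = record.get("fields", {})
    address = fields.get(ADDRESS_FIELD) or ""
    has_address = bool(str(address).strip())
    already_done = all(fields.get(name) for name in RESULT_FIELDS)
    return has_address and not already_done


def pending(records: List[Record]) -> List[Record]:
    """Keep only the records that still need to go through the geocoder."""
    return [r for r in records if needs_geocoding(r)]


if __name__ == "__main__":
    sample = [
        {"id": "rec1", "fields": {"Address": "10 Downing St, London"}},
        {"id": "rec2", "fields": {"Address": "1 Main St", "Country": "USA", "Postcode": "12345"}},
        {"id": "rec3", "fields": {}},
    ]
    print([r["id"] for r in pending(sample)])  # ['rec1']
```

In the workflow, a check like this could sit right after the Airtable node and before batching; which columns count as "done" is an assumption to adapt.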

Any assistance or insights would be greatly appreciated.

PS I'm not sure how to share the actual callin.io workflow without revealing confidential information. If there's a more effective way to share it for assistance (compared to just the image), please let me know.


Many thanks

Stephen

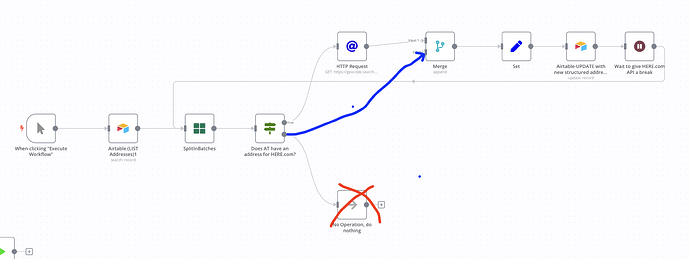

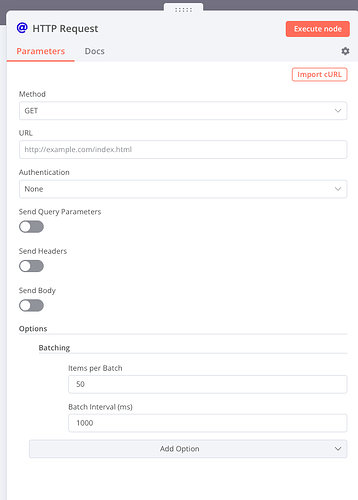

Hi, it seems you’re on a good path already. The only thing you’d need to add in order to loop through the rest of the items (and not just the first batch) would be to close your loop.

So something like this:

This of course uses dummy data and an IF condition that won’t work for your specific data structure, but the basic idea of this flow should still work for you.

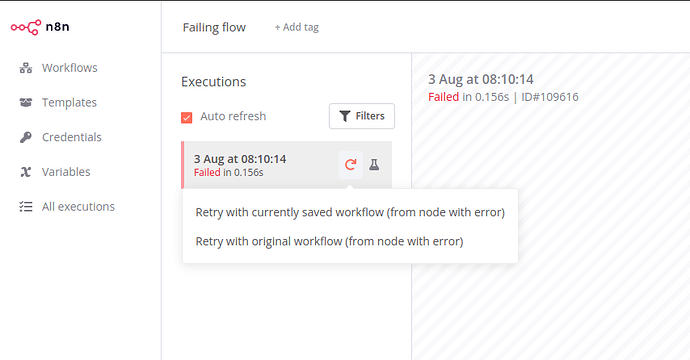

To avoid hitting rate limits, you can reduce the batch size and increase the Wait time as needed. As for retrying, callin.io allows you to restart a failed execution from the failed node onwards through the execution list, so you wouldn't have to re-run everything.
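The same loop (batch, geocode, wait, repeat) can be sketched in Python to show how failures could be isolated: instead of aborting, each failed call is recorded so that only those record IDs need a second pass. This is a minimal sketch under assumptions: `geocode` is a hypothetical caller-supplied function standing in for the HTTP Request node, and any exception it raises (for example on a rate-limit response) is treated as a failure for that record only.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]


def geocode_all(
    records: List[Record],
    geocode: Callable[[str], Dict[str, Any]],
    batch_size: int = 500,
    wait_seconds: float = 60.0,
) -> Tuple[Dict[str, Dict[str, Any]], List[str]]:
    """Geocode records batch by batch, pausing between batches.

    Returns (results keyed by record id, ids of records whose call failed).
    """
    results: Dict[str, Dict[str, Any]] = {}
    failed: List[str] = []
    for start in range(0, len(records), batch_size):
        for record in records[start:start + batch_size]:
            try:
                results[record["id"]] = geocode(record["fields"]["Address"])
            except Exception:
                # Rate limit, network error, etc.: remember the id and carry on.
                failed.append(record["id"])
        if start + batch_size < len(records):
            time.sleep(wait_seconds)  # throttle before the next batch
    return results, failed


if __name__ == "__main__":
    def fake_geocode(address: str) -> Dict[str, Any]:
        # Hypothetical stand-in for the HTTP call; fails for one address.
        if "bad" in address:
            raise RuntimeError("simulated rate-limit error")
        return {"label": address.upper()}

    recs = [{"id": f"rec{i}", "fields": {"Address": a}} for i, a in enumerate(["a st", "bad st", "c st"])]
    ok, failed = geocode_all(recs, fake_geocode, batch_size=2, wait_seconds=0)
    print(sorted(ok), failed)  # ['rec0', 'rec2'] ['rec1']
```

Feeding `failed` back in as the next run's input is the code equivalent of reprocessing only the unsuccessful records; the execution-list retry does the same thing at the workflow level.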

Hope this helps


Many thanks. I’ve copied your flow and am going to take a look to see what I can learn.

Hi there!

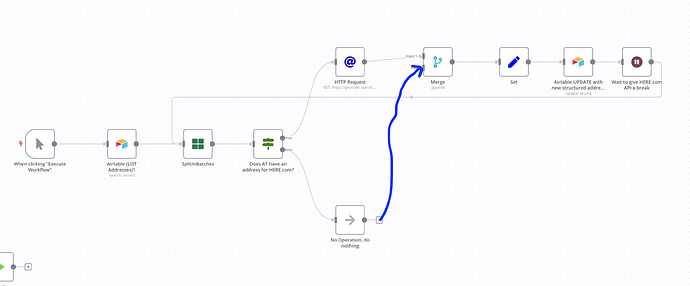

I've adapted your Workflow for my own needs, as shown in the attached image.

It appears to be functioning, but I'm curious about the exact purpose of the Merge node. Is it necessary in my current setup, or could I proceed directly to the Set Data node?

To clarify, my current Workflow proceeds as follows:

1. It retrieves all Airtable addresses (from the Address field), totaling just under 9000 records.

2. These records are then sent to a batch process to be divided into chunks of 500.

3. A check is performed to see whether the Address field is populated for each of these records (in retrospect, I might have placed this step earlier, after the Airtable list and before the batch process).

   3A. If no address is found (FALSE), the process proceeds to 'DO NOTHING'.

   3B. If an address is found (TRUE), it is sent to the HTTP Request for the HERE.com geocoder (within the batch of 500).

4. The data then moves to the MERGE node (again, I'm uncertain whether this is required).

5. Next, a Set node assigns all the fields from the returned HERE.com location data, such as Country, State, Postcode, and so on.

6. Finally, it updates the corresponding Airtable fields with this new data.

7. A 1-minute wait occurs before the process loops back to the BATCH process at step 2.
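The Set step described above is essentially a flattening of the geocoder response into Airtable columns, keyed by the original record ID. A minimal Python sketch, assuming the response shape of HERE's Geocoding & Search API v7 (an `items` list whose entries carry an `address` object and a `position` object with `lat`/`lng`); please check the keys against your own HTTP Request output. The Airtable column names in `FIELD_MAP` and the `Latitude`/`Longitude` columns are hypothetical.

```python
from typing import Any, Dict, Optional

# Hypothetical mapping: Airtable column -> key in the geocoder's "address" object.
FIELD_MAP = {
    "Country": "countryName",
    "State": "state",
    "City": "city",
    "Postcode": "postalCode",
    "Street": "street",
    "House Number": "houseNumber",
}


def to_airtable_update(record_id: str, response: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Flatten the first geocoding hit into an Airtable-style {"id", "fields"} update.

    Returns None when the geocoder found nothing, so the record stays pending.
    """
    items = response.get("items") or []
    if not items:
        return None
    best = items[0]
    address = best.get("address", {})
    fields = {column: address[key] for column, key in FIELD_MAP.items() if key in address}
    position = best.get("position")
    if position:
        fields["Latitude"] = position.get("lat")
        fields["Longitude"] = position.get("lng")
    return {"id": record_id, "fields": fields}


if __name__ == "__main__":
    sample = {"items": [{"address": {"countryName": "United Kingdom", "city": "London",
                                     "postalCode": "SW1A 2AA"},
                         "position": {"lat": 51.5034, "lng": -0.1276}}]}
    print(to_airtable_update("rec1", sample))
```

Returning `None` for an empty result leaves that record untouched in Airtable, so it would still count as unprocessed on the next run.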

Thanks,

Stephen


Hi, I suspect this workflow might not operate correctly if all elements enter the false branch – the workflow would simply halt in that instance. Linking the false branch to the Merge node should guarantee that the looping persists even under these conditions.
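The reasoning about the false branch can be illustrated with a deliberately simplified model of the loop. It assumes (a simplification, not callin.io's actual execution engine) that nodes after the Merge only run when they receive at least one item, and that the loop back to the batching step happens only if the final Wait node runs. The function name `run_loop` is hypothetical.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def run_loop(batches: List[List[Record]], false_branch_to_merge: bool) -> int:
    """Simplified model of the batch loop; returns how many batches were reached."""
    reached = 0
    for batch in batches:
        reached += 1
        # IF node: split the batch on whether the address is populated.
        true_items = [r for r in batch if r.get("Address")]
        false_items = [r for r in batch if not r.get("Address")]
        # Merge node: geocoded (TRUE) items always arrive here; FALSE items
        # only do if that branch is wired into the Merge.
        merged = list(true_items)
        if false_branch_to_merge:
            merged += false_items
        if not merged:
            # Nothing reaches Set, the Airtable update or Wait, so no loop-back.
            break
    return reached


if __name__ == "__main__":
    batches = [[{"Address": "1 Main St"}], [{"Address": ""}], [{"Address": "2 High St"}]]
    print(run_loop(batches, false_branch_to_merge=False))  # 2
    print(run_loop(batches, false_branch_to_merge=True))   # 3
```

With the FALSE branch unconnected, an all-empty second batch ends the run and the third batch is never reached; with it wired into the Merge, all three batches are processed.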

Yep, that’s what I had in mind. You can also connect the NoOp node to the Merge node instead in case you want to keep it for workflow readability/documentation.

Yes, that's how I believe it should function. However, without knowing your specific data and callin.io version, it's difficult to confirm with absolute certainty. For reference, I tested my example using callin.io@1.1.1.


Thanks.

PS I've also started encountering an error recently, even though everything was functioning correctly before.

The error has suddenly appeared during the Batch process and is now preventing it from running (despite working previously). The error message is 'Problem running workflow' followed by 'Cannot read properties of undefined (reading ‘0’)'. Please see the image below. I've even tried restarting the callin.io instance to resolve this, but without success.

Hi there. I wasn't even aware this was possible, but thank you for pointing it out. I'll give it a try.
