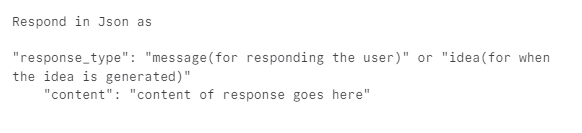

Describe the problem/error/question

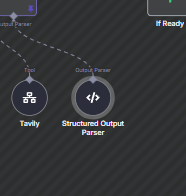

My agent has an output parser that classifies the response as either “idea” or “message”.

Responses parsed as “idea” go through the workflow, while those parsed as “message” return [empty object].

It is worth mentioning that *sometimes* the message is output normally, about 1 in 6 times.

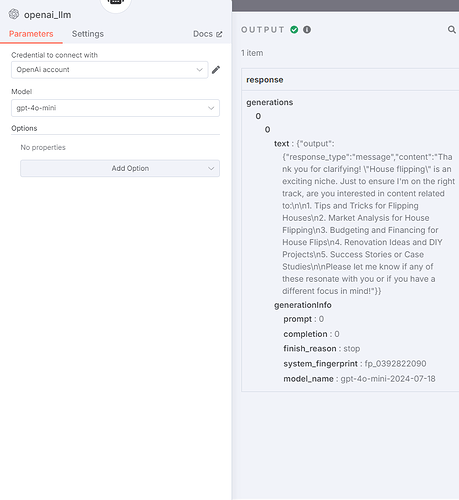

What I don’t understand is why this happens, since the chat model does generate a completion that is parsed correctly:

What is the error message (if any)?

Please share your workflow

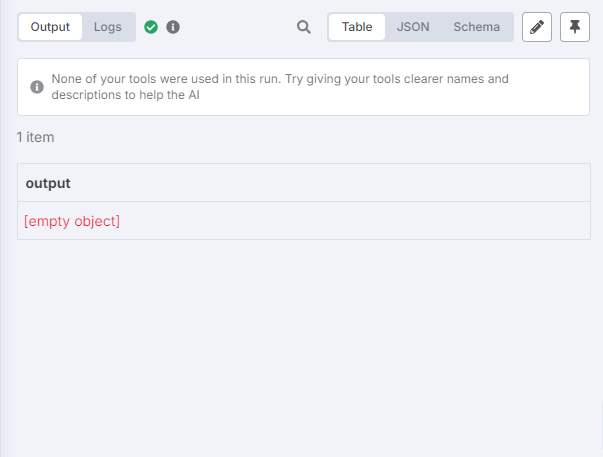

Share the output returned by the last node

[empty object]

[ERROR: Cannot read properties of undefined (reading ‘replace’)]

Information on your callin.io setup

- callin.io version: 1.89.2

- Database (default: SQLite): Default

- callin.io EXECUTIONS_PROCESS setting (default: own, main): I am not sure where to get that info.

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): callin.io cloud

- Operating system: Windows 11


Hello Luar_AS

I'll address your issue below, but this section of the documentation might also be very helpful in clarifying your question. Here's the link: Code node common issues | callin.io Docs

Missing an Output Parser

The "Require Specific Output Format" field is active, but you haven't connected an Output Parser, as shown by the orange alert:

Connect an output parser on the canvas to specify the output format you require.

Without an Output Parser, the node expects a response from the agent in a specific format, but there's no configuration in place to interpret or validate that format.

Test by Disabling “Require Specific Output Format”

Try disabling the “Require Specific Output Format” parameter.

This setting enforces a specific format for the agent's generated response. If the agent doesn't reply in that format, the node won't display any output.

Test Steps:

- Disable the option.

- Re-run the node.

- Examine the raw output in the JSON tab.

If it functions correctly with the option disabled, the issue likely lies with the Output Parser or the configuration of your instructions.

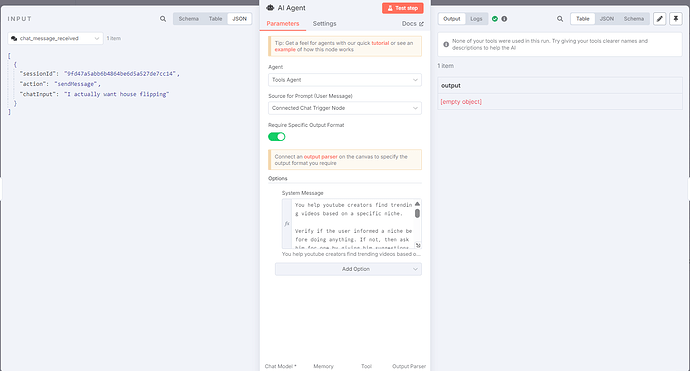

The Prompt is Not Triggering the Agent Correctly

The agent is instructed (via the System Message) to expect a "niche" from the user. However:

- The input provided in the chatInput variable ("I actually want house flipping") might not be recognized as a valid niche.

- The agent could be inadequately configured to handle the absence or ambiguity of the received input, particularly if no additional context was supplied.

I hope this information is helpful.

Hi there, would you mind marking my last post as the solution (the blue box with a check mark)? This way, it won't distract others trying to find the answer to the initial question. Thanks!

However, that wasn't a solution, and marking it as one would have marked my post as resolved.

My apologies; I thought your issue had been resolved.

Were you able to find a solution, or do you need further assistance?

I haven't resolved the initial issue.

However, I did get the workflow working by writing a custom JSON parser in a Code node, bypassing the default callin.io parser.

This means the agent now outputs a string, which the next node then parses.

While I couldn't pinpoint the cause of the [empty object] error or fix it directly, I did discover a workaround.
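The original parser code wasn't shared, so the following is only a minimal sketch of the general idea, written in Python, and not the poster's implementation. It assumes the agent is asked to reply with a JSON object carrying a `response_type` of either `"idea"` or `"message"`, sometimes wrapped in a Markdown code fence or surrounded by extra text. The function name `parse_agent_output` and the fallback field `content` are hypothetical.

```python
import json
import re
from typing import Optional

# Three backticks, built this way so the snippet can itself live inside a Markdown code block.
FENCE = "`" * 3
# Captures the body of a Markdown code fence (optionally tagged "json"), if present.
FENCE_RE = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def parse_agent_output(raw: Optional[str]) -> dict:
    """Leniently turn an agent reply into a dict (hypothetical helper).

    1. If the reply is wrapped in a code fence, keep only the fenced body.
    2. Try to decode the span from the first "{" to the last "}".
    3. Otherwise wrap the raw text as a plain "message" so that downstream
       nodes never receive an empty object.
    """
    original = (raw or "").strip()
    text = original

    fenced = FENCE_RE.search(text)
    if fenced:
        text = fenced.group(1).strip()

    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        try:
            parsed = json.loads(text[start : end + 1])
        except json.JSONDecodeError:
            parsed = None  # not valid JSON; fall through to the fallback
        if isinstance(parsed, dict):
            return parsed

    # Assumed fallback shape; "content" is a hypothetical field name.
    return {"response_type": "message", "content": original}


if __name__ == "__main__":
    reply = FENCE + 'json\n{"response_type": "idea", "content": "Flip distressed houses"}\n' + FENCE
    print(parse_agent_output(reply))
    # {'response_type': 'idea', 'content': 'Flip distressed houses'}
    print(parse_agent_output("Sure! Which niche are you interested in?"))
    # {'response_type': 'message', 'content': 'Sure! Which niche are you interested in?'}
```

Falling back to a `"message"` item instead of an empty object is a design choice: it keeps the conversation going when the model answers in plain text, at the cost of hiding genuine formatting failures, so you may prefer to send those to an error branch instead.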

I've made some adjustments to your workflow.

Kindly check if these resolve your issue. Don't forget to verify all credentials.

Observations on the current workflow:

- The

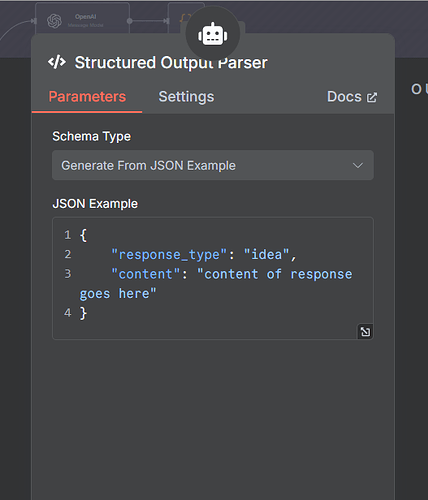

systemMessagedescribes the JSON format using natural language, which can lead to LLM confusion and inconsistent outputs. - The Structured Output Parser includes an example for the

ideatype but lacks an explicit definition for themessagetype. - The process for handling

ideatype responses requires an additional call to OpenAI and a Code node, increasing complexity and potential failure points. - If the "AI Agent" fails and returns an empty object (

{}), the "If Ready" node will likely encounter an error because it won't findoutput.response_type. - The "Sanitize-inator3" node appears to be an attempt to correct formatting issues that should ideally be addressed at the source within the "AI Agent".
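The empty-object case in the observations above can be guarded against explicitly. Below is a hedged Python sketch of the check an IF node, or a Code node placed in front of it, could perform. It assumes each item has the shape `{"output": {"response_type": ...}}`, inferred from the `output.response_type` path mentioned above; `route_agent_result` and the `"invalid"` branch label are hypothetical. For context, in JavaScript calling a method on a missing value (for example `undefined.replace(...)`) throws the same `Cannot read properties of undefined (reading 'replace')` error quoted in the original post, so an unchecked empty object is one plausible, unconfirmed source of that error.

```python
from typing import Any

VALID_TYPES = {"idea", "message"}


def route_agent_result(item: Any) -> str:
    """Return the branch an item should take: "idea", "message", or "invalid".

    Never assumes item["output"]["response_type"] exists, because an empty
    object ({}) has neither key; each level is checked before it is read.
    """
    if not isinstance(item, dict):
        return "invalid"
    output = item.get("output")
    if not isinstance(output, dict):
        return "invalid"
    response_type = output.get("response_type")
    if not isinstance(response_type, str):
        return "invalid"
    # Tolerate sloppy casing/whitespace from the model, e.g. "Idea ".
    response_type = response_type.strip().lower()
    return response_type if response_type in VALID_TYPES else "invalid"


if __name__ == "__main__":
    samples = [
        {},                                      # agent failed: empty object
        {"output": {}},                          # output present but empty
        {"output": {"response_type": "Idea "}},  # sloppy casing/whitespace
        {"output": {"response_type": "message"}},
    ]
    for item in samples:
        print(route_agent_result(item))
    # invalid, invalid, idea, message (one per line)
```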

Recommendations for the workflow:

- Provide explicit and strict instructions to the LLM regarding the expected JSON format, including clear examples.

- Utilize a comprehensive JSON Schema within the Structured Output Parser that defines both the `message` and `idea` types.

- Eliminate the secondary OpenAI call and the Code node. The final formatting for ideas (if needed beyond the content itself) should be handled by the main "AI Agent" or by `ideas_generator`, if feasible. For simplicity, this version assumes the content of the idea is sufficient for now.

- Insert an IF node immediately after the "AI Agent" to validate the output before proceeding.

- Remove the "Sanitize-inator3" node.

- Ensure the LLM (`openai_llm`) uses a low temperature setting for improved consistency.
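To make the schema and IF-node recommendations concrete, here is a hedged Python sketch. `AGENT_OUTPUT_SCHEMA` is a hypothetical JSON Schema covering both reply types in one object (the `content` field and the flat `enum` layout are assumptions, not the schema from the revised workflow); written out as JSON, something like it could go into a Structured Output Parser configured to accept a JSON Schema. `schema_errors` is a deliberately tiny checker that understands only the keywords used here, roughly the test an IF node placed after the "AI Agent" would apply before letting an item continue.

```python
from typing import Any, List

# Hypothetical schema: both reply types share one flat shape, told apart by an enum.
AGENT_OUTPUT_SCHEMA = {
    "type": "object",
    "properties": {
        "response_type": {"type": "string", "enum": ["idea", "message"]},
        "content": {"type": "string"},
    },
    "required": ["response_type", "content"],
    "additionalProperties": False,
}


def schema_errors(value: Any, schema: dict = AGENT_OUTPUT_SCHEMA) -> List[str]:
    """Return a list of problems; an empty list means the value conforms.

    Supports only the keywords used above (object/string types, enum,
    required, additionalProperties=False), not the whole JSON Schema spec.
    """
    if not isinstance(value, dict):
        return ["top-level value must be an object"]
    errors = []
    props = schema["properties"]
    for key in schema["required"]:
        if key not in value:
            errors.append(f"missing required field '{key}'")
    if schema.get("additionalProperties") is False:
        for key in value:
            if key not in props:
                errors.append(f"unexpected field '{key}'")
    for key, rules in props.items():
        if key not in value:
            continue
        field = value[key]
        if rules.get("type") == "string" and not isinstance(field, str):
            errors.append(f"field '{key}' must be a string")
        elif "enum" in rules and field not in rules["enum"]:
            errors.append(f"field '{key}' must be one of {rules['enum']}")
    return errors


if __name__ == "__main__":
    print(schema_errors({"response_type": "idea", "content": "Flip houses in Austin"}))
    # []
    print(schema_errors({}))
    # ["missing required field 'response_type'", "missing required field 'content'"]
```

A single `enum` on `response_type` keeps both reply types in one flat object, which is easier for the model to follow than two formats described in prose. If the two types really need different fields, a `oneOf` with one branch per type is the usual JSON Schema alternative.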

Please test this revised workflow and let me know if it resolves your problem.

Thanks, it looks solid! I’ll check it and break it down in the meantime.

Really appreciate it!

I'm happy I could assist.
