Hello everyone,

I'm having a problem with the AI Agent node in callin.io. It works with Groq, but not with the OpenRouter, OpenAI, or Google providers. Interestingly, the Basic LLM Chain node works without issues with all of these providers (OpenRouter, OpenAI, Google, and Groq).

I'm running callin.io on an Ubuntu server within a Docker container, accessible via my own domain. My suspicion is that this might be related to function calling support in the models, but I'm uncertain about the resolution. Has anyone else experienced this or have suggestions for troubleshooting? Any guidance on model selection, configuration, or debugging would be greatly appreciated. Please let me know if further details about my setup or versions are needed.

Thanks! (Video of the problem attached.)

- callin.io version: 1.80.3

- Database (default: SQLite): Postgres (local)

- callin.io EXECUTIONS_PROCESS setting (default: own, main):

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): Docker

- Operating system: Ubuntu (latest)

It seems your topic is missing some crucial details. Could you please provide the following information, if relevant?

- callin.io version:

- Database (default: SQLite):

- callin.io EXECUTIONS_PROCESS setting (default: own, main):

- Running callin.io via (Docker, npm, callin.io cloud, desktop app):

- Operating system:

Please share these details to help us understand the issue better.

Hi, could you please tell us:

- Which specific model versions are you using with each provider?

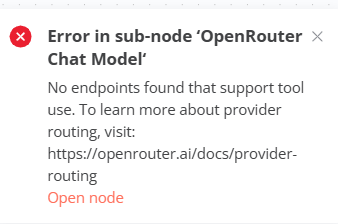

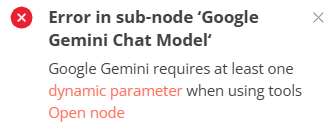

- What error messages are displayed when you attempt to use non-Groq providers?

The models you are using have different levels of function-calling support, which the AI Agent node depends on:

- OpenRouter model considerations:

  - qwen2.5-vl-72b: This vision-language model has inconsistent function-calling support.

  - dolphin3.0-mistral-24b: This model requires a specific function-calling format.

  - llama-3.3-70b-instruct: This model should work, but may need specific settings.
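To check a model's function-calling support outside callin.io, one option is to send the provider a raw OpenAI-style chat-completions request that offers a tool, and see whether a structured `tool_calls` entry comes back. The Python sketch below builds such a request with the standard library only. The `get_weather` tool, the prompt, and the helper names are hypothetical; the base URLs are the OpenAI-compatible endpoints that OpenAI, Groq, and OpenRouter publish, but verify them against each provider's docs.

```python
import json
import urllib.request

# OpenAI-compatible base URLs published by each provider (verify against their docs).
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "groq": "https://api.groq.com/openai/v1",
    "openrouter": "https://openrouter.ai/api/v1",
}

# Hypothetical tool, offered only to see whether the model decides to call it.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_tool_request(provider: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST that offers one tool."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": "What is the weather in Paris right now?"}],
        "tools": [WEATHER_TOOL],
        "tool_choice": "auto",  # let the model decide whether to call the tool
    }
    return urllib.request.Request(
        url=BASE_URLS[provider] + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> dict:
    """Send the request (real network call) and return the assistant message."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["choices"][0]["message"]
```

Calling `send(build_tool_request("openrouter", "meta-llama/llama-3.3-70b-instruct:free", key))`, and then the same with `"groq"` and Groq's ID for that model, compares the two providers directly. If one of them returns a message without `tool_calls`, the problem is more likely the provider than callin.io.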

Consider these alternatives for debugging purposes:

1. anthropic/claude-3-opus:function-calling

2. anthropic/claude-3-sonnet:function-calling

3. openai/gpt-4o:free

Thanks! I switched to GPT-4o-mini, and the tool started working. I'll explore alternatives on OpenRouter. It's odd, though, that meta-llama/llama-3.3-70b-instruct:free doesn't work on OpenRouter when it works fine on Groq.

- Have you checked OpenRouter's configuration for differences in function-calling format?

The difference you're seeing between Groq and OpenRouter for the same model (llama-3.3-70b-instruct) probably stems from how each service handles function calling:

- Implementation differences:

  - Groq might have built custom function-calling wrappers around Llama 3.3.

  - OpenRouter could be using a more direct implementation without these additions.

- Models that work with the AI Agent node:

  - Keep using GPT-4o-mini, since it works with your setup.
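One way to see the Groq/OpenRouter difference described above is to look at what comes back for the same prompt: a structured `tool_calls` list, or a tool call written out as plain text in `content`, which an agent cannot execute. The heuristic below is a hypothetical helper, not part of callin.io. It assumes a text-emitted call is a JSON object with a `name` (or `function.name`) field matching one of the offered tools.

```python
import json
from typing import Any


def classify_tool_response(message: dict[str, Any], tool_names: set[str]) -> str:
    """Classify one assistant message from a chat-completions response.

    Returns:
      "native": the provider returned structured message["tool_calls"].
      "text":   no tool_calls, but the content contains a JSON object naming an
                offered tool (the model tried to call it, but the call was not
                turned into a structured tool call).
      "none":   neither; the model answered in prose.
    """
    if message.get("tool_calls"):
        return "native"

    content = message.get("content") or ""
    if not isinstance(content, str):
        return "none"

    # Heuristic: take the span from the first "{" to the last "}" and try to parse it.
    start, end = content.find("{"), content.rfind("}")
    if start == -1 or end < start:
        return "none"
    try:
        candidate = json.loads(content[start : end + 1])
    except json.JSONDecodeError:
        return "none"

    # Accept either {"name": ...} or {"function": {"name": ...}} (assumed shapes).
    name = candidate.get("name")
    function = candidate.get("function")
    if name is None and isinstance(function, dict):
        name = function.get("name")
    return "text" if isinstance(name, str) and name in tool_names else "none"
```

A result of `"text"` from one provider and `"native"` from another, for the same model and prompt, would be consistent with the wrapper explanation above.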

For alternatives via OpenRouter, consider trying:

- anthropic/claude-3-haiku:function-calling (more cost-effective than opus/sonnet)

- mistralai/mistral-large:function-calling

The callin.io AI Agent node needs reliable function-calling support that follows OpenAI's implementation. Not every provider delivers this, even when serving the same base model.
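The OpenAI-format requirement can be made concrete with a small checker for one entry of `message["tool_calls"]`. The expected shape follows OpenAI's chat-completions tool-call format (`id`, `type: "function"`, and `function.arguments` as a JSON-encoded string). The helper name and messages are hypothetical, and which of these deviations actually break the callin.io AI Agent node is an assumption, not something confirmed in this thread.

```python
import json
from typing import Any


def tool_call_problems(tool_call: dict[str, Any], tool_names: set[str]) -> list[str]:
    """List the ways a tool call deviates from the OpenAI chat-completions shape.

    Expected shape:
      {"id": str, "type": "function",
       "function": {"name": str, "arguments": <JSON-encoded object as a string>}}
    An empty list means the call looks well-formed.
    """
    problems: list[str] = []
    tool_id = tool_call.get("id")
    if not isinstance(tool_id, str) or not tool_id:
        problems.append("missing or empty 'id'")
    if tool_call.get("type") != "function":
        problems.append("'type' is not 'function'")

    fn = tool_call.get("function")
    if not isinstance(fn, dict):
        problems.append("missing 'function' object")
        return problems
    if fn.get("name") not in tool_names:
        problems.append(f"unknown tool name {fn.get('name')!r}")

    args = fn.get("arguments")
    if not isinstance(args, str):
        # OpenAI sends arguments as a JSON string, not an already-parsed object.
        problems.append("'arguments' is not a JSON string")
    else:
        try:
            if not isinstance(json.loads(args), dict):
                problems.append("'arguments' does not decode to a JSON object")
        except json.JSONDecodeError:
            problems.append("'arguments' is not valid JSON")
    return problems
```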

If this response resolved your issue, please consider marking it as the solution! A like would be greatly appreciated if you found it helpful!


This discussion was automatically closed 90 days following the last response. New responses are no longer permitted.