Describe the problem/error/question

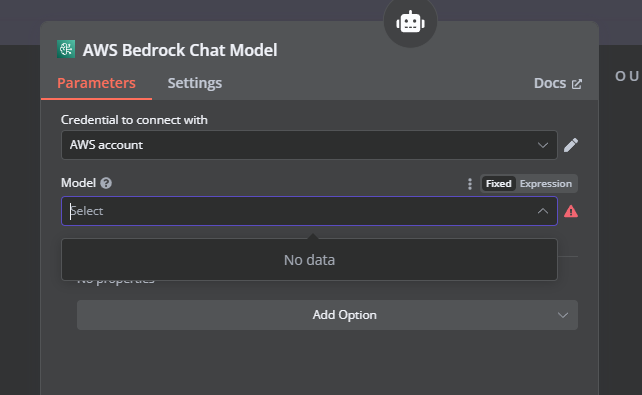

I'm trying to use the callin.io AI Agent with an AWS Bedrock model, but the models are not loading.

What is the error message (if any)?

There are no error messages.

Please share your workflow

Share the output returned by the last node

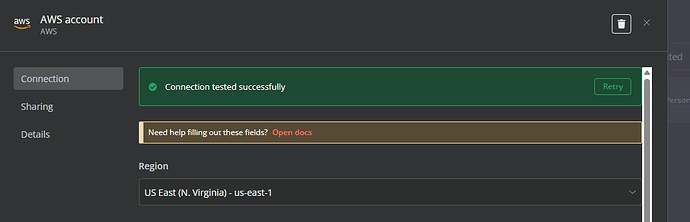

My credentials appear to be correct, and these keys have full Bedrock access.

Information on your n8n setup

- callin.io version: 1.82.3

- Database (default: SQLite): default

- callin.io EXECUTIONS_PROCESS setting (default: own, main): default

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): npx

- Operating system: Windows 11

Hey, AWS is my thing


Check this out — if your credentials are all good and you’ve got full access to Bedrock, the issue might be one of these:

Have you tried this?

- Activate the models in the AWS Bedrock Console

  Even with full access, some models (like Claude, Jurassic, and Titan) must be manually enabled for each account and region. Go to Model access in the Bedrock Console and make sure the models are enabled for use.

- Double-check IAM permissions

  Sometimes “full access” doesn’t include everything the SDK needs. Make sure your user or role has the following permissions:

  - bedrock:ListFoundationModels
  - bedrock:InvokeModel
  - bedrock:InvokeModelWithResponseStream

- Confirm the correct region in callin.io

  us-east-1 is a common region for Bedrock, but Bedrock is offered in several regions. If the region in your callin.io AWS credential doesn’t match the region where you enabled the models, the model list might fail to load.

- Reload the model dropdown

  Occasionally, the dropdown just freezes. Try reopening the workflow, refreshing the browser tab, or even restarting callin.io (especially if you’re running it locally or via npx).
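For reference, here's a rough sketch of what the permission and region checks above boil down to, written in Python so it can be run and printed. It assumes an IAM identity policy attached to your user or role, and models invoked directly by foundation-model ID (cross-region inference profiles would need extra resources). bedrock_policy and bedrock_endpoints are made-up helper names for illustration, not part of callin.io or any AWS SDK.

```python
import json

# The three actions listed above.
BEDROCK_ACTIONS = [
    "bedrock:ListFoundationModels",
    "bedrock:InvokeModel",
    "bedrock:InvokeModelWithResponseStream",
]


def bedrock_endpoints(region: str) -> dict:
    """Hypothetical helper: both endpoints should use the same region
    as the AWS credential configured in callin.io."""
    return {
        "control_plane": f"https://bedrock.{region}.amazonaws.com",  # ListFoundationModels
        "runtime": f"https://bedrock-runtime.{region}.amazonaws.com",  # InvokeModel*
    }


def bedrock_policy(region: str) -> dict:
    """Hypothetical helper: minimal identity-based policy for the actions above."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing models is not restricted to specific resources.
                "Sid": "ListBedrockModels",
                "Effect": "Allow",
                "Action": ["bedrock:ListFoundationModels"],
                "Resource": "*",
            },
            {
                # Foundation-model ARNs leave the account ID field empty.
                "Sid": "InvokeBedrockModels",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/*",
            },
        ],
    }


if __name__ == "__main__":
    region = "us-east-1"  # assumption: must match the region in the callin.io credential
    print(json.dumps(bedrock_policy(region), indent=2))
    print(bedrock_endpoints(region))
```

If the policy attached to your keys is narrower than this, or points at a different region than the credential, that's a likely reason for an empty model list.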

If everything on the AWS side looks good, this might be a bug in callin.io. I’ve seen a few recent reports of Bedrock integration issues in newer versions of the node.

If you want, I can send you a quick example using the HTTP Request node to call Bedrock directly while the bug gets sorted out.

Happy to dig deeper with you if needed.

– Dandy

Hello Dandy

I spent the last week trying all of this, and everything appears to be in order:

1 - The model is activated, and the account has access to those models (I'm using Claude 3.5 Sonnet).

2 - Initially, I was using full access to the model, and then I switched to individual, granular permissions.

3 - Yes, same region.

4 - I was using it via Docker and thought it might be a port or network isolation issue, so I switched to npx.

Unfortunately, with no success. I moved to Ollama, and it worked just fine. My only problem is with Bedrock, and I'd like to use that.


I'm available to investigate further together.


Okay, let's dive into this. If all your configurations are correct (model enabled, proper permissions, correct region, no network restrictions), the issue might be specific to how callin.io interacts with Bedrock's SDK, particularly with Claude 3.5.

Here's a step that could help us narrow down the problem:

Try invoking the Bedrock API directly using an HTTP Request Node, separate from the AI Agent workflow. This will help us determine if the problem lies with the Agent node itself or with the API access.

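Here's a basic example of the call (Claude 3.5 Sonnet in us-east-1; adjust the model ID and region to match your setup):

URL: https://bedrock-runtime.us-east-1.amazonaws.com/model/anthropic.claude-3-5-sonnet-20240620-v1:0/invoke

Method: POST

Headers:

```json
{
  "Content-Type": "application/json",
  "Accept": "application/json"
}
```

Don't add an X-Amz-Target header (this endpoint doesn't need it) or a placeholder Bearer token. The request has to be signed with AWS Signature Version 4 (SigV4) using the same access key and secret as your callin.io credential, so pick your AWS credential in the HTTP Request node's authentication settings (Predefined Credential Type, if your version offers it) and let it generate the Authorization header.

Body:

```json
{
  "anthropic_version": "bedrock-2023-05-31",
  "max_tokens": 300,
  "system": "You are a helpful assistant.",
  "messages": [
    { "role": "user", "content": "Say hello." }
  ]
}
```

Claude 3 and 3.5 models on Bedrock only accept this Messages API format; the older prompt / max_tokens_to_sample fields work only with the earlier Claude models (Claude 2.x and Claude Instant).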

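If this direct call via the HTTP Request node succeeds, the problem is most likely with the Agent node or its model parsing. Keep in mind that the model dropdown most likely relies on listing models (ListFoundationModels) rather than invoking one, so a successful call doesn't completely rule out a permission problem with listing. If the call fails, the error AWS returns (for example, an access-denied or validation error) should point to what's wrong on the AWS side.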
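If you'd rather test outside callin.io entirely, here's a rough Python sketch (standard library only) that builds a SigV4-signed InvokeModel request for Claude 3.5 Sonnet in us-east-1 with a Messages API body. It assumes long-term IAM user keys (no session token) and uses the signing name bedrock, which is what the AWS SDKs use for the runtime endpoint as far as I know. build_invoke_request and sign_request are made-up helper names, the keys are placeholders, and the double encoding of the path follows my reading of the SigV4 rule for non-S3 services. In practice, boto3's bedrock-runtime client (invoke_model) handles all of this for you.

```python
import datetime
import hashlib
import hmac
import json
import urllib.parse


def _sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def build_invoke_request(model_id, region, system, user_text, max_tokens=300):
    """Hypothetical helper: host, path and Messages API body for InvokeModel."""
    host = f"bedrock-runtime.{region}.amazonaws.com"
    # The ":" in the model ID is percent-encoded in the request path.
    path = "/model/" + urllib.parse.quote(model_id, safe="") + "/invoke"
    body = json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system,
        "messages": [{"role": "user", "content": user_text}],
    }).encode("utf-8")
    return host, path, body


def sign_request(method, host, path, body, region, access_key, secret_key,
                 now=None, service="bedrock"):
    """Hypothetical helper: SigV4 headers for a request with no query string
    and no session token (long-term IAM user keys assumed)."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")

    # 1. Canonical request. For non-S3 services the already-encoded path is
    #    encoded once more, so "%3A" becomes "%253A".
    canonical_uri = urllib.parse.quote(path, safe="/")
    signed = {"content-type": "application/json", "host": host, "x-amz-date": amz_date}
    names = sorted(signed)
    canonical_headers = "".join(f"{n}:{signed[n]}\n" for n in names)
    signed_headers = ";".join(names)
    canonical_request = "\n".join(
        [method, canonical_uri, "", canonical_headers, signed_headers, _sha256_hex(body)]
    )

    # 2. String to sign, scoped to date/region/service.
    scope = f"{date_stamp}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join(
        ["AWS4-HMAC-SHA256", amz_date, scope, _sha256_hex(canonical_request.encode("utf-8"))]
    )

    # 3. Derive the signing key (date -> region -> service -> aws4_request) and sign.
    key = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, service, "aws4_request"):
        key = _hmac_sha256(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    return {
        "Content-Type": "application/json",
        "Accept": "application/json",
        "X-Amz-Date": amz_date,
        "Authorization": (
            f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
            f"SignedHeaders={signed_headers}, Signature={signature}"
        ),
    }


if __name__ == "__main__":
    host, path, body = build_invoke_request(
        "anthropic.claude-3-5-sonnet-20240620-v1:0", "us-east-1",
        "You are a helpful assistant.", "Say hello.",
    )
    # Placeholder keys; never hard-code real credentials.
    headers = sign_request("POST", host, path, body, "us-east-1", "AKIDEXAMPLE", "SECRETEXAMPLE")
    print(f"POST https://{host}{path}")
    print(headers["Authorization"])
    # To actually send it, pass urllib.request.Request(f"https://{host}{path}",
    # data=body, headers=headers, method="POST") to urllib.request.urlopen().
```

Running it only prints the request line and the Authorization header; nothing goes over the network. With real keys and a real call, a 403 would point at the keys, region or permissions, while a 400 would point at the body format.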

Please let me know if this approach yields any results.
