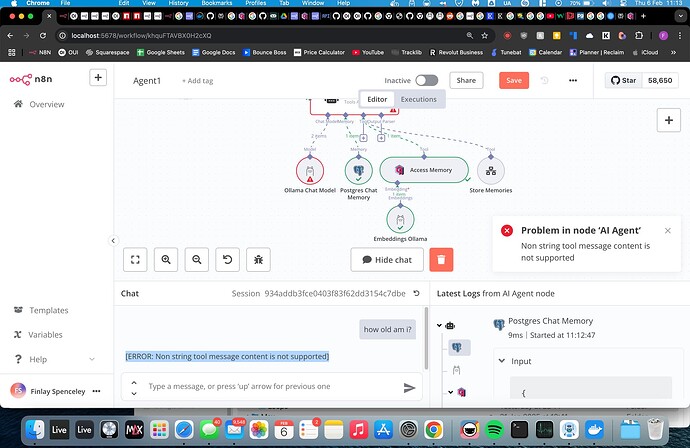

I've been encountering an issue for a while now. It seems to occur with all Ollama models but not with OpenAI. Is there a specific option I need to configure? This problem makes tools within agents unusable with Ollama.

Here's the workflow:

```json
{
  "nodes": [
    {
      "parameters": {
        "promptType": "define",
        "text": "=Date: {{ $now }}\n\nUser Prompt: {{ $json.chatInput }}",
        "hasOutputParser": true,
        "options": {
          "systemMessage": "You have access to two long term memory tools (different to the conversation history), you can store and retrieve long term memories. always retrieve memories from the qdrant vector store (Access Memory Tool) to see if they add any context that could be useful in aiding you to respond to the users query.\n\nIMPORTANT: Do not create long term memories on every user prompt, always determine if its something worth remembering.\n\nCreate a long term memory using the memory tool whenever you believe that something the user said is worth remembering, for example 'i don't like cheese' or 'i'm size 8 shoes' - these could be useful in later conversations. DO NOT USE THIS TOOL ON EVERY USER PROMPT, only store memories worth remembering."
        }
      },
      "type": "@n8n/n8n-nodes-langchain.agent",
      "typeVersion": 1.7,
      "position": [220, 0],
      "id": "a098d361-14d7-4b08-8a00-7dce7882c589",
      "name": "AI Agent"
    },
    {
      "parameters": {
        "model": "command-r7b:latest",
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatOllama",
      "typeVersion": 1,
      "position": [120, 260],
      "id": "ccdb57cd-fc92-4c07-87d2-08047a172429",
      "name": "Ollama Chat Model",
      "credentials": {
        "ollamaApi": {
          "id": "OyXUCOXv8zh5NSmM",
          "name": "Ollama account"
        }
      }
    },
    {
      "parameters": {
        "tableName": "n8ntestchats"
      },
      "type": "@n8n/n8n-nodes-langchain.memoryPostgresChat",
      "typeVersion": 1.3,
      "position": [280, 260],
      "id": "922fdd15-a5b0-49fa-8902-fa26274e4f48",
      "name": "Postgres Chat Memory",
      "credentials": {
        "postgres": {
          "id": "CCxoJS7PuMPUDtxT",
          "name": "Postgres account"
        }
      }
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [-120, 0],
      "id": "f16fdac4-1c53-4bda-a680-3f775b2caecb",
      "name": "When chat message received",
      "webhookId": "e250c0ef-9983-4f43-9fbe-0bce74d9c403"
    },
    {
      "parameters": {
        "model": "mxbai-embed-large:latest"
      },
      "type": "@n8n/n8n-nodes-langchain.embeddingsOllama",
      "typeVersion": 1,
      "position": [440, 360],
      "id": "3ea0cf81-9565-4bd4-b9e9-c84f1eab9f74",
      "name": "Embeddings Ollama",
      "credentials": {
        "ollamaApi": {
          "id": "OyXUCOXv8zh5NSmM",
          "name": "Ollama account"
        }
      }
    },
    {
      "parameters": {
        "name": "storeMemories",
        "description": "Call this tool whenever the user discloses or provides information about themselves you thin should be remembered long term. Call this tool whenever you feel storing a memory would aid and assist in future conversations where the conversation memory will have been forgotten. Input the memory in the memory field, and the memory topic in the memory topic field",
        "workflowId": {
          "__rl": true,
          "value": "x4dxqhsUH07d8Ht9",
          "mode": "list",
          "cachedResultName": "Memory Store"
        },
        "workflowInputs": {
          "mappingMode": "defineBelow",
          "value": {
            "memory": "={{ $fromai('memorytostore') }}",
            "memoryTopic": "={{ $fromai('topicofmemory') }}"
          },
          "matchingColumns": [],
          "schema": [
            {
              "id": "memory",
              "displayName": "memory",
              "required": false,
              "defaultMatch": false,
              "display": true,
              "canBeUsedToMatch": true,
              "type": "string"
            },
            {
              "id": "memoryTopic",
              "displayName": "memoryTopic",
              "required": false,
              "defaultMatch": false,
              "display": true,
              "canBeUsedToMatch": true,
              "type": "string"
            }
          ],
          "attemptToConvertTypes": false,
          "convertFieldsToString": false
        }
      },
      "type": "@n8n/n8n-nodes-langchain.toolWorkflow",
      "typeVersion": 2,
      "position": [700, 220],
      "id": "c76f26c8-b4ca-432d-b048-23c95bdd3cb6",
      "name": "Store Memories"
    },
    {
      "parameters": {
        "mode": "retrieve-as-tool",
        "toolName": "userlongtermmemory",
        "toolDescription": "This tool allows you to access memories you have created about the user. Call it in every chat, and if relevant, use your memories about the user to tailor your response.\n\nAlways output as string.",
        "qdrantCollection": {
          "__rl": true,
          "value": "memories",
          "mode": "list",
          "cachedResultName": "memories"
        },
        "includeDocumentMetadata": false,
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.vectorStoreQdrant",
      "typeVersion": 1,
      "position": [420, 220],
      "id": "ec643690-b571-4ca8-bd17-aa10fc6e1a0f",
      "name": "Access Memory",
      "credentials": {
        "qdrantApi": {
          "id": "jgWIiGVLBrPh9fcY",
          "name": "QdrantApi account"
        }
      }
    }
  ],
  "connections": {
    "Ollama Chat Model": {
      "ai_languageModel": [
        [
          {
            "node": "AI Agent",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    },
    "Postgres Chat Memory": {
      "ai_memory": [
        [
          {
            "node": "AI Agent",
            "type": "ai_memory",
            "index": 0
          }
        ]
      ]
    },
    "When chat message received": {
      "main": [
        [
          {
            "node": "AI Agent",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Embeddings Ollama": {
      "ai_embedding": [
        [
          {
            "node": "Access Memory",
            "type": "ai_embedding",
            "index": 0
          }
        ]
      ]
    },
    "Store Memories": {
      "ai_tool": [
        [
          {
            "node": "AI Agent",
            "type": "ai_tool",
            "index": 0
          }
        ]
      ]
    },
    "Access Memory": {
      "ai_tool": [
        [
          {
            "node": "AI Agent",
            "type": "ai_tool",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "558d88703fb65b2d0e44613bc35916258b0f0bf983c5d4730c00c424b77ca36a"
  }
}
```

Information on your callin.io setup:

- callin.io version: 1.76.3

- Database (default: SQLite): default

- Running callin.io via Docker

- Operating system: macOS Sonoma 14.7

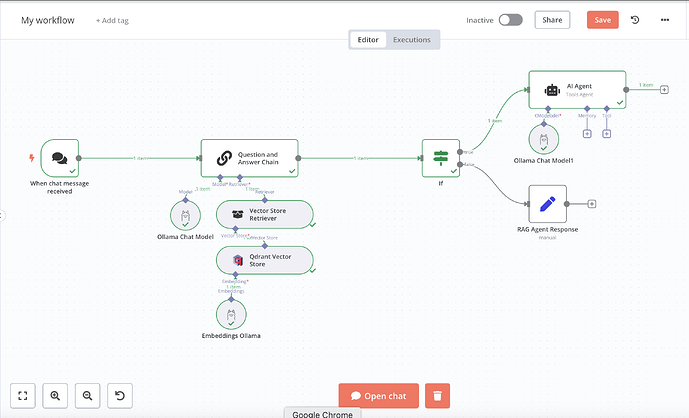

I haven't quite figured this out yet, unfortunately. My current workaround involves placing an 'ask questions about documents' node at the very start. If this node returns 'I don't know.', the conversation is then routed to another agent. This approach allows me to still ask questions about my vector store, but it significantly restricts my options when I need to invoke more than one tool/node. However, this seems to resolve the 'tool returned non-string' error, which suggests that Ollama can indeed process the returned data.

I'm considering that the most effective approach for now might be to develop an external workflow to manage document retrieval, including some JavaScript cleanup at the end. I haven't tested this yet, and it's not an ideal solution as it lengthens the overall process and consumes more computing resources.

I'm encountering a similar problem with Groq when using Llama. Please share if you discover a solution.

I'm experiencing this error as well.

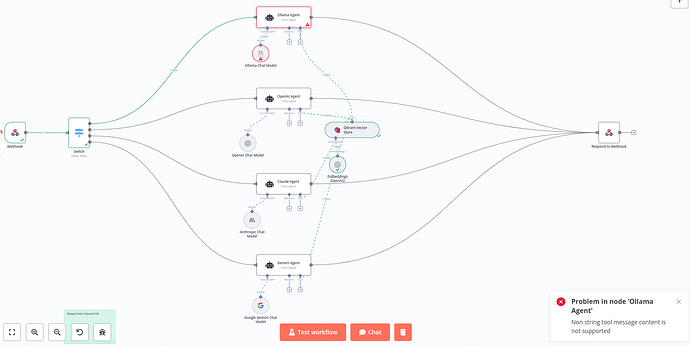

I can confirm this is an Ollama issue related to Qdrant. I recently set up something similar and encountered the same error, which is quite frustrating. The workaround provided by Finlay is suitable for the time being.

I'm encountering the identical problem and would be grateful if you could share a screenshot of your resolution!

Here’s an example workflow I’ve put together to illustrate the concept. It has some limitations but functions for now.

Here’s the prompt for the question and answer chain RAG agent:

*You are a document retrieval agent with RAG capabilities.

RULES:

Search the qdrant database with the user query; if you can find information related to the query, answer the question.

IMPORTANT: If you cannot find any information relevant to the user query, respond exactly with ‘I don’t know’ - in that exact format, without any additional words or context - just explicitly write ‘I don’t know’

Example Interaction 1:

User: What does the gen~ object do in Max?

(Search Vector Database)

--- Vector search returns no relevant results

AI: I don’t know

Example Interaction 2:

User: What’s the company policy for leaving early?

(Search Vector Database)

--- Vector search returns relevant results

AI: The company policy on leaving early states that you must notify your line manager at least 1 hour before leaving early; if you don’t, you may be subject to an internal investigation.*

The If node checks if the response is ‘I don’t know’. If it is, the request is sent to the agent; otherwise, it returns the response from the question and answer chain.
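An exact string comparison in the If node can miss replies that differ only in apostrophe style, case, or trailing punctuation. As a rough sketch of a more forgiving check (for example in a Code node placed before the If node), the helper below normalizes the reply first. The function name `isDontKnow` is hypothetical and not part of the workflow above.

```javascript
// Hypothetical helper: returns true when the chain's reply is effectively
// "I don't know", ignoring apostrophe style, case, and trailing punctuation.
function isDontKnow(answer) {
  const normalized = String(answer ?? "")
    .replace(/[\u2018\u2019]/g, "'") // curly apostrophes -> straight
    .trim()
    .replace(/[.!]+$/, "") // drop trailing "." or "!"
    .toLowerCase();
  return normalized === "i don't know";
}
```

A reply such as "I don't know the policy" still counts as a real answer, so only the bare fallback phrase is routed to the agent.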

Hi,

I encountered a similar problem with Qdrant and Ollama.

I managed to resolve it by setting up a sub-workflow. It contains the Qdrant Vector Store node configured with the Get Many operation mode. For the prompt, I passed the user's query directly using {{ $json.query }}. I then converted the output to text in a Code node using JSON.stringify.

edit:

This approach is compatible with AI agents and multiple tools. In my setup, I retrieve data and then pass it to the subsequent tool via the AI agent.
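To illustrate the idea of handing the agent a single string instead of structured items, here is a minimal sketch of the conversion step as a standalone function. It assumes each retrieved item has the shape `item.json.document` with `pageContent` and `metadata` fields; the `pageContent` field and the name `resultsToText` are assumptions, so adjust them to whatever your vector store node actually returns.

```javascript
// Hypothetical helper: flatten vector store results into one plain string,
// one JSON-encoded document per line.
function resultsToText(items) {
  return items
    .map((item) => {
      // Assumed item shape: { json: { document: { pageContent, metadata } } }
      const doc = (item.json && item.json.document) || {};
      return JSON.stringify({
        content: doc.pageContent ?? null,
        metadata: doc.metadata ?? {},
      });
    })
    .join("\n");
}
```

Inside a Code node this could be used as `return [{ json: { text: resultsToText($input.all()) } }];`, so the sub-workflow ends with a single text field.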

My current solution involves utilizing the OpenAI Chat Model and configuring the base URL to point to my local Ollama server at http://localhost:11434/v1 within my credentials.
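For anyone who wants to check that the OpenAI-compatible endpoint responds before changing credentials, the sketch below builds a chat completion request against that base URL. The helper name `buildOllamaChatRequest` is hypothetical, the model name is taken from the workflow earlier in this thread, and the API key value is a placeholder, on the assumption that a local Ollama server does not validate it.

```javascript
// Hypothetical helper: build a request for Ollama's OpenAI-compatible API.
function buildOllamaChatRequest(model, userMessage, baseUrl = "http://localhost:11434/v1") {
  return {
    // Strip trailing slashes so the path is joined cleanly.
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: "Bearer ollama", // placeholder key (assumption: not checked locally)
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: userMessage }],
      }),
    },
  };
}

// Usage (requires a running Ollama server):
// const req = buildOllamaChatRequest("command-r7b:latest", "Hello");
// const res = await fetch(req.url, req.options);
```

When callin.io runs in Docker, `localhost` inside the container refers to the container itself, so the base URL may need to point at the host instead (for example `http://host.docker.internal:11434/v1` on Docker Desktop).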

Hello,

I’m encountering the same problem. I attempted the workaround suggested, but the error continues to occur.

Could you share more details about your subflow?

The sub workflow is structured as follows:

The Workflow utilizes the chatInput for the prompt:

The code node is configured as follows:

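```javascript
// Build a plain-text field from each retrieved document's metadata so the
// agent receives a string rather than a nested object.
for (const item of $input.all()) {
  // Fall back to an empty object if a result has no metadata.
  const metadata = item.json.document?.metadata ?? {};
  const newItem = {
    sql_query: metadata.sql_query,
    response_format: metadata.response_format,
  };
  item.json.text_string = JSON.stringify(newItem);
}

return $input.all();
```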

Which LLM model are you using? When I try it with the OpenAI chat model node, it responds the same. Are there any special startup/environment settings for Ollama?

Appreciate your assistance. I'm still encountering the same problem. I'll await a resolution.