Describe the problem/error/question

Hi, I'm looking for some assistance with a problem.

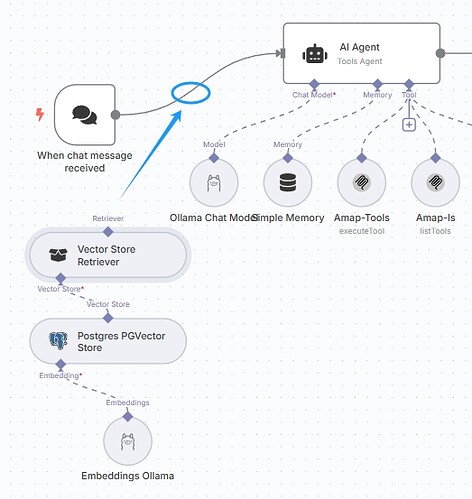

How can I insert a vector store retriever module between the “chat message received” trigger and the “AI agent” node?

When building an AI Agent flow, I need to call some MCP services. Before that, I need to augment the incoming message with additional information, similar to RAG (specifically, adding retrieved content to the context directly, rather than deriving it from LLM output).

Is there a method to integrate the Vector Store Retriever before the AI Agent? Alternatively, is there a way to prevent the LLM module from generating output? Thank you for your guidance.

Information on your n8n setup

- n8n version: 1.93.0

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker

Thanks for your help.


There are other options you can explore, but you'll find the vector store nodes by clicking the Plus (add node) button → AI → Other AI Nodes → Vector Stores.
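To make the "retrieve first, then hand off to the agent" idea from the question concrete, here is a rough Python sketch of the merging step. It assumes a vector store node has already run as a regular workflow step and returned some text chunks. The function name `build_augmented_prompt` and its parameters are hypothetical and not part of any n8n API; it only shows how retrieved text might be prepended to the chat message without an LLM call in between.

```python
from typing import List


def build_augmented_prompt(
    chat_input: str,
    retrieved_chunks: List[str],
    max_chunks: int = 3,  # hypothetical cap on how many chunks to include
) -> str:
    """Prepend retrieved context to the user's message (illustrative only)."""
    # Drop empty chunks, trim whitespace, and keep at most max_chunks of them.
    context = [c.strip() for c in retrieved_chunks if c.strip()][:max_chunks]

    # With no usable context, pass the original message through unchanged.
    if not context:
        return chat_input

    # Number the chunks so the agent can tell them apart.
    numbered = "\n".join(f"[{i}] {c}" for i, c in enumerate(context, start=1))
    return f"Context:\n{numbered}\n\nQuestion: {chat_input}"
```

Something along these lines could run in a Code node placed between the retrieval step and the AI Agent, with the returned string used as the agent's prompt, though the exact wiring depends on your workflow.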
