Hello,

I've searched the forum but couldn't find an answer to this.

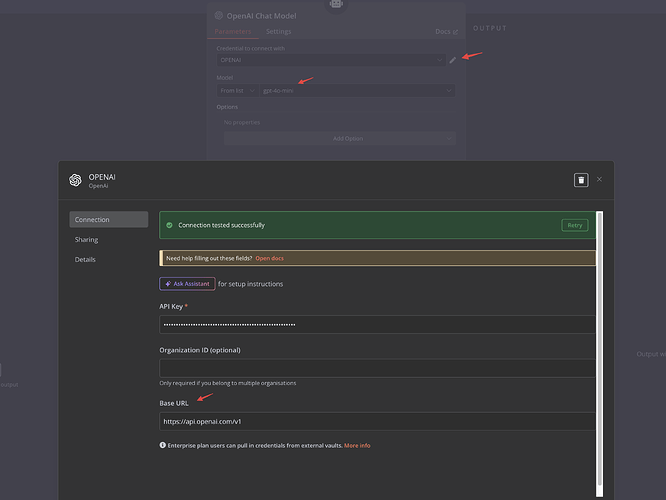

I'm working on an offline network and want to connect callin.io to a language model through an HTTP gateway I've set up.

Is this feasible? Currently it seems I can only choose from the existing models, and they require an API (again, I'm on an offline network).

Thank you very much.

The screenshot above is from someone who asked a similar question.

Information on your callin.io setup

- callin.io version: latest

- Database (default: SQLite): SQLite

- callin.io EXECUTIONS_PROCESS setting (default: own, main): own

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): Docker

- Operating system: Windows

Hi jksr, thank you very much for the quick response.

I don’t have an api_key because I’m working with a model that I uploaded to HTTP myself, and I want to access it.

When I enter a fake api_key such as XXXXXXXXXXXXXX along with the HTTP URL of my model (which works with HTTP triggers), I get “Couldn’t connect with these settings”.

What do you mean by uploaded to HTTP? Is the model running on your own server? And by HTTP trigger, do you mean the HTTP node?

If possible, I recommend configuring an API key; otherwise the endpoint is open to anyone who can reach it.
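If you do add a key, a common convention (the one OpenAI-style clients use) is to send it as an `Authorization: Bearer <key>` header. A minimal server-side check might look like the sketch below. `is_authorized` is a hypothetical helper, and the plain `headers` dictionary stands in for whatever request object your server framework actually provides.

```python
import hmac


def is_authorized(headers: dict, expected_key: str) -> bool:
    """Return True if the request carries 'Authorization: Bearer <expected_key>'.

    Assumption: clients send the key as a bearer token, as OpenAI-style
    clients do. This plain dict lookup is case-sensitive; real frameworks
    usually normalize header names for you.
    """
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme != "Bearer" or not token:
        return False
    # compare_digest runs in constant time, so response timing doesn't leak the key
    return hmac.compare_digest(token.encode(), expected_key.encode())
```

Requests that fail the check would typically be rejected with HTTP 401 before they ever reach the model.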

Hi, yes, my model is hosted on a server I built, and when I call it from an HTTP node I get a response successfully.

I'd like to connect this model to an LLM (chat model) node or the AI Agent node in callin.io.

Regarding the API key, it isn't needed here because the model runs on my private server, and I'm the only one who sends requests to it.

Is it possible to link my model to the AI Agent or LLM model node in callin.io?

This is achievable if your self-hosted model exposes an OpenAI-compatible API. You can find more information in the vLLM Quickstart.
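For reference, an OpenAI-compatible server accepts `POST /v1/chat/completions` with a `model` name and a list of `messages`, and returns the reply under `choices[0].message.content`. If your model expects a different body, one option is a thin translation layer in front of it. The sketch below assumes a hypothetical custom body of the form `{"prompt": ..., "max_tokens": ...}` that returns `{"output": ...}`; the field names, the default of 256 tokens, and both function names are placeholders to replace with whatever your server actually uses.

```python
import time
import uuid


def openai_to_custom(request: dict) -> dict:
    """Translate an OpenAI-style chat request into a hypothetical custom body.

    Assumption: the custom model takes a single 'prompt' string, so the
    chat messages are flattened into 'role: content' lines.
    """
    lines = [f"{m['role']}: {m['content']}" for m in request.get("messages", [])]
    lines.append("assistant:")  # cue the model to answer as the assistant
    return {
        "prompt": "\n".join(lines),
        "max_tokens": request.get("max_tokens", 256),  # hypothetical default
    }


def custom_to_openai(reply: dict, model: str) -> dict:
    """Wrap a hypothetical {'output': ...} reply in the OpenAI response shape."""
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": reply["output"]},
                "finish_reason": "stop",
            }
        ],
    }
```

A working proxy would still need to serve these functions over HTTP (and, depending on the client, other endpoints such as `GET /v1/models`); serving frameworks like vLLM provide all of that out of the box.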

My model's request body looks different from OpenAI's.

Could someone please clarify?

Does your LLM work through a simple API request and response? If so, what would an AI node add?

Perhaps it would be simpler to build a sub-workflow with an input parameter, a Set node for the prompt (which could also be a parameter), and an output webhook to capture the reply?
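The same flow can be written as plain code, which may help when mapping it onto nodes: fill a prompt template from the input (the Set node), make one POST to the model (the HTTP node), and return the reply field. `MODEL_URL`, the `prompt` and `output` field names, and the template text are all hypothetical placeholders for your server's actual endpoint and schema.

```python
import json
import urllib.request

MODEL_URL = "http://my-model-server:8000/generate"  # hypothetical endpoint


def build_request_body(user_input: str, template: str = "Answer briefly: {input}") -> dict:
    """Like the Set node: fill the prompt template with the input parameter."""
    return {"prompt": template.format(input=user_input)}  # hypothetical field name


def extract_reply(response_body: dict) -> str:
    """Like the output step: pull the reply text out of the model's response."""
    return response_body["output"]  # hypothetical field name


def ask_model(user_input: str, url: str = MODEL_URL, timeout: float = 30.0) -> str:
    """Send one prompt to the self-hosted model and return its reply."""
    data = json.dumps(build_request_body(user_input)).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

Keeping the body-building and reply-parsing steps separate from the network call makes them easy to adjust when the model's schema changes.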

Yes, I created an HTTP node, but I suppose there are advantages to a Chat Model node, since I believe the conversation is more continuous there than with a single HTTP call.

I believe the communication is the same either way. The AI node simply makes it easier to configure and integrate tools, API endpoints, models, and so on. I'm currently using a custom node for Google's experimental models, which need a specific request body configuration, and it works perfectly.
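On the continuity point: unless your server keeps its own session state, each HTTP request to the model is independent, and conversational continuity typically comes from resending earlier turns with every call. A minimal sketch of that idea, independent of any particular node, is shown below. `Conversation`, `send`, and the `max_messages` cap are hypothetical names; `send` can wrap any HTTP call to your model that accepts the full message list and returns the reply text.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keep chat history client-side so stateless HTTP calls feel continuous."""

    def __init__(self, send: Callable[[List[Message]], str], max_messages: int = 20):
        self.send = send
        self.max_messages = max_messages  # hypothetical cap to bound prompt size
        self.history: List[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # send only the most recent messages, including the new user turn
        reply = self.send(self.history[-self.max_messages:])
        self.history.append({"role": "assistant", "content": reply})
        return reply


def count_messages(messages: List[Message]) -> str:
    """Stand-in for a real model call: reports how much history it received."""
    return f"seen {len(messages)} messages"


chat = Conversation(count_messages)
chat.ask("Hi")        # "seen 1 messages"
chat.ask("And now?")  # "seen 3 messages": the earlier turn was resent
```

This is roughly the job a memory component does for a chat node; with a plain HTTP node you would manage the history yourself.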

This thread was automatically closed 90 days following the last response. New replies are no longer permitted.