Scenario:

- I am working with callin.io to automate a workflow that involves processing a large volume of data.

- My workflow involves reading 100 rows of data from an upstream database.

- Each row of data needs to be processed by making an API call.

- The result from the API call is then written back to the database.

Requirement:

- I want to optimize the workflow to execute API calls and database writes concurrently.

- Specifically, I am aiming for 10 concurrent executions to improve efficiency and reduce processing time.
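
For illustration only, here is what "10 concurrent executions over 100 rows" looks like outside callin.io, as a minimal Python sketch. `call_api` and `write_result` are hypothetical stand-ins for the real API call and database write, and the in-memory `store` dict is an assumption made so the example runs on its own.

```python
import asyncio

MAX_CONCURRENCY = 10  # target from the requirement above


async def call_api(row: dict) -> dict:
    """Hypothetical stand-in for the real API call."""
    await asyncio.sleep(0.01)  # simulate network latency
    return {"id": row["id"], "result": row["value"] * 2}


async def write_result(store: dict, result: dict) -> None:
    """Hypothetical stand-in for the database write."""
    await asyncio.sleep(0.005)
    store[result["id"]] = result["result"]


async def process_rows(rows, limit=MAX_CONCURRENCY):
    """Process every row, never running more than `limit` rows at once."""
    semaphore = asyncio.Semaphore(limit)
    store = {}
    in_flight = 0
    peak = 0  # highest number of rows processed at the same time

    async def handle(row):
        nonlocal in_flight, peak
        async with semaphore:  # blocks while `limit` rows are already running
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                result = await call_api(row)
                await write_result(store, result)
            finally:
                in_flight -= 1

    await asyncio.gather(*(handle(row) for row in rows))
    return store, peak


def run_demo():
    rows = [{"id": i, "value": i} for i in range(100)]
    return asyncio.run(process_rows(rows))


if __name__ == "__main__":
    store, peak = run_demo()
    print(len(store), "rows written, peak concurrency", peak)
```

The semaphore keeps a steady cap: as soon as one row finishes, the next one starts, so the API call and the write for different rows overlap.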

Welcome to the community!

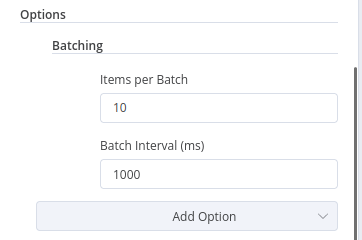

That is possible with the HTTP Request node by adding the option “Batching”:
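
Conceptually, and assuming the Batching option behaves the way such options usually do (send a fixed number of items at once, then optionally pause before the next group), the behaviour can be sketched in Python like this. The names `batch_size`, `batch_interval_s`, and `fake_request` are made up for the sketch and are not callin.io settings.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def fake_request(item):
    """Hypothetical stand-in for one HTTP request."""
    time.sleep(0.01)  # simulate network latency
    return item * 2


def run_in_batches(items, batch_size=10, batch_interval_s=0.0, request=fake_request):
    """Send `batch_size` requests at a time, pausing between batches."""
    results = []
    batches = list(chunked(items, batch_size))
    with ThreadPoolExecutor(max_workers=batch_size) as pool:
        for index, batch in enumerate(batches):
            # extend() consumes the map iterator, so it waits for the whole batch
            results.extend(pool.map(request, batch))
            if batch_interval_s and index < len(batches) - 1:
                time.sleep(batch_interval_s)
    return results


if __name__ == "__main__":
    print(run_in_batches(list(range(100)))[:5])
```

Note that a batch only finishes when its slowest request returns, so this is a cap of 10 requests at once rather than a steady 10 in flight.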

Hi there,

If your requirement is limited to API requests, the advice above will work well. For more flexibility, though, I recommend RabbitMQ. After fetching the data, your flow pushes the items as messages to a queue, and a separate workflow with a RabbitMQ trigger processes them. This lets you combine the options that suit your case, such as limiting parallel processing to X instances and acknowledging messages only when the flow completes.
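
The queue pattern can be sketched without a broker. The Python example below is an in-process stand-in, not RabbitMQ itself: `queue.Queue` plays the role of the message queue, each worker thread plays the role of one consumer, and `task_done()` is used as a rough analogue of acknowledging a message after processing. The queue names and the limits of 10 and 5 are illustrative assumptions.

```python
import queue
import threading
import time


def start_consumers(name, work_queue, handler, concurrency, results, lock):
    """Start `concurrency` workers that each handle one message at a time."""

    def worker():
        while True:
            message = work_queue.get()
            if message is None:  # sentinel: shut this worker down
                work_queue.task_done()
                return
            try:
                outcome = handler(message)
                with lock:
                    results.append((name, outcome))
            finally:
                work_queue.task_done()  # "ack" once the handler has returned

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(concurrency)]
    for thread in threads:
        thread.start()
    return threads


def slow_double(value):
    """Hypothetical stand-in for the per-message work."""
    time.sleep(0.01)
    return value * 2


def run_demo():
    results, lock = [], threading.Lock()
    queues = {"api": queue.Queue(), "llm": queue.Queue()}
    limits = {"api": 10, "llm": 5}  # a separate concurrency limit per queue

    workers = {
        name: start_consumers(name, queues[name], slow_double, limits[name], results, lock)
        for name in queues
    }
    for i in range(100):
        queues["api"].put(i)
    for i in range(20):
        queues["llm"].put(i)

    for name, work_queue in queues.items():
        for _ in workers[name]:
            work_queue.put(None)  # one shutdown sentinel per worker
        work_queue.join()  # wait until every message has been acknowledged
    return results


if __name__ == "__main__":
    print(len(run_demo()), "messages processed")
```

Because each queue has its own set of consumers, different kinds of work get different parallelism limits. With a real broker, the same effect would come from the number of consumers and the prefetch setting on each queue.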

Hope this helps.

My typical workflow receives tasks via HTTP from an upstream source, runs data integration processing, and then needs to call a downstream LLM with roughly 5 requests running concurrently. I haven't found any concurrency settings in the Basic LLM Chain or OpenAI Chat Model configurations.

The earlier suggestion to manage concurrency with RabbitMQ is interesting. Are there concrete examples of it, and how can different tasks be given different concurrency limits? This approach also means adding extra external components. Is callin.io planning to introduce built-in concurrency management in the future? It would be very useful in many scenarios, such as API calls (for instance, to LLMs).

Is this approach comparable to initiating a workflow via a webhook?
