Hi,

I'm working with a basic RAG workflow that utilizes Supabase. Everything functions correctly, except when I pose a multipart question, such as "How do I contact you and what time is X on?"

This type of query results in an error within the Supabase vector store. I've attempted to split the chatInput into multiple parts within the system prompt, but this doesn't consistently resolve the issue. Is there a way to configure the workflow to answer all parts of a multipart question?

- Add a Function Node before your vector search

This node will segment the query into distinct parts:

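// Read the incoming chat message (empty string if missing)

const input = $json.chatInput || '';

// Split on connecting words; \b keeps "and" from matching inside words like "handle"

const parts = input.split(/\b(?:and|then|also)\b|&|,/i).map(p => p.trim()).filter(Boolean);

// Emit one n8n item per sub-question

return parts.map(p => ({ json: { question: p } }));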

- Iterate through each segment using an Item Lists → Split Out node or a Split In Batches node.

- Execute the vector search for each sub-query. Link the output of the Function node to your Supabase vector search.

- Gather and consolidate all responses using a Merge node (Combine mode: Append), or combine them manually in a final Function node.

- Optional: Pass the consolidated response to a Chat Model node for a polished, natural-sounding answer.

This approach prevents vector store failures caused by improperly formatted or ambiguous multi-intent queries, ensuring the user receives a cohesive, multi-part answer.
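One thing to watch when splitting on connecting words: a bare `and` in the pattern also matches inside longer words such as "handle", so word boundaries help. Below is a rough standalone sketch in plain JavaScript that runs outside n8n. `splitQuestion` is just a name made up for illustration, not an n8n function.

```javascript
// Hypothetical helper, not part of n8n: splits a multipart question
// on connecting words and a few separators.
function splitQuestion(text) {
  return String(text ?? '')
    // \b keeps "and" from matching inside words such as "handle"
    .split(/\b(?:and|then|also)\b|&|,/i)
    .map((part) => part.trim())
    .filter(Boolean);
}

console.log(splitQuestion('How do I contact you and what time is X on?'));
// [ 'How do I contact you', 'what time is X on?' ]
console.log(splitQuestion('How do I handle refunds?'));
// [ 'How do I handle refunds?' ]
```

Splitting on keywords like this is still a heuristic, so a question that legitimately contains "and" inside one intent will also be split.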

Thanks! However, I don't believe this will work because you cannot insert a function node before the vector store. The vector store functions as a tool attached to the AI agent.

I've defined this within the AI Agent's prompt, and it appears to handle the initial part of the query adequately. Ideally, perhaps I should place something before the AI Agent to enable it to be called with multiple inputs.

{

"userMessage": "{{(() => {

const raw = $json.chatInput || $json.body?.question || $json?.input || '';

if (typeof raw !== 'string') return 'Fallback query';

const parts = raw.split(/[.?!]\s+/).filter(p => p.length > 10);

return parts[0] || raw;

})()}}"

}

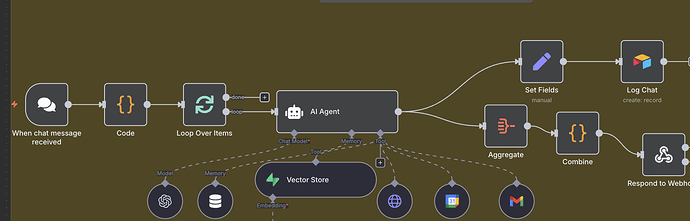

- Divide the user’s query into segments before the AI Agent

- Insert a Function node directly after your trigger with the following code:

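// Read the incoming chat message (empty string if missing)

const raw = $json.chatInput || '';

// Split after sentence-ending punctuation followed by whitespace

const parts = raw

.split(/[.?!]\s+/)

.map(p => p.trim())

.filter(p => p);

// Emit one n8n item per segment

return parts.map(p => ({ json: { chatInput: p } }));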

- This will produce one item for each segment of the question, each with its own $json.chatInput.

- Utilize a SplitInBatches node

- Link the Function node to a SplitInBatches node (set Batch Size to 1).

- This configuration ensures the AI Agent processes each segment individually.

- Connect the AI Agent

- Feed the output from SplitInBatches → AI Agent (with your Supabase vector-store tool activated).

- The agent will subsequently invoke the vector store tool for each segment separately.

- Consolidate the individual responses

- Following the AI Agent, add a Merge node:

- Mode: Combine

- Output Type: Append

- This action gathers all responses from each segment into a single array.

- (Optional) Structure the final reply

- Input the merged array into a Chat Model node with a prompt similar to this:

Here are the answers to each part of your question:

{{ $json.responses.join('\n') }}

- Alternatively, use another Function node for concatenation.

This approach ensures that each sub-question is processed by the vector store tool independently, thereby preventing multi-intent errors.
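For the concatenation step, something along these lines could work in a final Function node. This is only a sketch under assumptions: it assumes each item coming out of the AI Agent carries its reply in an `output` field, and `combineAnswers` is a made-up name, so adjust both to whatever your agent actually returns.

```javascript
// Hypothetical helper: merges per-segment agent replies into one item.
// Assumes each incoming item looks like { json: { output: '...' } }.
function combineAnswers(items) {
  const responses = items
    .map((item) => item.json?.output)
    // Skip items with no usable text
    .filter((text) => typeof text === 'string' && text.trim() !== '')
    .map((text) => text.trim());
  // Return a single n8n-style item holding both the array and the joined text
  return [{ json: { responses, answer: responses.join('\n') } }];
}

// Example with placeholder data (not real agent output)
const merged = combineAnswers([
  { json: { output: 'Answer to part 1' } },
  { json: { output: 'Answer to part 2' } },
]);
console.log(merged[0].json.answer);
```

Keeping the `responses` array alongside the joined `answer` means the same item can feed either a Chat Model prompt or a direct reply.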
