How can I retrieve this information? It's visible in the chat model, but I'm unable to access this data within my workflow.

I've encountered workarounds within the community, but perhaps there's now an official method?

Information on your callin.io setup

- callin.io version: 1.84.3

- Database (default: SQLite): default

- callin.io EXECUTIONS_PROCESS setting (default: own, main): main

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): docker

- Operating system: Ubuntu 24.04

EDIT: Solution Found

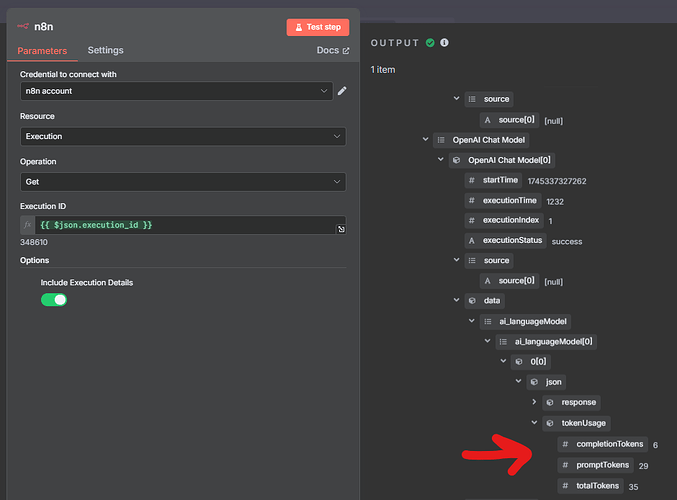

You can retrieve the execution data as a workaround and get the token usage information directly from the LLM model node.
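A minimal sketch of that workaround, assuming you have already fetched the execution's data as JSON (for example through the instance's REST API, if it exposes execution data) and that the chat model node reports a `tokenUsage` object with `promptTokens`, `completionTokens` and `totalTokens` fields. The nesting path can differ between versions, so instead of hard-coding it the helper walks the whole structure and sums every `tokenUsage` object it finds. The field names and the sample payload (including the `runData` / `ai_languageModel` path) are assumptions for illustration, not a documented callin.io schema.

```python
from typing import Any, Dict

# Assumed field names inside each tokenUsage object (not a documented schema).
TOKEN_FIELDS = ("promptTokens", "completionTokens", "totalTokens")


def sum_token_usage(node: Any) -> Dict[str, int]:
    """Recursively walk execution data and sum every `tokenUsage` object found."""
    totals = {field: 0 for field in TOKEN_FIELDS}

    def walk(value: Any) -> None:
        if isinstance(value, dict):
            usage = value.get("tokenUsage")
            if isinstance(usage, dict):
                for field in TOKEN_FIELDS:
                    totals[field] += int(usage.get(field, 0) or 0)
            # Keep descending: an agent may have called the model several times.
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for child in value:
                walk(child)

    walk(node)
    return totals


# Hypothetical payload: two chat-model runs inside one execution.
execution = {
    "resultData": {
        "runData": {
            "OpenAI Chat Model": [
                {"data": {"ai_languageModel": [[{"json": {"tokenUsage": {
                    "promptTokens": 120, "completionTokens": 30, "totalTokens": 150}}}]]}},
                {"data": {"ai_languageModel": [[{"json": {"tokenUsage": {
                    "promptTokens": 200, "completionTokens": 50, "totalTokens": 250}}}]]}},
            ]
        }
    }
}
print(sum_token_usage(execution))
# {'promptTokens': 320, 'completionTokens': 80, 'totalTokens': 400}
```

Walking the tree rather than following a fixed path trades a little speed for robustness when the node name or nesting changes.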

I copied this workflow as-is into the same version (1.84.3) of callin.io (community) and my output doesn’t have that part (tokenUsage) at all. Is there something you have enabled in your settings for your actual OpenAI account to make that appear in the response?

The information resides within the OpenAI Chat Model node, not the AI Agent node.

Did you check that specific node?

That's precisely the issue. The data isn't available in the AI Agent's output, and I'm unsure how to access it from the chat model node.


Ah, okay. Thanks for the clarification. It's a bit more frustrating when you know something exists but you're unable to access it.

For anyone who wants to try this or build on the idea to see if it can be made to work: it looked promising (a document was inserted), but the token usage data itself was not stored in it.

Does the agent have access to the subnode token usage data? If you inquire, will it respond?

I'm not sure. I just thought it would be worth exploring. I would expect that if anything within the workflow has access to the tokenUsage information from the model, it would be the agent.

However, it would be an unusual interaction. What I attempted essentially asks the AI to hand tool-usage instructions back to the agent, relying on it to understand callin.io internals, the MongoDB client tool, and itself well enough to create a document, populate it, and insert it. It did "understand" the MongoDB part, but I was only speculating on whether it would understand enough to tell the agent how to retrieve the tokenUsage statistics. It was probably a fruitless effort.

Furthermore, putting that into the System Message triggers another "tool usage" call to the AI, which consumes additional tokens, so the act of observing the count changes it.

Has anyone tried querying this through the API directly on openai.com?
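Querying OpenAI directly is possible through its organization-level Usage API, but it reports totals per time bucket (optionally grouped by project or API key), not per workflow execution. The sketch below assumes the completions usage endpoint at `/v1/organization/usage/completions`, an admin API key, the `start_time` (Unix seconds) and `bucket_width` query parameters, and a response shaped as `data[].results[]` with `input_tokens` / `output_tokens`; verify all of these against OpenAI's current documentation before relying on it.

```python
import urllib.parse
import urllib.request
from typing import Any, Dict

# Assumed endpoint of OpenAI's organization Usage API; check the current docs.
USAGE_URL = "https://api.openai.com/v1/organization/usage/completions"


def build_usage_request(admin_key: str, start_time: int,
                        bucket_width: str = "1d") -> urllib.request.Request:
    """Build (but do not send) a GET request for completion usage since `start_time`."""
    query = urllib.parse.urlencode({"start_time": start_time, "bucket_width": bucket_width})
    return urllib.request.Request(
        f"{USAGE_URL}?{query}",
        headers={"Authorization": f"Bearer {admin_key}"},
    )


def sum_usage_page(page: Dict[str, Any]) -> Dict[str, int]:
    """Sum input/output tokens over every bucket and result in one response page."""
    totals = {"input_tokens": 0, "output_tokens": 0}
    for bucket in page.get("data", []):
        for result in bucket.get("results", []):
            for key in totals:
                totals[key] += int(result.get(key, 0) or 0)
    return totals
```

To use it, pass the request to `urllib.request.urlopen`, decode the JSON body, and feed it to `sum_usage_page`; follow the `next_page` cursor if the response says there is more. Because the numbers are account-wide, attributing them to individual users would require a separate API key or project per user.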

Hi there!

Is this what you were looking for?


Hi

I'm confident you can retrieve this information from the underlying tables.

Here's a workflow that was recently shared, which is likely based on that approach:

Reg,

J.

Unfortunately, that's an estimator, not actual data extraction from the workflow.

From what I can see, it retrieves the text, the AI model, and sends it to an external service to estimate the cost. Very clever, but still a bit cumbersome.
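For comparison, an estimator of that kind boils down to approximating the token count of the text and multiplying by a per-token price. This sketch uses the rough rule of thumb of about four characters per token for English text instead of a real tokenizer (such as OpenAI's `tiktoken`), and the prices are placeholder values, not actual OpenAI pricing. Both simplifications are why estimated figures drift from what the model really reports in `tokenUsage`.

```python
import math

# Placeholder prices in USD per 1M tokens; NOT real pricing, substitute your model's rates.
PRICE_PER_MILLION = {"input": 0.50, "output": 1.50}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate (~4 characters per token for English); not a tokenizer."""
    return math.ceil(len(text) / chars_per_token) if text else 0


def estimate_cost(prompt: str, completion: str) -> float:
    """Approximate cost of one call from its prompt and completion text."""
    input_tokens = estimate_tokens(prompt)
    output_tokens = estimate_tokens(completion)
    return (input_tokens * PRICE_PER_MILLION["input"]
            + output_tokens * PRICE_PER_MILLION["output"]) / 1_000_000
```

Note that this only sees the text you pass in; hidden system prompts, tool definitions and intermediate agent steps are all billed but not counted here.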

Just wondering, what's the practical application for this? Is the idea to handle expenses for unusual cases?

Hi,

Potential use cases for a solution like this:

- Cost management (internal/client-facing)

- Security and abuse mitigation.

- Alerting: similar to the above points.

- Performance / debugging / monitoring: Analyzing response time per workflow versus tokens utilized.

- Unified solution: I was previously exploring OpenAI stats to integrate into a Grafana dashboard, but this approach could encompass multiple model providers.
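Several of these use cases (cost management, alerting, tokens versus response time) come down to the same aggregation once per-call usage is being logged. A minimal sketch, assuming a hypothetical `UsageRecord` that you would fill from the chat model node's `tokenUsage` plus the measured duration; the record shape, workflow names and budget are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class UsageRecord:
    """One logged LLM call (hypothetical shape, not a callin.io type)."""
    workflow: str
    total_tokens: int
    duration_s: float


def summarize(records: Iterable[UsageRecord]) -> Dict[str, Dict[str, float]]:
    """Total tokens, total time and tokens per second for each workflow."""
    tokens: Dict[str, int] = defaultdict(int)
    seconds: Dict[str, float] = defaultdict(float)
    for r in records:
        tokens[r.workflow] += r.total_tokens
        seconds[r.workflow] += r.duration_s
    return {
        wf: {
            "tokens": tokens[wf],
            "seconds": seconds[wf],
            "tokens_per_s": tokens[wf] / seconds[wf] if seconds[wf] > 0 else 0.0,
        }
        for wf in tokens
    }


def over_budget(summary: Dict[str, Dict[str, float]], limit: int) -> List[str]:
    """Workflows whose token total exceeds `limit` (candidates for an alert)."""
    return sorted(wf for wf, stats in summary.items() if stats["tokens"] > limit)
```

The same summary could be exported as metrics to a dashboard such as Grafana, independent of which model provider produced the tokens.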

I'm confident there are even more possibilities.

I run callin.io as the backend for a SaaS project. When our users interact with our agents, I want to record their token consumption so I can deduct it from their token balance.
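The deduction step itself can be sketched as a small ledger. This is a hypothetical in-memory version (a real backend would do this inside a database transaction), assuming you charge each call's `totalTokens` once the model has answered. Because the tokens are only known after the call has run, `charge` lets the balance go negative and `has_credit` is checked before the next request instead.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TokenLedger:
    """Hypothetical per-user token balances; not part of callin.io."""
    balances: Dict[str, int] = field(default_factory=dict)

    def top_up(self, user_id: str, tokens: int) -> None:
        """Add purchased tokens to a user's balance."""
        self.balances[user_id] = self.balances.get(user_id, 0) + tokens

    def has_credit(self, user_id: str) -> bool:
        """Check before calling the agent: block users with no tokens left."""
        return self.balances.get(user_id, 0) > 0

    def charge(self, user_id: str, total_tokens: int) -> int:
        """Deduct a finished call's totalTokens; may go negative since the call already ran."""
        if total_tokens < 0:
            raise ValueError("token count cannot be negative")
        self.balances[user_id] = self.balances.get(user_id, 0) - total_tokens
        return self.balances[user_id]
```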

For now, our workaround is to use the OpenAI nodes instead of the AI Agent node.
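When switching between node types, usage can arrive in two shapes: the OpenAI Chat Completions API reports a `usage` object with snake_case `prompt_tokens` / `completion_tokens` / `total_tokens`, while the chat model sub-node's `tokenUsage` discussed in this thread uses camelCase names. A small normalizer lets one billing step accept either. Whether a given node passes the API's `usage` through unchanged is an assumption to check against your own node output.

```python
from typing import Any, Dict, Optional


def normalize_usage(item: Dict[str, Any]) -> Optional[Dict[str, int]]:
    """Return {'prompt', 'completion', 'total'} from either usage shape, or None if absent."""
    if isinstance(item.get("usage"), dict):  # OpenAI API style (snake_case)
        u = item["usage"]
        prompt, completion = u.get("prompt_tokens", 0), u.get("completion_tokens", 0)
        total = u.get("total_tokens", prompt + completion)
    elif isinstance(item.get("tokenUsage"), dict):  # chat model sub-node style (camelCase)
        u = item["tokenUsage"]
        prompt, completion = u.get("promptTokens", 0), u.get("completionTokens", 0)
        total = u.get("totalTokens", prompt + completion)
    else:
        return None
    return {"prompt": int(prompt), "completion": int(completion), "total": int(total)}
```

If the total is missing, it falls back to prompt plus completion tokens.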

However, we will soon require the AI Agent node as well.