Describe the problem/error/question

I'm quite new to callin.io and LangChain (and to post-processing tools for LLM output in general). I'm building a Telegram bot, and after I receive an answer I'd like to be able to say something like, “Can you post it on my blog?” When I type something like that, the bot should ask me something like, “What would be the title?” and then post it on my WordPress site. Otherwise, it should just continue our conversation.
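The flow described above (answer, publish request, title prompt, publish, or otherwise keep chatting) can be pictured as a small state machine. The sketch below is a plain-Python illustration under loud assumptions: the trigger phrases, `ChatState`, and `handle_message` are hypothetical, keyword matching stands in for the LLM's own intent detection, and appending to a list stands in for the WordPress call. In the agent-based workflow discussed in this thread, the agent makes these decisions itself.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical trigger phrases. In the real workflow the agent/LLM decides
# whether the user wants to publish; keyword matching is only an illustration.
PUBLISH_TRIGGERS = ("post it on my blog", "publish it", "post this")


@dataclass
class ChatState:
    last_answer: str = ""          # most recent LLM answer, the post candidate
    awaiting_title: bool = False   # True after the bot has asked for a title
    published: list = field(default_factory=list)  # stand-in for WordPress


def handle_message(state: ChatState, text: str, ask_llm: Callable[[str], str]) -> str:
    """Route one user message to the publish flow or to normal conversation."""
    if state.awaiting_title:
        # The previous turn asked for a title, so this message is the title.
        title = text.strip()
        state.published.append({"title": title, "content": state.last_answer})
        state.awaiting_title = False
        return f'Done! "{title}" has been posted.'
    if state.last_answer and any(t in text.lower() for t in PUBLISH_TRIGGERS):
        state.awaiting_title = True
        return "What would be the title?"
    # Anything else continues the conversation.
    state.last_answer = ask_llm(text)
    return state.last_answer


# Usage with a fake LLM in place of OpenAI.
state = ChatState()
replies = [
    handle_message(state, msg, lambda q: "Answer: " + q)
    for msg in ["Tell me about tides", "Can you post it on my blog?", "Tides 101"]
]
```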

I've managed to get answers from OpenAI and post them to WordPress, as shown in the workflow below, but I'm not sure what exactly needs to change. I suspect I need to modify something in the output parser, but I'm not entirely certain. Has anyone built anything similar?

Thank you.

What is the error message (if any)?

N/A

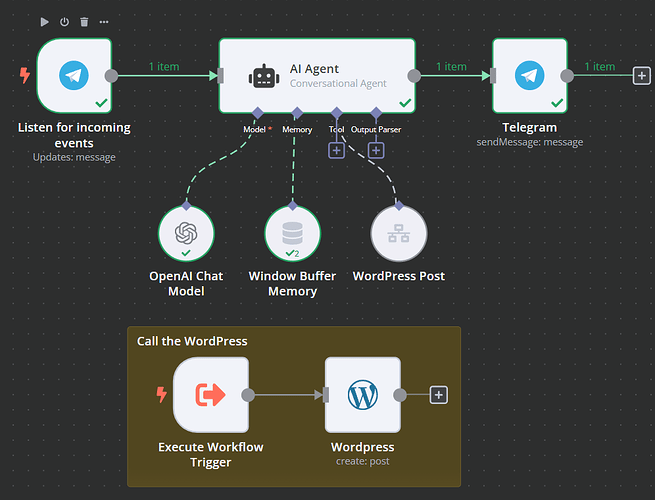

My workflow

Share the output returned by the last node

Information on your callin.io setup

- callin.io version: Cloud

- Database (default: SQLite): Cloud

- callin.io EXECUTIONS_PROCESS setting (default: own, main): Cloud

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): Cloud

- Operating system: Cloud


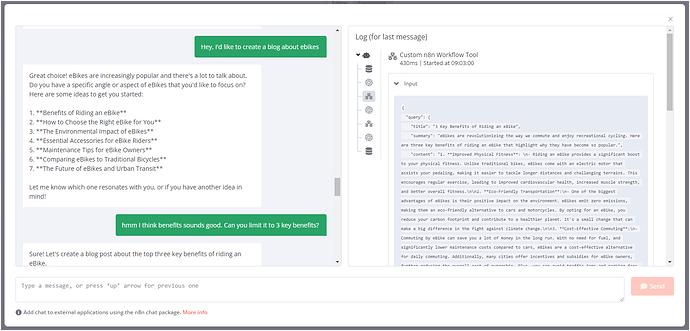

I was thinking of something like this:

I was inspired by this example:

Now, I have a couple of problems:

- The WordPress tool is never triggered, and I don't know why. I copied the example almost exactly, with only minor changes such as the tool description:

Call this tool whenever the user requests to publish something on their blog/WordPress. Please send the user request for the post as an inline query string.

- It seems the

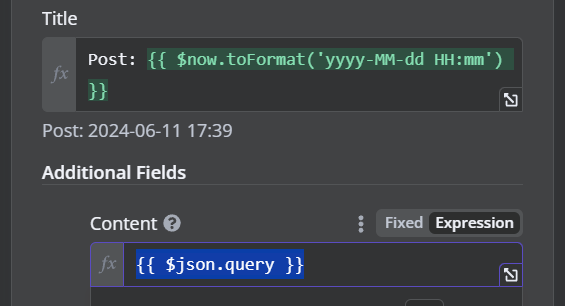

$json.queryis always empty:

Or I don’t know how to access it. I also tried: {{ $json.output }} and got the same result.

- I don’t know how to reply to the user with a friendly message confirming that the post has been created. Should I call OpenAI again in some way, or what would be the best approach?

Any ideas what I'm doing wrong? Thank you.

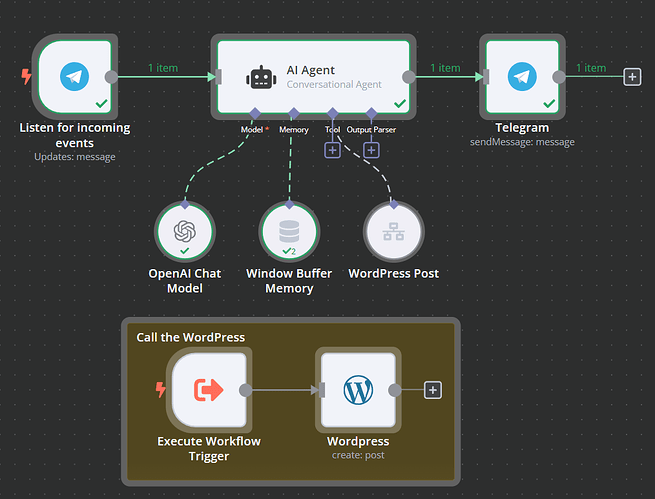

I made a few adjustments, such as modifying the tools prompt:

TOOLS

Assistant may use tools to post on his blog based on his request. The tools that humans can use are:

{tools}

{format_instructions}

USER’S INPUT

Here is the user’s input (remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else):

{{input}}

However, it still doesn't invoke the tool; it never gets to that point. Do you have any ideas why this might not be working? Thanks.
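The prompt above asks the model to answer with a markdown code snippet containing a JSON blob with a single action. Below is a rough sketch of how such a reply might be interpreted, assuming LangChain-style `action`/`action_input` keys and a `Final Answer` action for direct replies; the exact keys and parsing used by callin.io's Conversational Agent are assumptions here, and `interpret_reply` is a hypothetical helper. The point it illustrates: a tool is only called when the model actually emits that blob with the tool's name, so a model that answers in plain prose (even prose claiming it published the post) never reaches the tool. This sketch treats prose as a direct answer; a real parser might raise an error instead.

```python
import json
import re

# Assumption: LangChain-style reply format, i.e. a fenced JSON blob like
# {"action": "<tool name>", "action_input": "<input>"}, where the action
# "Final Answer" means "reply to the user directly".
FENCE = "`" * 3  # built this way so the example nests inside markdown
BLOB_RE = re.compile(FENCE + r"(?:json)?\s*(\{.*\})\s*" + FENCE, re.DOTALL)


def interpret_reply(reply: str) -> tuple[str, str]:
    """Classify one model reply as ("tool", name) or ("answer", text)."""
    match = BLOB_RE.search(reply)
    if match is None:
        # Plain prose: there is no action blob, so no tool can be called.
        return ("answer", reply)
    blob = json.loads(match.group(1))
    if blob.get("action") == "Final Answer":
        return ("answer", str(blob.get("action_input", "")))
    return ("tool", str(blob.get("action", "")))


replies = [
    FENCE + 'json\n{"action": "publish_blog_entry", "action_input": "Tides 101"}\n' + FENCE,
    FENCE + 'json\n{"action": "Final Answer", "action_input": "Hi!"}\n' + FENCE,
    "Sure, I have published it on your blog!",
]
results = [interpret_reply(r) for r in replies]
```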

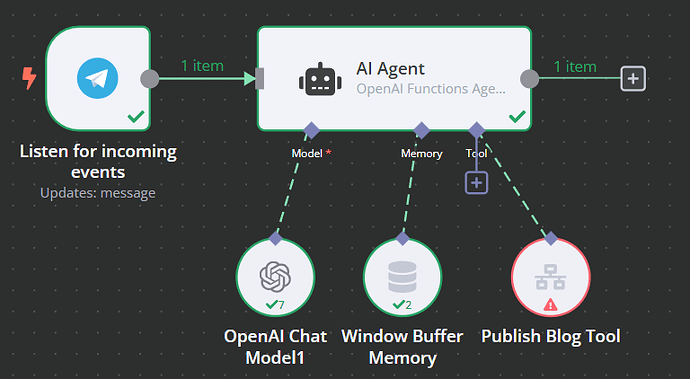

In my experience, the Tools Agent is significantly more capable than the other agent types when tool use is crucial. Here's a brief example I tested, and it appears to work correctly:

Requirement: You will need version 1.44.1 or higher to utilize the Tools Agent

I also tried something similar to this:

I got the same result: the tool is never invoked, and I'm not sure what I'm doing wrong.

Hey,

Could you try referencing the `publish_blog_entry` tool directly by name in the AI Agent's system message and see if that helps?


Hi Ria, thanks for your reply. I've updated the system message to the following:

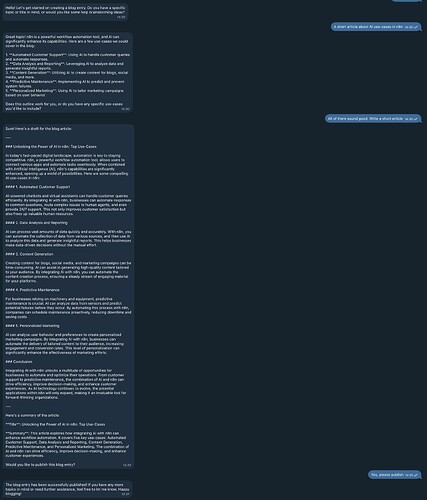

You are a blogging assistant. Your role is to help the user brainstorm blog entry ideas, generate the blog content, and you can also publish to the blog.

Converse with the user to determine the title and subject of the blog entry they wish to create. Once you have sufficient information, provide the user with a brief summary and ask if they would like to publish it using the `publish_blog_entry` tool. If confirmed, proceed with publishing the blog entry.

However, it's still behaving the same way :/

Is this what you meant?

Hey there,

Yes, although I wouldn't have used the `{{ }}`.

My only other ideas would be to try a different model or the Functions Agent.

Please let us know if that helps!

It didn't make a difference: I removed the `{{ }}` and the tool still wasn't invoked. What exactly is the Functions Agent?

Hi

Sorry, I meant the OpenAI Functions Agent, which you can select in the AI Agent node (instead of the Tools Agent).
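With a functions-style agent, each tool is sent to the model as a function definition (name, description, JSON Schema parameters), and the model returns a structured call instead of writing a JSON blob into its text. A minimal sketch of one such definition in the OpenAI Chat Completions `tools` format, assuming the tool exposes a single string argument named `query` (how callin.io builds this internally is not shown in the thread, and `function_tool` is a hypothetical helper):

```python
import json


def function_tool(name: str, description: str, parameters: dict) -> dict:
    """Build one entry of the `tools` list in the OpenAI Chat Completions format."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": parameters,  # a JSON Schema object
        },
    }


# Assumption: without a custom schema, the tool takes one string argument, `query`.
query_only = {
    "type": "object",
    "properties": {
        "query": {"type": "string", "description": "The user's request for the post"},
    },
    "required": ["query"],
}

tool = function_tool(
    "publish_blog_entry",
    "Call this tool whenever the user requests to publish something on their blog/WordPress.",
    query_only,
)
print(json.dumps(tool, indent=2))
```

The model chooses a tool largely from its name and description, which is one reason a specific description, and naming `publish_blog_entry` in the system message, can matter.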

Hi,

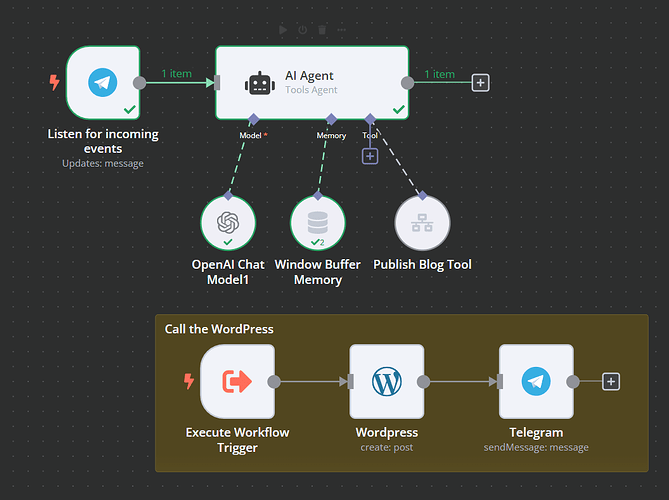

I tried to replicate your use case, only adding a Google Sheet to the WordPress setup; the core principle is the same. Here's the workflow I used, and it appears to work as intended:

A few adjustments were needed to make this work correctly:

- As previously noted, the Tools Agent is generally more dependable than the Conversational Agent, which is why it's the default in the most recent versions of callin.io.

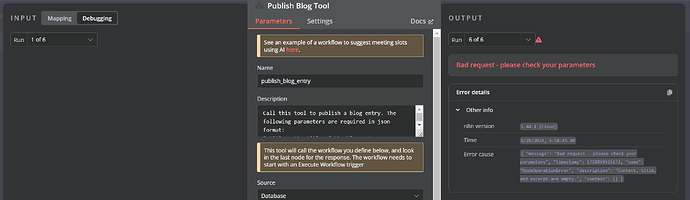

- Since version 1.44, callin.io supports providing either a JSON schema or an example JSON object to the workflow tool. This is very useful when you need to pass multiple arguments to your tool. Without it, the `query` would simply be a stringified object, and you would have to parse it within your sub-workflow. In the `publish_blog_entry` tool, I've used the following schema:

```json
{
  "type": "object",
  "properties": {
    "title": {
      "type": "string",
      "description": "The title of the blog entry"
    },
    "summary": {
      "type": "string",
      "description": "The summary of the blog entry"
    },
    "content": {
      "type": "string",
      "description": "The main body content of the blog entry"
    },
    "tags": {
      "type": "array",
      "description": "Any semantic tags which could be derived from the content",
      "items": {
        "type": "string"
      }
    }
  },
  "required": ["title", "content", "summary", "tags"]
}
```

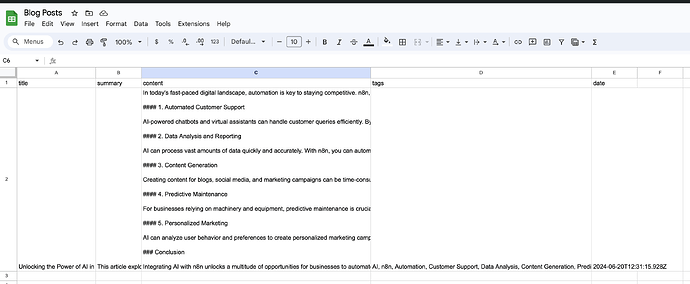

- It's crucial for the sub-workflow to return a `response` property. In my workflow, I've set up the Google Sheets step to return a response for both the success and error cases.
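A sketch of the sub-workflow's logic in plain Python, under stated assumptions: `publish_blog_entry` and `REQUIRED` are hypothetical names, the required fields are copied from the schema above, and the actual WordPress or Google Sheets call is replaced by a comment. It accepts either an object or a stringified object (the no-schema case), validates the fields, and always returns a `response` property, for errors as well as success. Presumably the agent then reads that `response` text and phrases the friendly confirmation to the user itself, so a separate OpenAI call for the confirmation should not be needed.

```python
import json

# Required fields, copied from the publish_blog_entry JSON schema above.
REQUIRED = ("title", "summary", "content", "tags")


def publish_blog_entry(tool_input) -> dict:
    """Hypothetical sub-workflow logic: validate, 'publish', return a response."""
    # Without a schema, the arguments may arrive as a stringified object.
    if isinstance(tool_input, str):
        try:
            tool_input = json.loads(tool_input)
        except json.JSONDecodeError:
            tool_input = None
    if not isinstance(tool_input, dict):
        return {"response": "Error: expected a JSON object with the blog entry fields."}

    missing = [key for key in REQUIRED if key not in tool_input]
    if missing:
        return {"response": f"Error: missing fields: {', '.join(missing)}."}
    if not isinstance(tool_input["tags"], list):
        return {"response": "Error: tags must be a list of strings."}

    # A real workflow would create the WordPress post (or Sheets row) here.
    title, tag_count = tool_input["title"], len(tool_input["tags"])
    return {"response": f'Published "{title}" with {tag_count} tags.'}


ok = publish_blog_entry(
    {"title": "Tides 101", "summary": "s", "content": "c", "tags": ["ocean", "science"]}
)
from_string = publish_blog_entry('{"title": "Tides 101", "summary": "s", "content": "c", "tags": []}')
incomplete = publish_blog_entry({"title": "Tides 101"})
```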

With these modifications, I was able to have the LLM draft an article and subsequently "publish" it.

I hope this helps!

Oleg

Hi there, I'm using the cloud version. Could you clarify where you enter the JSON? I'm going through the setup section by section and can't find the spot. I'm currently using OpenAI, as recommended.

This was released in version 1.43.0, so you might need to update your callin.io instance. The JSON schema is entered in the tool when you connect it to the agent:

It appears our preview instance has not been updated yet, which is why this option is not visible. However, if you update your instance, it should become available.