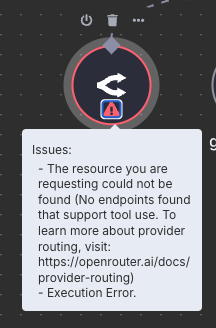

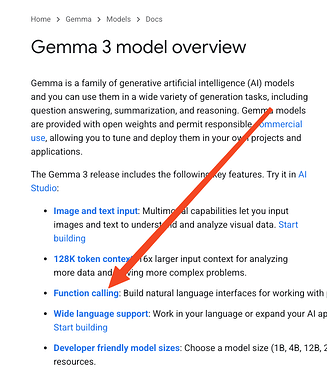

Other models that support tool calling work correctly in callin.io (for example, GPT-4.1 mini), but Gemma 3 does not, even though it is documented as supporting tool calling.

Source: Gemma 3 model overview | Google AI for Developers

AND

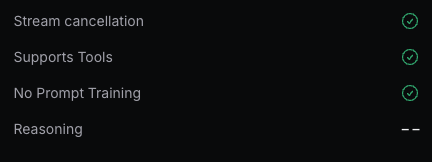

Source: OpenRouter

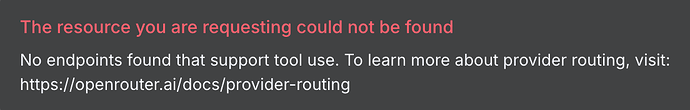

I was also unable to test it with the Gemini model node.

Is this a Gemma limitation, an OpenRouter limitation, or a callin.io limitation?

Also, are there any workarounds?

- callin.io Version: 1.95.2

- Platform: docker (self-hosted)

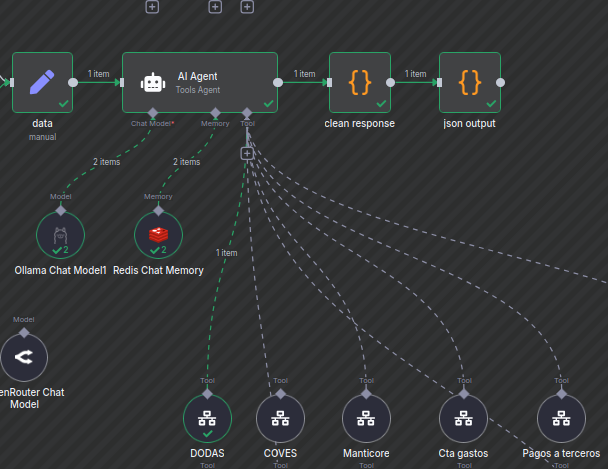

Hello! Function calling does work with Gemma 3, but the main challenge is the prompting. I'm currently using Gemma 3 for RAG, and it works, though not as reliably as I'd hoped. It does use the tools I've provided, but occasionally it seems to ignore them. I suspect the prompt needs more refinement. I must admit, I prefer working with Claude Sonnet 3.7.

Interesting

Does it also work for you via OpenRouter, or just Ollama?

Both. I've noticed that Gemma 3 doesn't support a separate "system prompt", so you'll need to put both the system and user prompts into the user prompt ("prompt user"). Structure your prompt like this:

<start_of_turn>user

{{ Prompt }}<end_of_turn>

<start_of_turn>model

It looks a bit unusual, but that's how Gemma works. Once I started using this format for my prompt, it began using the tools consistently, which is exactly what I was aiming for.
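If you build the prompt in code rather than by hand, the same idea can be sketched as a small helper. This is only an illustration: `build_gemma_prompt` and its parameter names are made up here, and it assumes Gemma's documented turn markers, where a turn is opened with `<start_of_turn>` and closed with `<end_of_turn>`. The system instructions are simply folded into the single user turn.

```python
def build_gemma_prompt(system_instructions: str, user_message: str) -> str:
    """Fold system instructions and the user message into one Gemma user turn.

    Hypothetical helper: Gemma 3 has no separate system role, so the
    instructions are placed at the start of the user turn.
    """
    # Keep only the non-empty parts, so an empty system prompt adds no blank lines.
    parts = [p.strip() for p in (system_instructions, user_message) if p and p.strip()]
    body = "\n\n".join(parts)
    # Close the user turn and open the model turn so the model starts answering.
    return f"<start_of_turn>user\n{body}<end_of_turn>\n<start_of_turn>model\n"
```

For example, `build_gemma_prompt("Always use the search tool for facts.", "Who maintains Gemma?")` gives one user turn containing both texts, followed by an open model turn. Whether your provider applies this template for you is worth checking first, since wrapping the prompt twice would not help.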

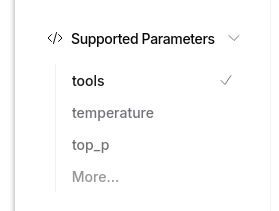

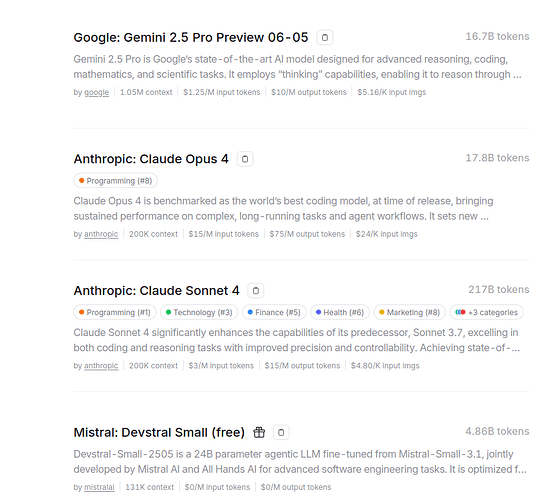

This is quite normal, as not all models available on OpenRouter offer tool support. It's advisable to filter for tool-compatible models directly on their website.

You can consult this list to find models that do support tools: Open Router Models with Tool Support
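If you'd rather check this programmatically, here is a rough sketch. It assumes OpenRouter's public models endpoint returns a JSON object with a `data` list, and that each entry has an `id` and a `supported_parameters` list containing `"tools"` when tool calling is available. Those field names are my assumption about the response shape, so verify them against the current API docs. The function names are just for illustration.

```python
import json
from urllib.request import urlopen

# Assumed endpoint; check the OpenRouter API docs before relying on it.
MODELS_URL = "https://openrouter.ai/api/v1/models"


def tool_capable(models: list[dict]) -> list[str]:
    """Return the sorted ids of models that list "tools" as a supported parameter."""
    return sorted(
        m["id"]
        for m in models
        # Treat a missing or null field as "no tool support".
        if "tools" in (m.get("supported_parameters") or [])
    )


def fetch_models(url: str = MODELS_URL) -> list[dict]:
    """Download the model list (makes a network call; assumes a top-level "data" key)."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp).get("data", [])
```

Calling `tool_capable(fetch_models())` would then give you the ids to look for, and you can check whether the exact Gemma 3 variant you selected is among them.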

I'm still unclear why Gemma 3 supports tool calling, yet that support isn't available through OpenRouter.

Thanks for all the assistance!