Describe the problem/error/question

I am developing an AI Agent that utilizes Postgres for its memory storage, with a context window set to 15 messages. The Agent executes SQL queries against a Postgres database that contains tables for products and additional product details. In a typical interaction, when a user asks, “Find me a nice phone,” the Agent successfully retrieves a product and displays its information. However, when the user follows up with “I like it. Give me additional information,” the Agent fails to retain the previously retrieved product ID. This prevents it from fetching the corresponding additional information.

I am seeking guidance on best practices for storing session variables, such as product IDs, in these situations to maintain conversational context across multiple messages.

What is the error message (if any)?

There isn't a specific error message. The issue is that the product ID is lost between conversational turns, which leads to incomplete data retrieval.

Share the output returned by the last node

The output from the last node does not contain the expected product ID, preventing the Agent from querying the database for additional product information.

Information on your callin.io setup

- callin.io version: 1.82.3

- Database (default: SQLite): Postgres

- callin.io EXECUTIONS_PROCESS setting (default: own, main): own

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): Docker

- Operating system: Linux (Docker container)

That's an interesting point. I've run into this behavior before but haven't yet tested a solution in practice.

I'll offer a few suggestions, but I'm eager to hear other community members' thoughts:

1. A tool to store retrieved data alongside the memory

If you're utilizing external storage for memory, such as Redis or Postgres, you can modify the entries to include additional information.

The AI can be instructed to always store the retrieved information before responding to the user.

Alternatively, you could prompt it to save a summary containing the key details most relevant to the user's question. This approach allows for storing less data, resulting in a shorter prompt.
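A minimal sketch of suggestion 1, assuming a hypothetical `session_facts` table kept next to the chat-memory table and keyed by session ID. Python's built-in `sqlite3` stands in for Postgres so the sketch runs anywhere. The `INSERT ... ON CONFLICT ... DO UPDATE` upsert is also valid PostgreSQL, although a driver such as psycopg uses `%s` placeholders instead of `?`. The table, the function names, and the product IDs are illustrative only:

```python
import sqlite3

# sqlite3 is a stand-in for Postgres here; the table name and schema are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE session_facts (
        session_id TEXT NOT NULL,
        key        TEXT NOT NULL,
        value      TEXT NOT NULL,
        PRIMARY KEY (session_id, key)
    )
    """
)


def save_fact(session_id: str, key: str, value: str) -> None:
    """Store (or overwrite) one fact for a session, e.g. the last product_id shown."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            """
            INSERT INTO session_facts (session_id, key, value)
            VALUES (?, ?, ?)
            ON CONFLICT (session_id, key) DO UPDATE SET value = excluded.value
            """,
            (session_id, key, value),
        )


def load_facts(session_id: str) -> dict[str, str]:
    """Return every stored fact for the session as a key -> value dict."""
    rows = conn.execute(
        "SELECT key, value FROM session_facts WHERE session_id = ? ORDER BY key",
        (session_id,),
    )
    return dict(rows.fetchall())


def build_system_prompt(base: str, session_id: str) -> str:
    """Append stored facts so they survive after the 15-message window drops older turns."""
    facts = load_facts(session_id)
    if not facts:
        return base
    lines = "\n".join(f"- {k}: {v}" for k, v in facts.items())
    return f"{base}\n\nKnown facts for this session:\n{lines}"


save_fact("chat-42", "product_id", "1017")
save_fact("chat-42", "product_id", "2044")  # user moved on to another phone
```

Saving a second `product_id` for the same session overwrites the first, so the prompt always carries the most recently discussed product rather than a growing list.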

2. Use temporary storage

Instead of using the same long-term session storage, you can employ Redis to temporarily store data retrieved during a session.

You can then inject this information into the AI's context (the prompt) on every turn.

This is quite similar to the first suggestion. The primary distinction is that you would store the data separately and only on a temporary basis, helping to maintain cleaner chat session datasets.
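For suggestion 2, here is a rough sketch of the temporary store. A small in-memory class mimics Redis keys written with an expiry (with redis-py, each write would be something like `r.set(key, value, ex=ttl_seconds)`), and a helper prepends the still-valid entries to the user's message before it reaches the agent. The `session:<id>:<field>` key layout and the helper names are assumptions for illustration:

```python
import time
from typing import Callable


class TempSessionStore:
    """In-memory stand-in for Redis keys set with a TTL (hypothetical key layout)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data: dict[str, tuple[str, float]] = {}

    def set(self, session_id: str, field: str, value: str) -> None:
        key = f"session:{session_id}:{field}"
        self._data[key] = (value, self.clock() + self.ttl)

    def get_all(self, session_id: str) -> dict[str, str]:
        prefix = f"session:{session_id}:"
        now = self.clock()
        result: dict[str, str] = {}
        for key, (value, expires_at) in list(self._data.items()):
            if expires_at <= now:
                del self._data[key]  # lazily evict expired entries, as Redis would on access
            elif key.startswith(prefix):
                result[key[len(prefix):]] = value
        return result


def with_session_context(user_message: str, store: TempSessionStore, session_id: str) -> str:
    """Prefix the user's message with any unexpired session data for this session."""
    ctx = store.get_all(session_id)
    if not ctx:
        return user_message
    facts = ", ".join(f"{k}={v}" for k, v in sorted(ctx.items()))
    return f"[session context: {facts}]\n{user_message}"
```

For example, `TempSessionStore(ttl_seconds=1800)` would keep a product ID for 30 minutes after it was last written; once it expires, the chat history in Postgres is untouched and simply stops receiving the extra context.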



Great ideas! I was considering either an enhanced memory node or a new connector type for the AI Agent node.

• Enhanced Memory Node: For example, the current Postgres Chat Memory only allows us to specify the table for persisting chats. It would be beneficial if we could define a session object that stores various information between messages.

• New Connector Type: Presently, we have Chat Model, Memory, and Tool connectors. If a Session connector were available, allowing us to attach a tool to manage the session object, it could provide even greater flexibility.

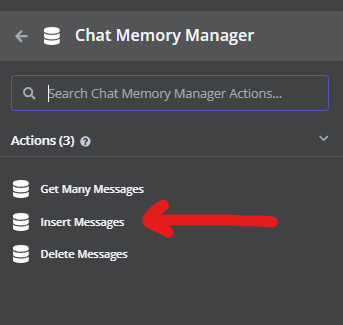

Understood. In that scenario, you can use the “Call callin.io workflow tool” node.

If you convert your tool into a sub-workflow and invoke it using the “Call callin.io workflow tool” node, you can implement logic within it to save the tool's output as part of the chat session.
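The idea of having the tool save its own output can be sketched as a wrapper. The hypothetical `search_products` function stands in for the product-query sub-workflow, and the hypothetical `remember_output` wrapper records the returned product IDs in per-session state before handing the results back to the agent. In callin.io, the equivalent logic would live inside the sub-workflow invoked by the tool node; this Python version only illustrates the flow, and the catalog data is made up:

```python
from typing import Any, Callable

# Hypothetical per-session state; in practice this would be the Postgres or Redis store.
SESSION_STATE: dict[str, dict[str, Any]] = {}


def search_products(query: str) -> list[dict[str, Any]]:
    """Hypothetical product-search tool standing in for the SQL query on the products table."""
    catalog = [
        {"product_id": 1017, "name": "Phone X", "category": "phone"},
        {"product_id": 2044, "name": "Laptop Y", "category": "laptop"},
    ]
    return [p for p in catalog if p["category"] in query.lower()]


def remember_output(
    tool: Callable[..., list[dict[str, Any]]],
) -> Callable[..., list[dict[str, Any]]]:
    """Wrap a tool so it saves the IDs it returned before the agent sees the result."""

    def wrapped(session_id: str, *args: Any, **kwargs: Any) -> list[dict[str, Any]]:
        results = tool(*args, **kwargs)
        if results:  # keep the previous IDs when a search comes back empty
            state = SESSION_STATE.setdefault(session_id, {})
            state["last_product_ids"] = [r["product_id"] for r in results]
        return results

    return wrapped


search_products_tool = remember_output(search_products)
```

The design choice here is that saving happens deterministically inside the tool, so it does not depend on the model remembering to call a separate "store" tool.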

An alternative approach would be to create a sub-workflow named “Store tool information,” add it as a tool, and instruct the AI Agent to always use it to save brief, relevant details it may need to refer back to later in the chat session.

For instance:

“Employ this tool to archive minor pieces of information retrieved from other tools, such as a product_id, ensuring that if the user inquires about it again, you already know which product they are referring to.”
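A rough sketch of the “Store tool information” tool, assuming an OpenAI-style function definition (the exact schema your agent setup expects may differ) that reuses the prompt wording above as its description. The handler name, the `NOTES` dictionary, and the 200-character cap are hypothetical; the cap simply keeps stored details as small as the prompt asks for:

```python
import json
from typing import Any

# Hypothetical tool definition in a generic JSON-Schema style.
STORE_TOOL = {
    "name": "store_tool_information",
    "description": (
        "Employ this tool to archive minor pieces of information retrieved from other tools, "
        "such as a product_id, ensuring that if the user inquires about it again, "
        "you already know which product they are referring to."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "key": {"type": "string", "description": "Short name, e.g. product_id"},
            "value": {"type": "string", "description": "Short value, e.g. 1017"},
        },
        "required": ["key", "value"],
    },
}

MAX_VALUE_LEN = 200  # hypothetical cap so notes stay cheap to re-inject into the prompt
NOTES: dict[str, dict[str, str]] = {}  # session_id -> {key: value}


def handle_store_tool(session_id: str, raw_arguments: str) -> str:
    """Run one call of the store tool; assumes arguments arrive as a JSON string."""
    args: dict[str, Any] = json.loads(raw_arguments)
    key = str(args["key"]).strip()
    value = str(args["value"]).strip()
    if not key or len(value) > MAX_VALUE_LEN:
        return json.dumps({"ok": False, "error": "key must be non-empty and value short"})
    NOTES.setdefault(session_id, {})[key] = value
    return json.dumps({"ok": True, "stored": {key: value}})
```

Returning a small JSON result lets the agent see whether the save worked, and rejecting long values nudges it toward storing IDs rather than whole product records.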

Will this resolve your issue?
