Describe the problem/error/question

When running a workflow that processes a large amount of data (about 200 PDF files and more than 10 OpenAI API calls), a single run takes about 2 hours to finish, because each OpenAI node execution takes around 10 minutes with the current splitting method. I frequently encounter "Connection lost" and "Unknown errors" during execution.
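The thread doesn't show how the PDFs are currently split. The following is a minimal sketch. It assumes the PDF text has already been extracted and that the goal is to keep each OpenAI request small enough to finish well within the request timeout. Under those assumptions, the text can be cut into size-bounded chunks that break at paragraph boundaries where possible. `split_into_chunks` and the `max_chars` budget are hypothetical, and a character count is only a rough stand-in for model tokens.

```python
def split_into_chunks(text: str, max_chars: int = 8000) -> list[str]:
    """Split text into chunks of at most max_chars characters, cutting at paragraph breaks when possible."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks: list[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        if end < len(text):
            # Look for the last blank line ("\n\n") inside the current window.
            cut = text.rfind("\n\n", start, end)
            if cut > start:
                end = cut + 2  # keep the paragraph break with the preceding chunk
        chunks.append(text[start:end])
        start = end
    return chunks


sample = "para one\n\npara two\n\npara three"
print(split_into_chunks(sample, max_chars=12))
# → ['para one\n\n', 'para two\n\n', 'para three']
```

Joining the chunks reproduces the original text exactly, so nothing is dropped. Each chunk would then go out as its own, shorter request. A sensible `max_chars` depends on the model and prompt, which the thread does not specify.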

What is the error message (if any)?

The connection was aborted, perhaps the server is offline [item 4]

Please share your workflow

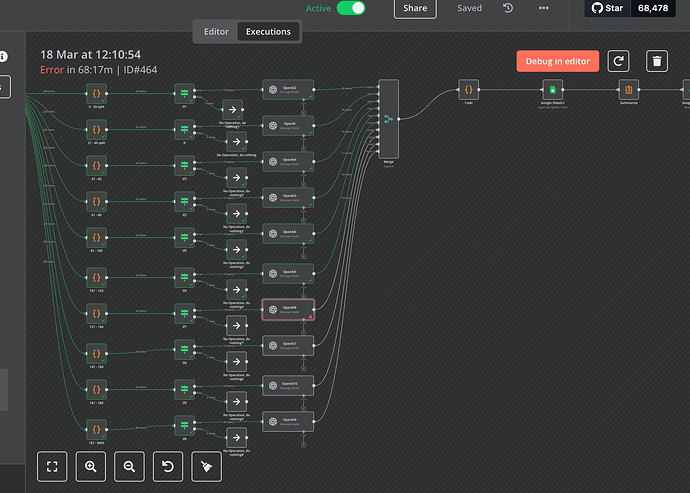

It's too large to share (over 34000 characters), but this is the problematic area.

Share the output returned by the last node

The connection was aborted, perhaps the server is offline [item 4]

After 68 minutes: (screenshot linked on Imgur)

Information on your callin.io setup

- callin.io version: 1.80 (I just upgraded to 1.83)

- Running callin.io via: callin.io Cloud

Based on the error message, the error appears to come from the OpenAI server rather than from your callin.io workflow. Could you share a screenshot of the specific error?

Here are the error details:

Error details (from OpenAI):

- Error code: ECONNABORTED
- Full message: timeout of 300000ms exceeded

Other info:

- Item Index: 7
- Node type: @callin.io/callin.io-nodes-langchain.openAi
- Node version: 1.8 (Latest)
- callin.io version: 1.83.2 (Cloud)
- Time: 3/19/2025, 12:59:46 PM

Stack trace:

    NodeApiError: The connection was aborted, perhaps the server is offline
        at ExecuteContext.requestWithAuthentication (/usr/local/lib/node_modules/callin.io/node_modules/callin.io-core/dist/execution-engine/node-execution-context/utils/request-helper-functions.js:991:19)
        at ExecuteContext.requestWithAuthentication (/usr/local/lib/node_modules/callin.io/node_modules/callin.io-core/dist/execution-engine/node-execution-context/utils/request-helper-functions.js:1147:20)
        at ExecuteContext.apiRequest (/usr/local/lib/node_modules/callin.io/node_modules/@callin.io/callin.io-nodes-langchain/dist/nodes/vendors/OpenAi/transport/index.js:22:12)
        at ExecuteContext.execute (/usr/local/lib/node_modules/callin.io/node_modules/@callin.io/callin.io-nodes-langchain/dist/nodes/vendors/OpenAi/actions/text/message.operation.js:230:21)
        at ExecuteContext.router (/usr/local/lib/node_modules/callin.io/node_modules/@callin.io/callin.io-nodes-langchain/dist/nodes/vendors/OpenAi/actions/router.js:75:34)
        at ExecuteContext.execute (/usr/local/lib/node_modules/callin.io/node_modules/@callin.io/callin.io-nodes-langchain/dist/nodes/vendors/OpenAi/OpenAi.node.js:16:16)
        at WorkflowExecute.runNode (/usr/local/lib/node_modules/callin.io/node_modules/callin.io-core/dist/execution-engine/workflow-execute.js:681:27)
        at /usr/local/lib/node_modules/callin.io/node_modules/callin.io-core/dist/execution-engine/workflow-execute.js:913:51
        at /usr/local/lib/node_modules/callin.io/node_modules/callin.io-core/dist/execution-engine/workflow-execute.js:1246:20

Based on that, it appears OpenAI did not respond within the 300000 ms (5-minute) timeout. My suspicion is that OpenAI detected an excessive number of requests from your callin.io instance's IP address and temporarily throttled it.
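If throttling is the cause (which, as stated, is only an assumption), the usual client-side mitigation is to retry failed requests with exponential backoff and jitter instead of retrying immediately. The sketch below is generic Python. It is not callin.io's or OpenAI's API: `call_with_backoff`, `TransientError`, and the default delays are hypothetical. Deciding which failures count as transient (for example ECONNABORTED or HTTP 429) is left to the caller. Backoff only helps with transient failures. If individual requests genuinely run longer than the 300000 ms timeout, they need to be made smaller instead.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. timeouts, HTTP 429)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 2.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        try:
            return fn()
        except TransientError:
            if attempt >= max_attempts:
                raise  # give up and surface the last error
            # The upper bound doubles each attempt (2s, 4s, 8s, ...) but never exceeds max_delay;
            # the actual wait is random in [0, bound] so parallel workers don't retry in lockstep.
            bound = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, bound))


# Usage: a fake request that fails twice before succeeding.
calls = {"n": 0}


def flaky_request() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("timeout of 300000ms exceeded")
    return "ok"


delays: list[float] = []
result = call_with_backoff(flaky_request, sleep=delays.append)  # record waits instead of sleeping
print(result, calls["n"], len(delays))  # ok 3 2
```

Passing `sleep` as a parameter keeps the example fast and testable. In real use, the default `time.sleep` applies.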

Any solutions?


I would suggest first checking with OpenAI support whether that is actually the problem. Everything I've said above is an assumption: I can't tell why their server timed out, and only they can. If they confirm it, we can work toward a resolution. If OpenAI reports a different problem, we will need to adjust our approach.

In the end, I self-hosted callin.io, and that resolved the issue. I believe the callin.io Cloud version enforces a timeout that can be disabled in a self-hosted deployment.
