Describe the problem/error/question

I'm looking to understand how to utilize AI tools, and I suspect my limited coding knowledge might be a contributing factor to my difficulties. However, I need a clear example to grasp how these tools function.

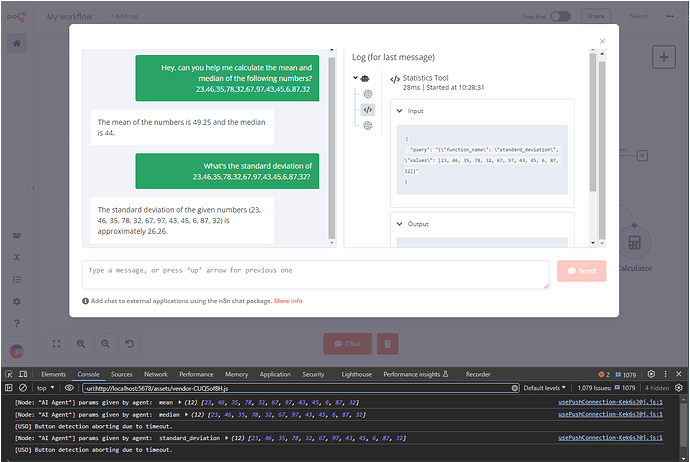

I attempted to perform basic calculations using the "calculator tool" but found it to be limited. When I tried to create a custom tool, the behavior differed, and it didn't work as expected. The agent calls the calculator tool with a syntax error when a calculation is required. In contrast, the custom tool only sends a "query" and doesn't adhere to the instructions in its description.

I've experimented with Ollama models such as llama3:8b, llama3:70b, and phi3.

I haven't found any examples or information on the correct usage.

Please share your workflow

This is the workflow I'm using to learn and experiment with AI. Perhaps it will provide more clarity on my issue:

Share the output returned by the last node

Wrong output type returned

The response property should be a string, but it is an object
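The error message suggests the custom tool handed back a structured object where the agent expected plain text. A minimal sketch of the usual fix, written in generic Python rather than against n8n's own Code node API, is to serialize the result before returning it. The helper name `to_tool_response` is hypothetical.

```python
import json

def to_tool_response(result):
    """Return a string regardless of what the tool computed (hypothetical helper)."""
    if isinstance(result, str):
        return result
    # Serialize dicts, lists, numbers, etc. so the agent receives plain text.
    return json.dumps(result)

print(to_tool_response({"mean": 2.5}))
```

Whether JSON text or a short sentence works better as the returned string likely depends on the model, so treat the serialization format as a choice to experiment with.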

Information on your n8n setup

- n8n version: 1.42.1

- Database (default: SQLite): default

- n8n EXECUTIONS_PROCESS setting (default: own, main): default

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker

- Operating system: Debian 12

Sure, I can try to explain.

1. Help Agents understand and pick their tools.

I've noticed a common pitfall where we focus too much on the "how" of a tool rather than its "why." Let's roleplay for a moment: imagine you're an agent and your available tools are like the entire AWS services catalog (over 100 services!). You're asked to host a WordPress site – daunting, right? Where would you even begin? I believe you'd want to start with very clear descriptions of the problems each service (tool) solves, rather than getting bogged down in the internal workings of each. This is how I view agent tools: the clearer the tool's purpose, the more likely the agent will identify it as the best option for achieving its goal.
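To make the idea concrete, here is a small sketch of how a purpose-first tool catalog might look from the agent's side. It is plain Python, not n8n's API: `ToolSpec` and `render_tool_catalog` are hypothetical names, the tool names are borrowed from the examples in this post, and the Notion description is an illustrative assumption.

```python
from dataclasses import dataclass

@dataclass
class ToolSpec:
    name: str         # what the agent sees when choosing a tool
    description: str  # the problem the tool solves, not how it works

def render_tool_catalog(tools):
    """Format tools the way they might be listed in an agent's prompt."""
    return "\n".join(f"- {tool.name}: {tool.description}" for tool in tools)

TOOLS = [
    ToolSpec("get_mean_median_or_standard_deviation",
             "Call this tool to calculate the mean"),
    # Assumed description, for illustration only.
    ToolSpec("create_notion_task",
             "Call this tool to create a task in Notion"),
]

print(render_tool_catalog(TOOLS))
```

Reading the rendered catalog as if you were the agent is a quick check: if you can't tell which tool to pick from the names and descriptions alone, the model probably can't either.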

- Tool names should be descriptive.

Good: "get_mean_median_or_standard_deviation", "simplify_quad_equation", "create_notion_task"

Bad: "MathStatistics", "myTool", "UploadServicesProcess"

- Tool descriptions should outline their purpose.

Good: "Call this tool to calculate the mean", "Call this tool to send a message", "This tool creates group calendar events"

Bad: "This tool outputs json...", "You must split messages into an array...", "This tool calls 3 functions..."

2. Agents don’t execute the tools; they invoke them.

Think of tool invocation like calling a third-party API, such as AWS S3. When you want to put an object into S3, you don't necessarily need to know the internal processes or the specific datacenter used. You just need to know how to provide the file to the API and confirm its successful upload. It's the same with agents; understanding the intricacies of how you calculate standard deviation isn't useful to them, but knowing which parameters to pass is crucial!
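As a rough sketch of that API-like contract, the tool below accepts a request with two declared parameters and rejects anything else, such as a bare "query". It is generic Python, not n8n code. The field names `function_name` and `values` come from the schema example in this post; treating `values` as a list of numbers, and the function and helper names, are assumptions.

```python
import statistics

# Assumed set of operations the tool exposes to the agent.
FUNCTIONS = {
    "mean": statistics.mean,
    "median": statistics.median,
    "standard_deviation": statistics.stdev,
}

def invoke_statistics_tool(request):
    """Validate the agent's request, run the calculation, return a string."""
    name = request.get("function_name")
    values = request.get("values")
    if not isinstance(name, str) or name not in FUNCTIONS:
        return f"Error: function_name must be one of {sorted(FUNCTIONS)}"
    if (not isinstance(values, list) or len(values) < 2
            or not all(isinstance(v, (int, float)) for v in values)):
        return "Error: values must be a list of at least two numbers"
    # The agent only sees the result, never how it was computed.
    return str(FUNCTIONS[name](values))

print(invoke_statistics_tool({"function_name": "mean", "values": [1, 2, 3, 4]}))
print(invoke_statistics_tool({"query": "mean of 1 2 3 4"}))
```

Returning a readable error string instead of raising gives the agent a chance to correct its parameters and try again.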

- Define API-like request parameters for the tool.

Good: Use the Input schema to define request parameters (version 1.43+).

Good: Alternatively, define them in the tool description: { "function_name": { "type": "string" }, "values": { ... } }

Bad: "Call method sin() to perform sine operation", "if this ..., then do that ..."

3. If your tools are still not being invoked, it might be your LLM

Finally, the LLM behind the agent significantly affects performance. I've found that more powerful models recognize tools and generate parameters more reliably, which improves overall "reasoning." I suspect that as tools become more numerous or complex, more context will be required to prevent hallucinations.

- Keep tools simple and sparse.

Prefer state-of-the-art models with larger context windows.

Different models may respond better to different prompt styles. Don't expect to copy and paste the same prompt across models.

Too many tools? Consider splitting into multiple single-domain agents.

Too complex? Break down tools into smaller, more manageable tools.
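One way to picture the "single-domain agents" suggestion is as a grouping step: tag each tool with a domain and give each agent only its own group. The sketch below is plain Python with hypothetical names; the domain labels are assumptions, and the tool names are reused from earlier in this post.

```python
from collections import defaultdict

# (domain, tool name) pairs; the domain labels are assumed for illustration.
TOOLS = [
    ("math", "get_mean_median_or_standard_deviation"),
    ("math", "simplify_quad_equation"),
    ("productivity", "create_notion_task"),
]

def group_tools_by_domain(tools):
    """Return one tool list per domain, i.e. one list per single-domain agent."""
    groups = defaultdict(list)
    for domain, name in tools:
        groups[domain].append(name)
    return dict(groups)

for domain, names in group_tools_by_domain(TOOLS).items():
    print(f"{domain} agent tools: {names}")
```

Each agent then has a shorter catalog to reason over, which should leave more of the context window for the actual task.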

Conclusion

Understanding AI tools is essential for building effective AI Agents, and once you grasp it, you can achieve many exciting things with them. callin.io makes it incredibly easy for anyone to build and test their own agents and tools, and the rapid iteration cycles are why I'm such a big fan!

Cheers,

Jim

Follow me on LinkedIn or Twitter

(Psst, if anyone else found this post useful… I help teams and individuals learn and build AI workflows! Let me know if I can help!)

Demo

Thanks a lot for the explanation! Best regards!!
