I'm using the AI Agent in callin.io to analyze and categorize error data, but execution is very slow. It currently takes over 2 minutes to process a small dataset of 5-10 entries, which seems excessive.

I was anticipating a much quicker response, ideally under 20 seconds, especially given the small data volume. I've experimented with different batch sizes, but the AI Agent continues to perform slowly.

Below is a sample dataset (not my actual data) that reflects my use case and workflow:

Input:

[
  {
    "title": "Printed Brochures",
    "data": [
      {
        "id": "error-abc123",
        "job": "job-111111",
        "comment": "Brochures arrived with torn pages."
      },
      {
        "id": "error-def456",
        "job": "job-222222",
        "comment": "Pages were printed in the wrong order."
      },
      {
        "id": "error-ghi789",
        "job": "job-333333",
        "comment": "The brochure cover was printed in the wrong color."
      }
    ]
  },
  {
    "title": "Flyers",
    "data": [
      {
        "id": "error-jkl987",
        "job": "job-444444",
        "comment": "Flyers were misaligned during printing."
      },
      {
        "id": "error-mno654",
        "job": "job-555555",
        "comment": "Flyers were cut incorrectly, leaving uneven edges."
      },
      {
        "id": "error-pqr321",
        "job": "job-666666",
        "comment": "The wrong font was used in the printed text."
      }
    ]
  }
]

Output:

[
  {
    "title": "Printed Brochures",
    "errors": [
      {
        "errorType": "Physical Damage",
        "summary": "Brochures arrived with torn or damaged pages.",
        "job": ["job-111111"],
        "error": ["error-abc123"]
      },
      {
        "errorType": "Print Order Issue",
        "summary": "Pages were printed in an incorrect sequence.",
        "job": ["job-222222"],
        "error": ["error-def456"]
      },
      {
        "errorType": "Color Mismatch",
        "summary": "The cover was printed in the wrong color.",
        "job": ["job-333333"],
        "error": ["error-ghi789"]
      }
    ]
  },
  {
    "title": "Flyers",
    "errors": [
      {
        "errorType": "Print Alignment Issue",
        "summary": "Flyers were misaligned during the printing process.",
        "job": ["job-444444"],
        "error": ["error-jkl987"]
      },
      {
        "errorType": "Cutting Issue",
        "summary": "Flyers were cut incorrectly, resulting in uneven edges.",
        "job": ["job-555555"],
        "error": ["error-mno654"]
      },
      {
        "errorType": "Font Error",
        "summary": "Incorrect font was used in the printed text.",
        "job": ["job-666666"],
        "error": ["error-pqr321"]
      }
    ]
  }
]

Prompt Used in the AI Agent Node in callin.io

You are an advanced data analysis and error categorization AI. Your task is to process input data containing error details grouped by “title”. Each group contains multiple error objects. Your goal is to identify unique error types from the comment field, generate concise summaries for each error type, and include relevant job and error values for those errors.

Input Fields:

- title: The product group title.

- data: An array of objects with the following fields:

  - id: Unique identifier for the error.

  - job: ID of the job.

  - comment: Description of the issue.

Objective:

- Group errors by title.

- Analyze the comment field to identify unique error types.

- Generate meaningful summaries for each error type without repeating the comments verbatim.

- Include all relevant job and error values for each error type.

Output Format:

[
  {
    "title": "ProductGroupTitle",
    "errors": [
      {
        "errorType": "Error Type",
        "summary": "Detailed summary of the issue.",
        "job": ["RelevantJobId1", "RelevantJobId2"],
        "error": ["RelevantErrorId1", "RelevantErrorId2"]
      }
    ]
  }
]

Guidelines:

- Extract and group data by title.

- Identify error types from the comment field.

- Write concise, non-redundant summaries for each error type.

- Include only relevant job and error values for each error type.

- Return a clean, well-structured JSON array without additional text.

Input for Analysis: {{ JSON.stringify($input.all()[0].json.data) }}
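The prompt asks the agent to include all relevant job and error values, so it can be worth checking the returned JSON against the input before relying on it. Below is a minimal sketch in plain JavaScript, assuming the input and output shapes shown in the samples above. The name `findMissingErrorIds` is hypothetical and not part of callin.io.

```javascript
// Hypothetical helper (not a callin.io API): returns the input error ids
// that never appear in the agent's output under the same title.
function findMissingErrorIds(input, output) {
  const missing = [];
  for (const group of input) {
    // Find the output group with the same title, if the agent returned one.
    const outGroup = output.find((g) => g.title === group.title);
    // Collect every error id the agent listed for this title.
    const seen = new Set(
      (outGroup ? outGroup.errors : []).flatMap((e) => e.error)
    );
    for (const item of group.data) {
      if (!seen.has(item.id)) missing.push(item.id);
    }
  }
  return missing;
}
```

An empty array means every input `id` was assigned to some error type. Anything else lists the ids the agent dropped.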

Information on your callin.io setup

- callin.io version: 1.63.4

- Database (default: SQLite): cloud

- callin.io EXECUTIONS_PROCESS setting (default: own, main): cloud

- Running callin.io via (Docker, npm, callin.io cloud, desktop app): cloud

- Operating system: cloud

Also, a quick note: all the steps are quite fast, with the exception of the AI Agent step.

Has anyone had any success with this? Mine is also experiencing significant delays.

Mine too, actually. I'm not sure what to do.

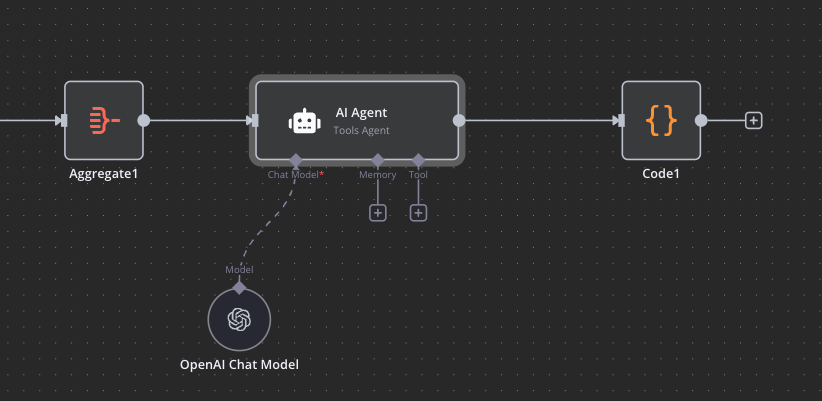

I'm experiencing the exact same issue! I initially connected Deepseek, and it was quite slow. Then, I switched to OpenAI, but there was no improvement. It was only then that I realized the bottleneck wasn't the LLM, but the AI agent itself.

There appears to be a significant delay with the LM Callbacks, which are invoked both before and after the actual LLM request. The majority of the time is being consumed within callin.io, rather than the language model itself. Please refer to this discussion:

Removing the callbacks has significantly improved the performance!
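One way to confirm where the time goes is to time the same request made directly against the model's API and compare it with the duration callin.io reports for the AI Agent step. A minimal sketch, assuming Node.js. `timeAsync` is a hypothetical helper, and the function you pass in stands for your own direct API call.

```javascript
// Hypothetical helper: runs any async function and reports how long it took.
async function timeAsync(fn) {
  const start = Date.now();
  const result = await fn();
  return { result, elapsedMs: Date.now() - start };
}

// Usage sketch: replace the placeholder with your own direct request to the provider.
// timeAsync(() => callModelDirectly(prompt)).then(({ elapsedMs }) =>
//   console.log(`direct call took ${elapsedMs} ms`)
// );
```

If the direct call finishes in a few seconds while the agent step takes much longer, the difference is being spent outside the model request.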

Does anyone have a solution for self-hosted deployments?

Could you share your approaches?

+1

It's quite new, I believe it's only been a couple of weeks. This might be related to the runner configuration, as it may not be fully deployed due to the external JS libraries required in this setup.

Also facing this issue on self-hosted. Initially, responses took 10 seconds, but now even a simple "Hi" with the AI Agent using Deepseek requires 30 seconds.

Same here using Magistral: the API itself replies quickly, but the same request takes much longer through callin.io.

Developers, we would appreciate an answer or confirmation that you are addressing this issue.

This is a common issue encountered when setting up an initial meaningful AI workflow. It's quite disappointing considering the hype around how callin.io offers a pathway to agentic AI.

For what it's worth, I attempted to implement the fix described earlier on a self-hosted Docker instance. I accessed a shell within the container:

docker exec -it --user root <container-name> /bin/sh

And located the relevant file. For the Mistral AI Agent, this would be:

/usr/local/lib/node_modules/callin.io/node_modules/.pnpm/@callin.io+n8n-nodes-langchain@[email protected]+nodes-langchain_90fd06b925ebd5b6cf3e2451e17cc4b6/node_modules/@callin.io/n8n-nodes-langchain/dist/nodes/llms/LmChatMistralCloud/LmChatMistralCloud.node.js

(The lengthy number following langchain is specific to the container and will vary.)

I modified:

callbacks: [new import_N8nLlmTracing.N8nLlmTracing(this)],

To:

callbacks: [],

This resulted in a slight initial improvement (around 15 seconds instead of over a minute), but performance degrades with larger context windows and also depends on the specific query. A common timing I'm observing is still around 40 seconds.

Furthermore, a significant side effect is the malfunction of animations within the workflow window (e.g., the spinning arrows when the chat node is active, and the counter for Simple Memory as the context window expands).

Overall, the change wasn't particularly beneficial.
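For anyone who wants to reproduce the edit above and measure it on their own instance, it can be scripted instead of done by hand. This is only a sketch: `removeTracingCallbacks` is a hypothetical helper, and it assumes the file contains the exact line quoted above, which may not hold for other versions or other LLM nodes.

```javascript
// The exact line quoted in this post; other versions or nodes may differ.
const TRACING_CALLBACKS =
  "callbacks: [new import_N8nLlmTracing.N8nLlmTracing(this)],";

// Hypothetical helper: returns the source text with the tracing callback removed.
// Throws instead of silently returning unchanged text when the line is absent.
function removeTracingCallbacks(source) {
  if (!source.includes(TRACING_CALLBACKS)) {
    throw new Error("Tracing callback line not found; file left untouched.");
  }
  return source.split(TRACING_CALLBACKS).join("callbacks: [],");
}

// Usage sketch inside the container (use the path from above; the hash differs per install):
// const fs = require("fs");
// const file = "<path to LmChatMistralCloud.node.js>";
// fs.writeFileSync(file, removeTracingCallbacks(fs.readFileSync(file, "utf8")));
```

Keep a copy of the original file first, since the side effects described above apply to the scripted edit as well. The process most likely needs a restart before the change takes effect.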

I found the Mistral node to be among the slowest, with the OpenAI node being slightly faster (though typically still exceeding 10 seconds). Groq offers the best performance at approximately 5-6 seconds (without any code modifications), but it primarily supports specific open models, notably Llama, DeepSeek, and Qwen.

Hello everyone,

If you're experiencing slowness with the AI Agent, the problem is probably the LlmTracing callbacks.

I've created a custom Docker image that excludes them, which significantly sped up my workflows. You can give it a try if you're using Docker:

blackpc/callin.io:1.99.0-no-llm-tracing

Please be aware that this is an unofficial community solution and is intended as a temporary fix. I hope this helps!