I’m encountering an issue with an AI Agent workflow and would appreciate some assistance.

My current setup involves:

- Extracting text from a document using OCR (this document contains multiple transactions, similar to a bank statement).

- Sending this extracted text to an AI Agent node (utilizing Mistral).

- The AI Agent is configured with the Google Sheets tool, and its objective is to identify each debit transaction and add it as a distinct row in my spreadsheet via this tool.

The core problem arises when the data is sent to Google Sheets: it consistently overwrites the existing data on the same row. It fails to append new rows for subsequent transactions identified in later executions.

I've tried several approaches to resolve this:

- Experimenting with different prompts.

- Adjusting the formatting of the Google Sheet (e.g., setting columns to numeric or plain text).

- Implementing other suggestions found on this forum.

- Utilizing both explicit instructions for the Google Sheets tool within the prompt (such as instructing it to “create a new row”) and what might be considered automatic “append row” features.

Regrettably, none of these attempts have resolved the issue – the data continues to overwrite the same row.

Has anyone else faced a similar situation? Do you have any suggestions on how to ensure the Google Sheets action (triggered by the AI Agent) appends new rows correctly rather than overwriting existing ones?

I believe the AI agent, by default, reverts to updating A2 or the first empty row it finds. It doesn't seem like the AI agent can retain the current index of the Google Sheet. What if you allowed the Agent to simply output parsed data, and then you appended to the Google Sheet outside of the agent using a Google Sheet append node with callin.io?

Yes, I've experimented with that approach as well. The main challenge appears to be handling over 10 entries arriving within the same second, and I'm uncertain about Google's internal processing for such rapid inputs.

I've already attempted to extract the data from the agent and send it to Google Sheets individually, but the process remains too quick. Introducing a delay only slows down the entire operation uniformly, without resolving the fundamental problem.

Do you have any alternative suggestions I could explore?

I suspect you're processing records in parallel for Google Sheets. I don't believe Google offers row-level isolation in the same way a database does. Have you considered using SplitInBatches with a batch size of 1? This approach would emit one transaction at a time, allowing that single transaction to flow to the Google Sheets append row node. After processing, incorporating a wait node with a delay might resolve your issue.

I'm encountering the same issue and haven't found a solution yet. My current workaround involves having the AI report after each individual step. Here's the prompt I'm using:

You are a workflow agent tasked with processing LinkedIn contact search results and writing them to Google Sheets, one contact at a time.

TASK OBJECTIVE

Your mission is to ENSURE that each contact is APPENDED INDIVIDUALLY to the Google Sheet. ONLY AFTER the write operation completes, you must CONFIRM success to the user and THEN proceed to the next contact. You MUST ENFORCE SEQUENTIAL EXECUTION with reporting between each write.

INPUT PARAMETERS

- Keyword: {{ $json["keyword"] }}

- maxResults: {{ $json.maxResults }}

- account: {{ $json.account }}

STEP-BY-STEP EXECUTION (CHAIN OF THOUGHTS)

- UNDERSTAND the task: You need to first search for contacts using LinkedIn’s API and then write each result to Google Sheets individually.

- BASICS: You will receive a batch of contacts. You are to process them one at a time.

-

BREAK DOWN the task:

- Step 1: Invoke the LinkedIn Search Contacts API using the provided keyword.

-

Step 2: For EACH contact retrieved:

- WAIT for the Google Sheets Append operation to finish.

- ONCE CONFIRMED, send a message:

"✅ Contact [Name or ID] successfully written to Google Sheets." - THEN move on to the next contact.

-

ANALYZE: If you do not wait for each Google Sheets node to complete, you risk having multiple contacts written to the same row or contacts being skipped due to concurrency issues.

-

BUILD: Your loop MUST:

- Execute the Google Sheets append operation synchronously.

- Await confirmation before proceeding to the next step.

- Log or report the success for each entry before continuing.

-

EDGE CASES:

- IF a contact fails to write, CATCH the error, LOG it, and CONTINUE to the next contact.

- DO NOT halt the entire process due to a single error.

-

FINAL BEHAVIOR: The agent must process entries in strict sequence, reporting success for each individual entry.

ENFORCED RULES

- NEVER batch or parallelize writes to Google Sheets.

- ALWAYS WAIT for each append operation to complete before reporting or proceeding.

- ALWAYS report success explicitly after each individual entry is processed.

EXAMPLE FLOW

- The search operation returns 5 contacts.

- The first contact is written → Google Sheets confirms the write → The agent reports success → The agent proceeds to the second contact.

- This process repeats until all contacts have been processed.

I encountered a comparable issue and successfully resolved it without heavily depending on the AI agent/models. Regrettably, you must remove the Sheets tool from the agent, as it was unable to manage it correctly. You need to direct the agent to output the result in JSON format, using "column_name": "value" as in a standard JSON object. Unfortunately, I haven't discovered how to prevent it from prepending json and appending ```` to the output—if anyone has found a solution, please share. I circumvented this by incorporating a "function block" and a basic JS function to remove those extraneous characters. This is required so that the "Split Out" block can segment the data into individual table rows, which I then fed into the Sheets block, mapping the values from the JSON.

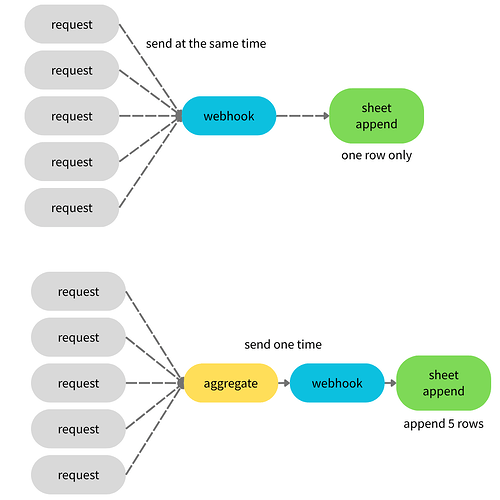

I'm encountering a similar issue. Webhook events are being received by a Google Sheet via a dedicated service account. When events arrive within a 5-second interval (which happens frequently), Google Sheets overwrites existing data, even when the Google Sheets node is set to 'Append only' mode. The data vanishes, despite being visible in the execution logs. We're observing numerous bugs across various node types; callin.io appears to be riddled with issues and is not yet production-ready.

Perhaps the issue stems from the LLM Model. Have you experimented with GPT 4.1? It's a capable model for code and tasks (consider the 4.1 mini if cost is a concern).

Here's my testing on this topic.

Just resolved another client's issue yesterday.

If there is a service call to Google Sheet that appends multiple times almost simultaneously, the problem will occur, with only one row being appended.

For an AI Agent, I believe we need to instruct it not to append multiple times.

Let it collect all the results and then append them once with multiple rows.